HeadGAP: Few-shot 3D Head Avatar via Generalizable Gaussian Priors

Xiaozheng Zheng, Chao Wen, Zhaohu Li, Weiyi Zhang, Zhuo Su, Xu Chang, Yang Zhao, Zheng Lv, Xiaoyuan Zhang, Yongjie Zhang, Guidong Wang, Lan Xu

2024-08-13

Summary

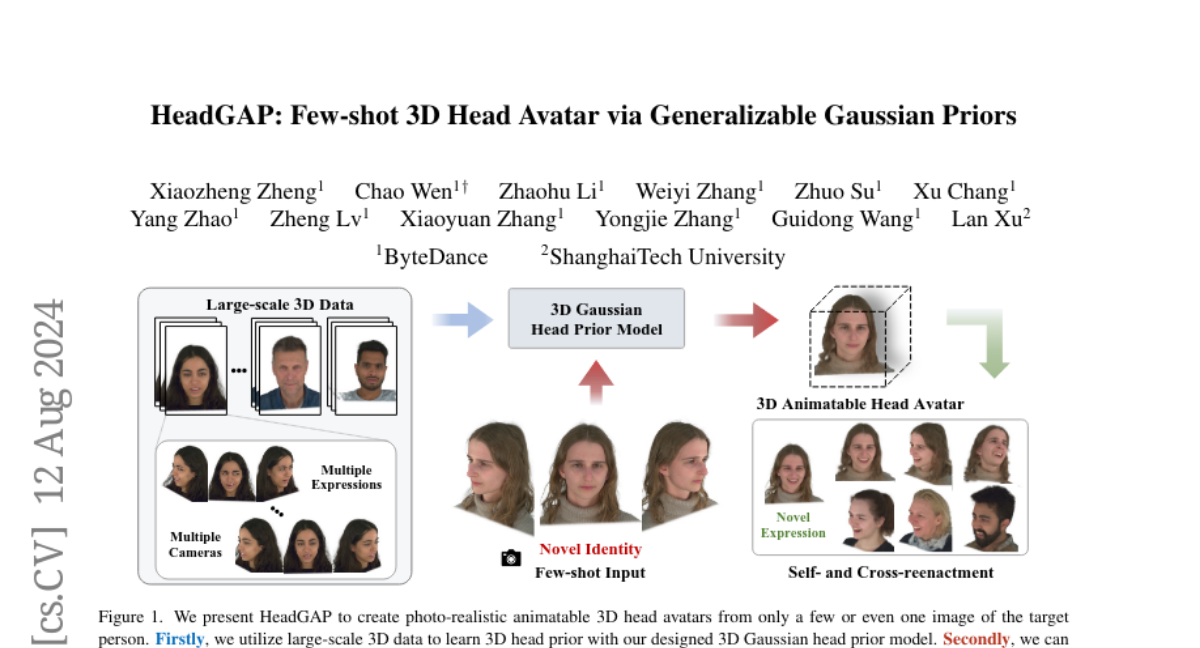

This paper introduces HeadGAP, a new method for creating 3D head avatars that can be personalized quickly and accurately using only a few example images.

What's the problem?

Creating realistic 3D head avatars from limited data is challenging because traditional methods often need many images of a person to produce high-quality results. In real-life situations, that much data is often unavailable, and only a few pictures of the person may exist.

What's the solution?

HeadGAP uses a two-phase approach. In the prior learning phase, it learns general features of 3D heads from a large-scale, multi-view dynamic dataset. In the avatar creation phase, it applies this knowledge to build a personalized avatar from just a few images. The head is represented with Gaussian Splatting, and part-based dynamic modeling helps the avatars look realistic and animate smoothly. For fast personalization, the method first fits a personalized latent code to the new images (inversion) and then fine-tunes the model to capture the individual's characteristics.
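In Gaussian Splatting, a head is represented by many 3D Gaussian primitives, each carrying a center, an orientation, a scale, an opacity, and a color. The sketch below shows how the original 3D Gaussian Splatting formulation builds a primitive's covariance from a unit quaternion and per-axis scales, Σ = R S Sᵀ Rᵀ, with scales stored in log space. It is a generic illustration only: the function names are hypothetical, and this summary does not specify which attributes HeadGAP predicts or how it activates them.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) into a 3x3 rotation matrix."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)  # normalize first
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def gaussian_covariance(q, log_scale):
    """Covariance Sigma = R S S^T R^T with S = diag(exp(log_scale)); symmetric PSD by construction."""
    M = quat_to_rotmat(q) @ np.diag(np.exp(log_scale))
    return M @ M.T

def gaussian_weight(x, mean, q, log_scale, opacity):
    """Opacity-scaled, unnormalized Gaussian falloff of one primitive at 3D point x.

    Evaluated in 3D for illustration; the renderer instead projects each Gaussian
    to 2D screen space and alpha-blends the projected splats.
    """
    d = np.asarray(x, dtype=float) - np.asarray(mean, dtype=float)
    cov = gaussian_covariance(q, log_scale)
    return opacity * np.exp(-0.5 * d @ np.linalg.solve(cov, d))

# An axis-aligned primitive (identity rotation) with scales 1, 2, 3 along x, y, z.
cov = gaussian_covariance([1.0, 0.0, 0.0, 0.0], np.log([1.0, 2.0, 3.0]))
print(np.round(cov, 6))  # diagonal matrix with entries 1, 4, 9
```

Storing a rotation and log-scales instead of a raw covariance keeps Σ valid (symmetric positive semi-definite) under unconstrained gradient updates, which is why this parameterization is common when a network predicts primitive attributes.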

Why does it matter?

This research is significant because it makes it easier to create customized 3D avatars for various applications, such as gaming, virtual reality, and social media. By enabling high-quality avatar creation from minimal data, it opens up new possibilities for personal expression and interaction in digital environments.

Abstract

In this paper, we present a novel 3D head avatar creation approach capable of generalizing from few-shot in-the-wild data with high fidelity and robust animation. Given the underconstrained nature of this problem, incorporating prior knowledge is essential. Therefore, we propose a framework comprising prior learning and avatar creation phases. The prior learning phase leverages 3D head priors derived from a large-scale multi-view dynamic dataset, and the avatar creation phase applies these priors for few-shot personalization. Our approach effectively captures these priors by utilizing a Gaussian Splatting-based auto-decoder network with part-based dynamic modeling. Our method employs identity-shared encoding with personalized latent codes for individual identities to learn the attributes of Gaussian primitives. During the avatar creation phase, we achieve fast head avatar personalization by leveraging inversion and fine-tuning strategies. Extensive experiments demonstrate that our model effectively exploits head priors and successfully generalizes them to few-shot personalization, achieving photo-realistic rendering quality, multi-view consistency, and stable animation.
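The auto-decoder, inversion, and fine-tuning steps named in the abstract can be illustrated with a deliberately tiny stand-in. In this sketch, the identity-shared network is a hypothetical linear decoder (`W`, `b`) that maps a per-identity latent code `z` to a flattened vector of Gaussian-primitive attributes, and the training signal is a masked squared error on those attributes rather than a rendering loss on images. All function names, sizes, and learning rates are assumptions for illustration; the paper's network is Gaussian Splatting-based with part-based dynamic modeling and image supervision, none of which this toy reproduces.

```python
import numpy as np

def decode(W, b, z):
    """Identity-shared decoder (toy linear stand-in): latent code -> flattened Gaussian attributes."""
    return W @ z + b

def loss_and_grads(W, b, z, y, mask, lam):
    """Masked squared error plus an L2 prior on the latent code.

    `mask` is binary: 1 for observed attribute entries, 0 for unobserved ones (a toy
    stand-in for supervising only what the few available images show).
    Returns (loss, dL/dW, dL/db, dL/dz).
    """
    r = mask * (decode(W, b, z) - y)
    loss = r @ r + lam * z @ z
    return loss, 2 * np.outer(r, z), 2 * r, 2 * W.T @ r + 2 * lam * z

def learn_prior(Y, k, steps=2000, lr=0.01, lam=1e-3, seed=0):
    """Prior learning: jointly fit a shared decoder and one latent code per identity (no encoder)."""
    rng = np.random.default_rng(seed)
    n, m = Y.shape
    W, b = 0.1 * rng.standard_normal((m, k)), np.zeros(m)
    Z = 0.1 * rng.standard_normal((n, k))
    full = np.ones(m)  # every attribute is observed for training identities
    for _ in range(steps):
        gW_sum, gb_sum = np.zeros_like(W), np.zeros_like(b)
        for i in range(n):
            _, gW, gb, gz = loss_and_grads(W, b, Z[i], Y[i], full, lam)
            gW_sum += gW
            gb_sum += gb
            Z[i] -= lr * gz  # personalized latent code update
        W -= lr * gW_sum / n  # shared decoder update, averaged over identities
        b -= lr * gb_sum / n
    return W, b, Z

def invert(W, b, y, mask, steps=2000, lr=0.01, lam=1e-3):
    """Inversion: freeze the shared decoder and optimize only a new latent code."""
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        *_, gz = loss_and_grads(W, b, z, y, mask, lam)
        z -= lr * gz
    return z

def fine_tune(W, b, z, y, mask, steps=200, lr=1e-3, lam=1e-3):
    """Fine-tuning: start from the inverted code and update decoder and code together."""
    W, b, z = W.copy(), b.copy(), z.copy()
    for _ in range(steps):
        _, gW, gb, gz = loss_and_grads(W, b, z, y, mask, lam)
        W, b, z = W - lr * gW, b - lr * gb, z - lr * gz
    return W, b, z

# Synthetic training data: 20 identities, 12 attributes driven by 3 latent factors.
rng = np.random.default_rng(1)
W_true, b_true = rng.standard_normal((12, 3)), rng.standard_normal(12)
Y = rng.standard_normal((20, 3)) @ W_true.T + b_true
W, b, _ = learn_prior(Y, k=3)

# Few-shot identity: only 8 of the 12 attributes are observed.
y_new = W_true @ rng.standard_normal(3) + b_true
mask = np.array([1.0] * 8 + [0.0] * 4)
z_inv = invert(W, b, y_new, mask)
W_ft, b_ft, z_ft = fine_tune(W, b, z_inv, y_new, mask)
```

In this toy, inversion only moves `z` within the space the learned prior already spans, which is what lets 8 observed entries constrain the 4 unobserved ones. Fine-tuning then relaxes the shared weights so the model can fit details the prior cannot express, at the risk of overfitting the few observations if the step count or learning rate is large.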