Hierarchical Patch Diffusion Models for High-Resolution Video Generation

Ivan Skorokhodov, Willi Menapace, Aliaksandr Siarohin, Sergey Tulyakov

2024-06-13

Summary

This paper presents a new method called Hierarchical Patch Diffusion Models designed to generate high-resolution videos more efficiently. It improves how diffusion models work by focusing on smaller parts of the video and using advanced techniques to enhance quality.

What's the problem?

Diffusion models are powerful tools for creating images and videos, but they struggle when it comes to producing high-resolution content. Scaling these models requires complex changes that can make them less efficient and harder to use in practical applications. This means that generating high-quality videos can take a lot of time and resources.

What's the solution?

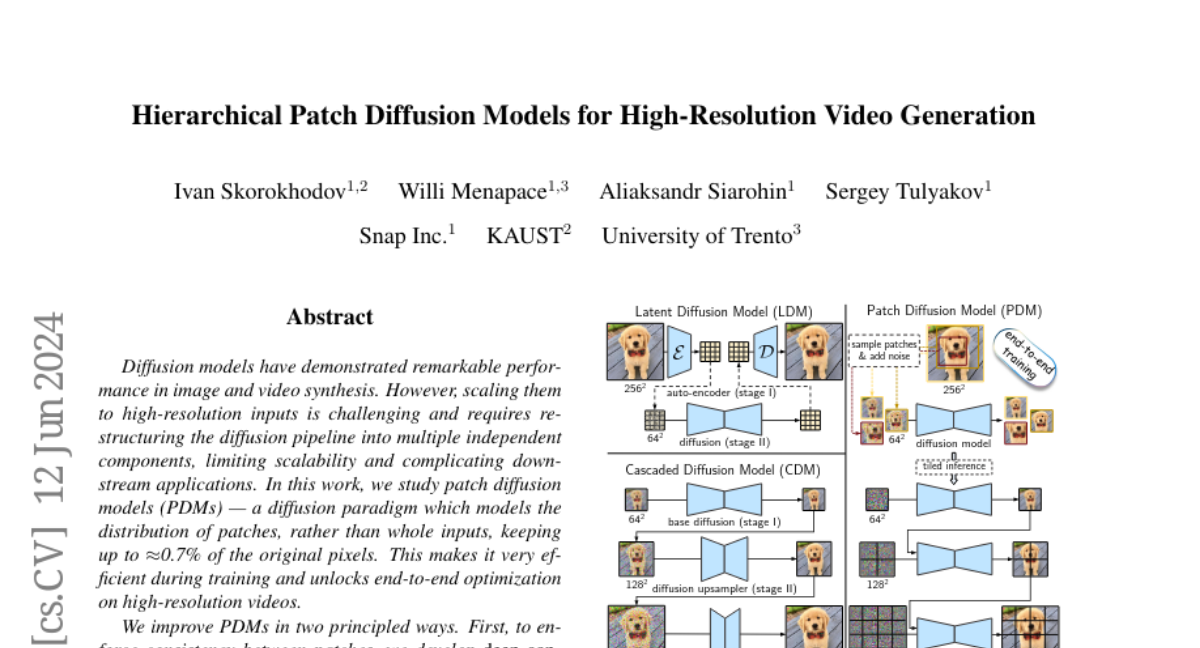

The authors developed a hierarchical approach that breaks down video generation into smaller, manageable patches instead of processing the entire video frame at once. They introduced two key techniques: deep context fusion, which helps maintain consistency between different patches by sharing information from lower-resolution patches to higher ones, and adaptive computation, which focuses processing power on the most important details first. These improvements allow the model to generate high-quality videos faster and more efficiently.

Why it matters?

This research is important because it sets a new standard for video generation technology, enabling the creation of high-resolution videos more effectively than before. By improving the efficiency of diffusion models, this work could lead to better applications in entertainment, education, and virtual reality, where high-quality video content is essential.

Abstract

Diffusion models have demonstrated remarkable performance in image and video synthesis. However, scaling them to high-resolution inputs is challenging and requires restructuring the diffusion pipeline into multiple independent components, limiting scalability and complicating downstream applications. This makes it very efficient during training and unlocks end-to-end optimization on high-resolution videos. We improve PDMs in two principled ways. First, to enforce consistency between patches, we develop deep context fusion -- an architectural technique that propagates the context information from low-scale to high-scale patches in a hierarchical manner. Second, to accelerate training and inference, we propose adaptive computation, which allocates more network capacity and computation towards coarse image details. The resulting model sets a new state-of-the-art FVD score of 66.32 and Inception Score of 87.68 in class-conditional video generation on UCF-101 256^2, surpassing recent methods by more than 100%. Then, we show that it can be rapidly fine-tuned from a base 36times 64 low-resolution generator for high-resolution 64 times 288 times 512 text-to-video synthesis. To the best of our knowledge, our model is the first diffusion-based architecture which is trained on such high resolutions entirely end-to-end. Project webpage: https://snap-research.github.io/hpdm.