HiRED: Attention-Guided Token Dropping for Efficient Inference of High-Resolution Vision-Language Models in Resource-Constrained Environments

Kazi Hasan Ibn Arif, JinYi Yoon, Dimitrios S. Nikolopoulos, Hans Vandierendonck, Deepu John, Bo Ji

2024-08-26

Summary

This paper introduces HiRED, a new method that improves the efficiency of high-resolution Vision-Language Models (VLMs) by selectively dropping less important visual tokens during processing.

What's the problem?

High-resolution VLMs split an input image into multiple partitions and encode each one, which produces a very large number of visual tokens. Processing all of these tokens is computationally expensive, especially on commodity GPUs in resource-constrained settings, so inference becomes slow and memory-hungry.

What's the solution?

The authors propose HiRED (High-Resolution Early Dropping), a technique that drops less important visual tokens before they reach the language model stage, keeping only as many as a fixed token budget allows. It uses the vision encoder's own attention to decide which tokens matter most, so it needs no extra training and can be plugged into existing high-resolution VLMs. By keeping only the most important tokens, HiRED maintains accuracy while increasing generation throughput and reducing latency and memory usage.

Why does it matter?

This research is important because it makes it easier to use advanced VLMs in everyday devices that may not have a lot of computing power. By improving how these models work, HiRED can enable faster and more efficient applications in areas like real-time image analysis, gaming, and augmented reality, making advanced technology more accessible.

Abstract

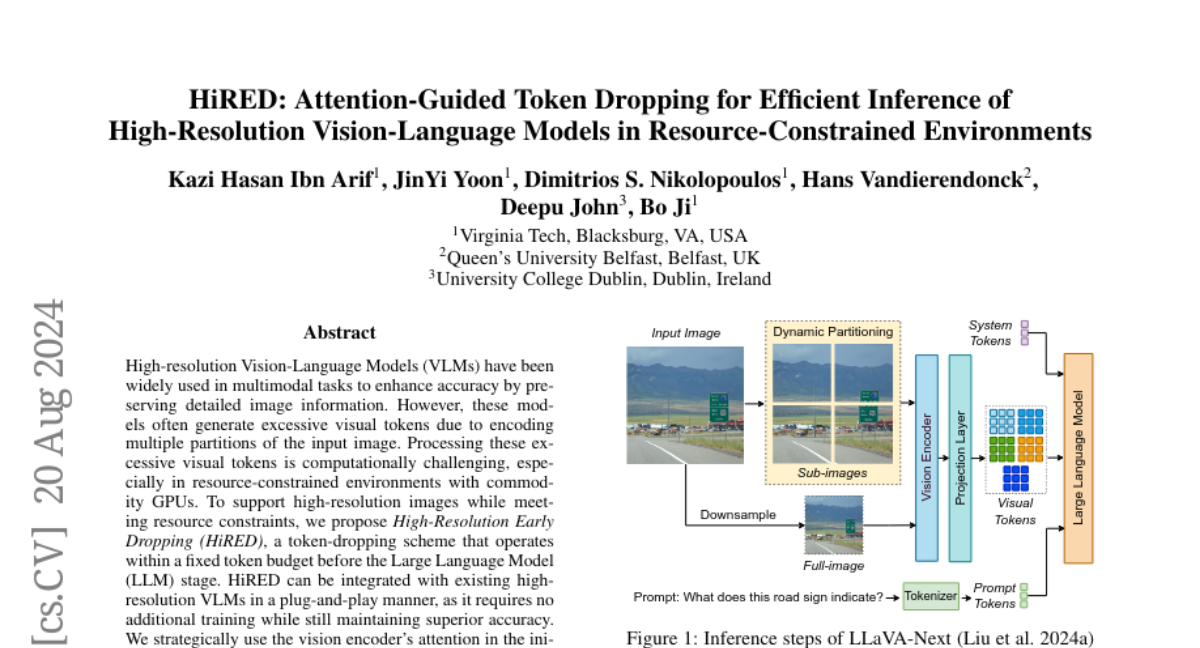

High-resolution Vision-Language Models (VLMs) have been widely used in multimodal tasks to enhance accuracy by preserving detailed image information. However, these models often generate excessive visual tokens due to encoding multiple partitions of the input image. Processing these excessive visual tokens is computationally challenging, especially in resource-constrained environments with commodity GPUs. To support high-resolution images while meeting resource constraints, we propose High-Resolution Early Dropping (HiRED), a token-dropping scheme that operates within a fixed token budget before the Large Language Model (LLM) stage. HiRED can be integrated with existing high-resolution VLMs in a plug-and-play manner, as it requires no additional training while still maintaining superior accuracy. We strategically use the vision encoder's attention in the initial layers to assess the visual content of each image partition and allocate the token budget accordingly. Then, using the attention in the final layer, we select the most important visual tokens from each partition within the allocated budget, dropping the rest. Empirically, when applied to LLaVA-Next-7B on an NVIDIA TESLA P40 GPU, HiRED with a 20% token budget increases token generation throughput by 4.7×, reduces first-token generation latency by 15 seconds, and saves 2.3 GB of GPU memory for a single inference.
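The two-stage idea in the abstract (split a fixed token budget across image partitions, then keep the highest-scoring tokens within each partition) can be illustrated with a minimal Python sketch. This is not the authors' implementation. The abstract does not say how attention is turned into scores or how the budget is divided, so the sketch takes precomputed scores as plain lists and assumes a simple proportional split. The function names `allocate_budget`, `select_tokens`, and `drop_tokens` are hypothetical.

```python
from typing import List


def allocate_budget(partition_scores: List[float], total_budget: int) -> List[int]:
    """Split total_budget across partitions in proportion to their scores.

    ASSUMPTION: proportional allocation with largest-remainder rounding.
    The abstract only says the budget is allocated "accordingly".
    """
    n = len(partition_scores)
    total = sum(partition_scores)
    if total <= 0:
        # No usable signal: fall back to an even split.
        raw = [total_budget / n] * n
    else:
        raw = [total_budget * s / total for s in partition_scores]
    budgets = [int(r) for r in raw]  # floor of each share
    leftover = total_budget - sum(budgets)
    # Hand the remaining slots to the largest fractional remainders.
    by_remainder = sorted(range(n), key=lambda i: raw[i] - budgets[i], reverse=True)
    for i in by_remainder[:leftover]:
        budgets[i] += 1
    return budgets


def select_tokens(token_scores: List[float], budget: int) -> List[int]:
    """Return indices of the `budget` highest-scoring tokens, in original order."""
    budget = min(budget, len(token_scores))
    ranked = sorted(range(len(token_scores)), key=lambda i: token_scores[i], reverse=True)
    return sorted(ranked[:budget])


def drop_tokens(
    partition_scores: List[float],
    token_scores: List[List[float]],
    total_budget: int,
) -> List[List[int]]:
    """For each partition, return the indices of the tokens that are kept."""
    budgets = allocate_budget(partition_scores, total_budget)
    return [select_tokens(scores, b) for scores, b in zip(token_scores, budgets)]


# Toy example: 3 partitions with 4 tokens each, keeping 4 of 12 tokens.
# The scores stand in for early-layer (per partition) and final-layer
# (per token) attention; real values would come from the vision encoder.
partition_scores = [5.0, 3.0, 2.0]
token_scores = [
    [0.1, 0.9, 0.5, 0.2],
    [0.3, 0.2, 0.8, 0.1],
    [0.7, 0.6, 0.1, 0.2],
]
kept = drop_tokens(partition_scores, token_scores, total_budget=4)
print(kept)  # [[1, 2], [2], [0]]
```

In this toy run the first partition has the highest score and so receives two of the four slots, while the other two partitions keep one token each. Only the kept tokens would be passed on to the LLM stage.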