HunyuanCustom: A Multimodal-Driven Architecture for Customized Video Generation

Teng Hu, Zhentao Yu, Zhengguang Zhou, Sen Liang, Yuan Zhou, Qin Lin, Qinglin Lu

2025-05-08

Summary

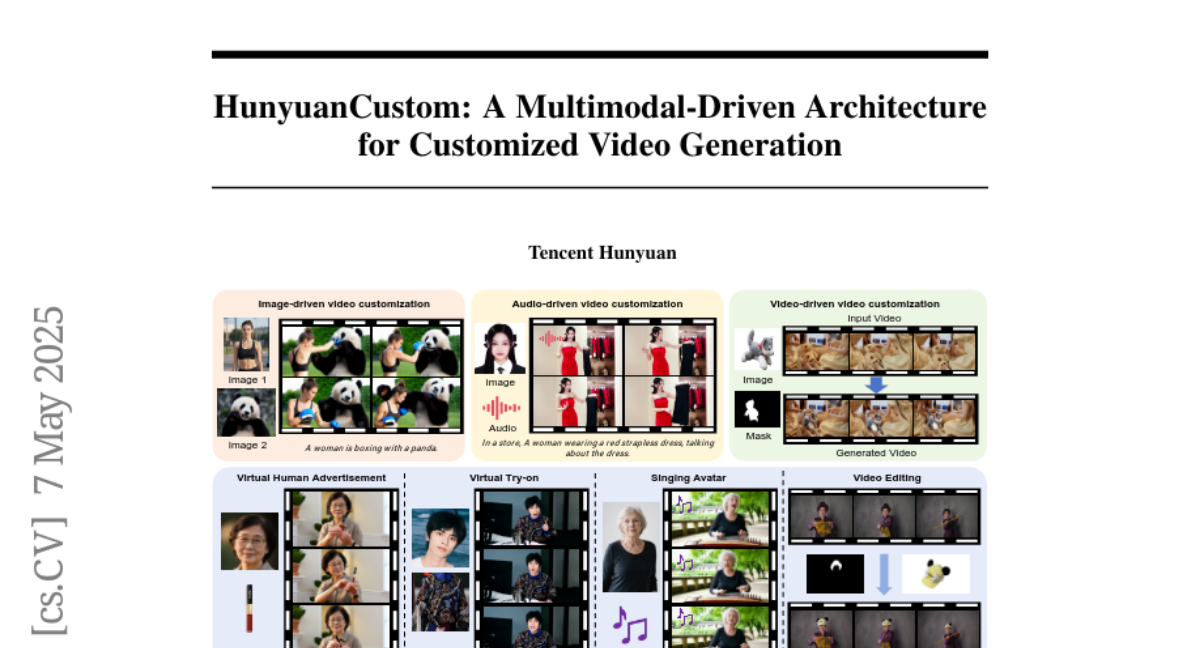

This paper talks about HunyuanCustom, which is a new system for creating videos that can use different types of input, like images, text, or audio, and make sure that important details, such as a person's identity, stay consistent throughout the video.

What's the problem?

The problem is that most video generation tools have trouble keeping things like a person's face or style the same when making videos from different types of input. They also struggle to handle many types of input at once, which can make the results look weird or unrealistic.

What's the solution?

The researchers designed HunyuanCustom as a framework that combines, or 'fuses,' information from different sources in a smart way and uses special techniques to keep important features, like someone's identity, the same throughout the video. Their system can take in all kinds of input and blend them smoothly, and it works better than other existing methods.

Why it matters?

This matters because it means people can create more realistic and personalized videos using all sorts of information, without losing important details. It opens up new possibilities for creative projects, entertainment, and even professional uses where identity and consistency are really important.

Abstract

HunyuanCustom is a multi-modal video generation framework that enhances identity consistency and handles various types of input conditions through specific fusion and injection mechanisms, outperforming existing methods.