Image as an IMU: Estimating Camera Motion from a Single Motion-Blurred Image

Jerred Chen, Ronald Clark

2025-03-27

Summary

This paper shows how to estimate a camera's motion from a single blurry picture, without needing a sharp video or extra motion sensors.

What's the problem?

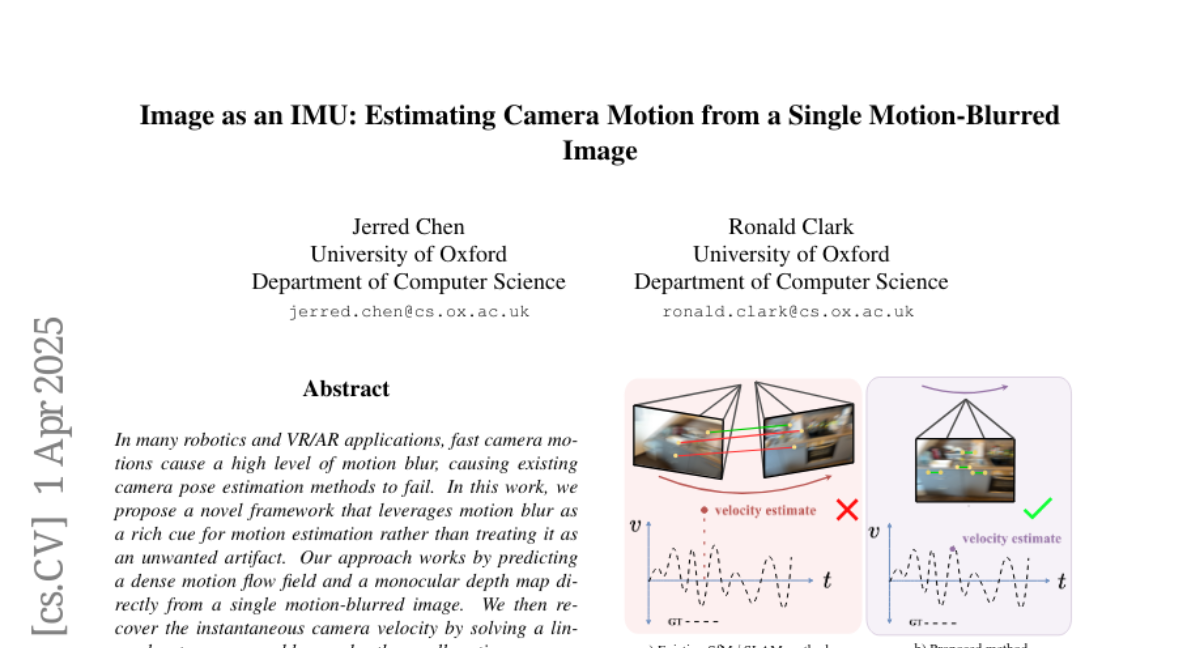

When a camera moves quickly, the images it captures come out blurry, which makes it hard to tell how the camera was moving. Existing camera pose estimation methods tend to fail on such images.

What's the solution?

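The researchers developed a method that treats the blur itself as a motion cue. From a single blurred image, a model predicts how each pixel moved and how far away the scene is, and these predictions are then used to compute the camera's velocity. In effect, the blurry image becomes a sensor that measures motion, much like an IMU.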

Why does it matter?

This work matters because it can help robots and VR/AR systems understand how they're moving, even when the camera is shaking or moving quickly.

Abstract

In many robotics and VR/AR applications, fast camera motions cause a high level of motion blur, causing existing camera pose estimation methods to fail. In this work, we propose a novel framework that leverages motion blur as a rich cue for motion estimation rather than treating it as an unwanted artifact. Our approach works by predicting a dense motion flow field and a monocular depth map directly from a single motion-blurred image. We then recover the instantaneous camera velocity by solving a linear least squares problem under the small motion assumption. In essence, our method produces an IMU-like measurement that robustly captures fast and aggressive camera movements. To train our model, we construct a large-scale dataset with realistic synthetic motion blur derived from ScanNet++v2 and further refine our model by training end-to-end on real data using our fully differentiable pipeline. Extensive evaluations on real-world benchmarks demonstrate that our method achieves state-of-the-art angular and translational velocity estimates, outperforming current methods like MASt3R and COLMAP.
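
To make the linear least squares step concrete, here is a minimal sketch of how a camera velocity could be recovered from a flow field and a depth map. It is not the authors' implementation: the abstract does not give the exact formulation, so the sketch assumes the classical instantaneous motion-field model for a static scene, normalized image coordinates (pixel coordinates with the intrinsics already removed), and a flow field expressed as image velocity. The function names `motion_field_matrix` and `estimate_velocity` are hypothetical.

```python
import numpy as np


def motion_field_matrix(x, y, depth):
    """Build the (2N x 6) matrix A with flow = A @ [vx, vy, vz, wx, wy, wz].

    Assumed model (classical motion field, static scene, small motion):
      u = (-vx + x*vz)/Z + x*y*wx - (1 + x^2)*wy + y*wz
      v = (-vy + y*vz)/Z + (1 + y^2)*wx - x*y*wy - x*wz
    where (x, y) are normalized image coordinates and Z is the depth.
    """
    x, y = np.ravel(x), np.ravel(y)
    inv_z = 1.0 / np.ravel(depth)
    zeros = np.zeros_like(x)
    rows_u = np.stack([-inv_z, zeros, x * inv_z, x * y, -(1 + x**2), y], axis=1)
    rows_v = np.stack([zeros, -inv_z, y * inv_z, 1 + y**2, -x * y, -x], axis=1)
    return np.concatenate([rows_u, rows_v], axis=0)


def estimate_velocity(flow_u, flow_v, x, y, depth):
    """Solve the least squares problem for translational and angular velocity."""
    A = motion_field_matrix(x, y, depth)
    b = np.concatenate([np.ravel(flow_u), np.ravel(flow_v)])
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:3], solution[3:]


# Synthetic check: generate flow from a known motion, then recover it.
rng = np.random.default_rng(0)
xs, ys = np.meshgrid(np.linspace(-0.5, 0.5, 16), np.linspace(-0.4, 0.4, 12))
depth = rng.uniform(1.0, 5.0, size=xs.shape)
true_motion = np.array([0.2, -0.1, 0.05, 0.01, -0.03, 0.02])

flow = motion_field_matrix(xs, ys, depth) @ true_motion
flow_u = flow[: xs.size].reshape(xs.shape)
flow_v = flow[xs.size :].reshape(xs.shape)

v_est, w_est = estimate_velocity(flow_u, flow_v, xs, ys, depth)
```

In this sketch the translational terms are divided by depth, so the recovered translational velocity is only as well scaled as the depth map; the rotational terms do not depend on depth. If the predicted flow is a displacement over the exposure rather than a velocity, dividing the result by the exposure time would be one way to convert it, though that is an assumption here as well.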