Imagen 3

Imagen-Team-Google, Jason Baldridge, Jakob Bauer, Mukul Bhutani, Nicole Brichtova, Andrew Bunner, Kelvin Chan, Yichang Chen, Sander Dieleman, Yuqing Du, Zach Eaton-Rosen, Hongliang Fei, Nando de Freitas, Yilin Gao, Evgeny Gladchenko, Sergio Gómez Colmenarejo, Mandy Guo, Alex Haig, Will Hawkins, Hexiang Hu, Huilian Huang, Tobenna Peter Igwe

2024-08-14

Summary

This paper introduces Imagen 3, a latent diffusion model that generates high-quality images from text prompts.

What's the problem?

While many models can generate images from text, they often struggle to produce high-quality results consistently and may not address issues related to safety and representation in the images they create.

What's the solution?

The researchers assess Imagen 3 with both quality and responsibility evaluations, in which it was preferred over other state-of-the-art models at the time of evaluation. They also focus on making the model safer and more responsible by identifying potential risks and applying methods to minimize harm.

Why does it matter?

This research improves the ability of AI to create images from text while also addressing ethical concerns about how those images are generated and used. By working to make AI-generated images safer and more representative, it helps build trust in AI technologies.

Abstract

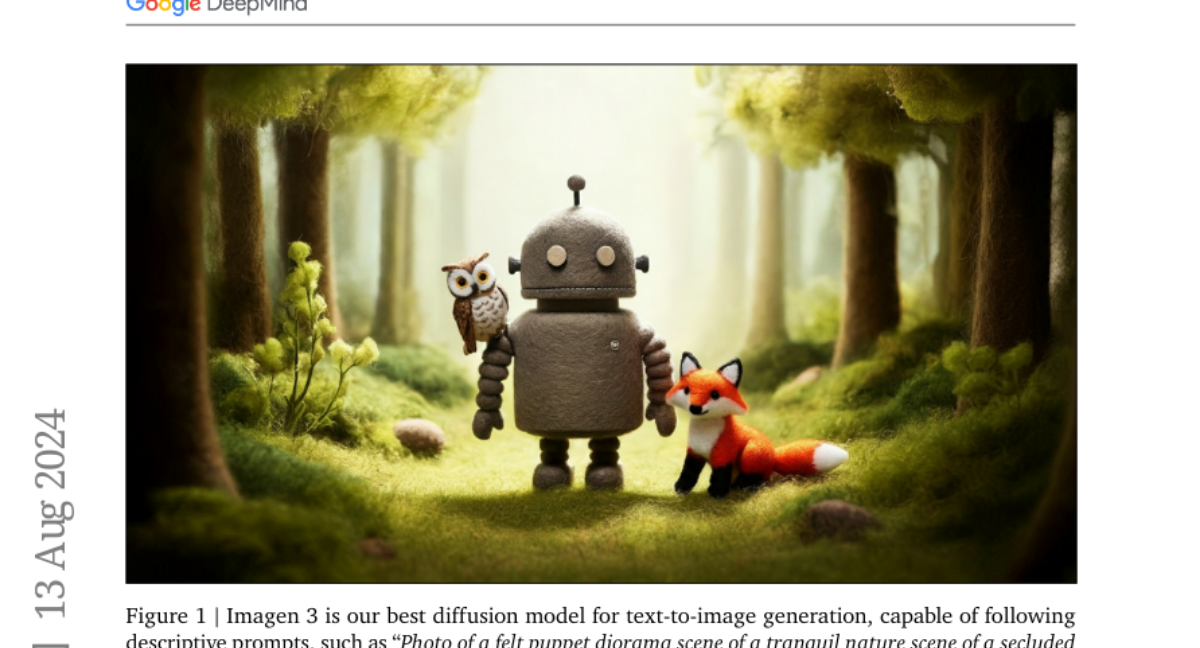

We introduce Imagen 3, a latent diffusion model that generates high-quality images from text prompts. We describe our quality and responsibility evaluations. Imagen 3 is preferred over other state-of-the-art (SOTA) models at the time of evaluation. In addition, we discuss issues around safety and representation, as well as methods we used to minimize the potential harm of our models.