In-Context Edit: Enabling Instructional Image Editing with In-Context Generation in Large Scale Diffusion Transformer

Zechuan Zhang, Ji Xie, Yu Lu, Zongxin Yang, Yi Yang

2025-04-30

Summary

This paper presents a new way for AI to edit images based on written instructions, helping the AI understand more accurately what changes people want in their pictures.

What's the problem?

Current AI tools often need lots of training and huge amounts of data to learn how to follow detailed instructions for editing images, which makes the process slow and less flexible.

What's the solution?

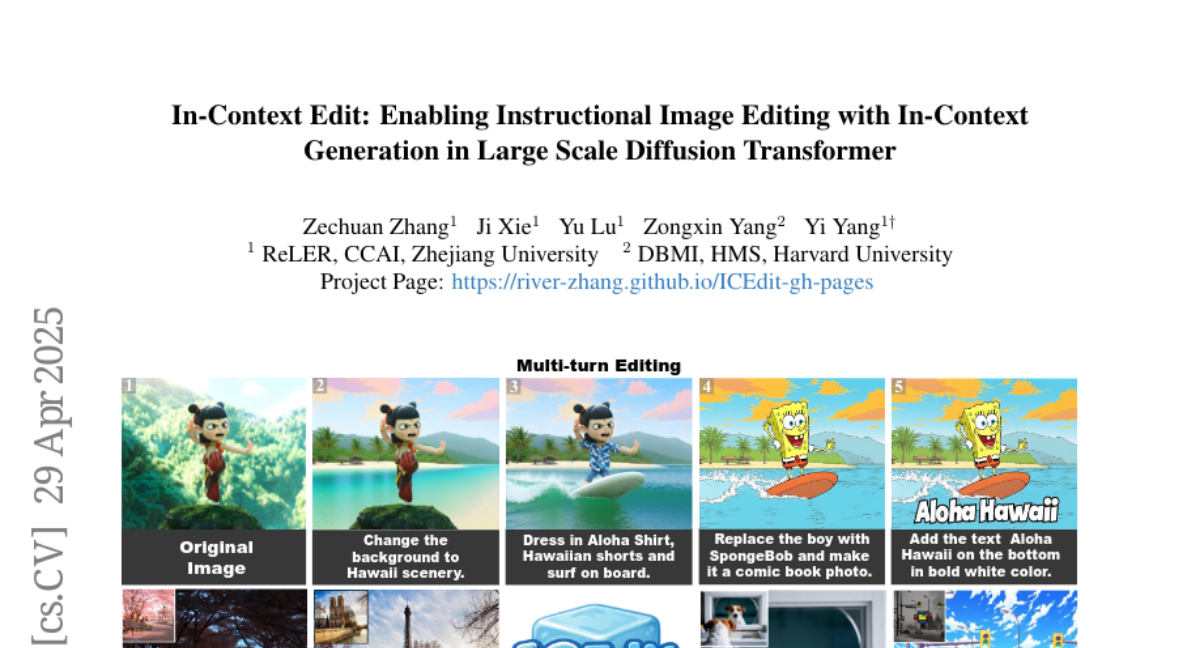

The researchers developed an in-context editing method built on a type of AI model called a Diffusion Transformer. With this approach, the AI can follow instructions and edit images correctly without extensive retraining or large datasets.

Why does it matter?

This matters because it makes image editing with AI faster, more precise, and accessible to more people, even if there isn’t a lot of training data available. It could help artists, designers, and anyone who wants to quickly change images using simple instructions.

Abstract

A novel in-context editing framework using a Diffusion Transformer enhances instruction-following precision and efficiency without extensive retraining or large datasets.