In-Context Editing: Learning Knowledge from Self-Induced Distributions

Siyuan Qi, Bangcheng Yang, Kailin Jiang, Xiaobo Wang, Jiaqi Li, Yifan Zhong, Yaodong Yang, Zilong Zheng

2024-06-18

Summary

This paper introduces Consistent In-Context Editing (ICE), a method for updating the knowledge in a language model without extensive retraining. It aims to make knowledge editing more reliable and effective.

What's the problem?

Current methods for updating language models with new information can be fragile and often lead to problems like overfitting, where the model becomes too tailored to the new data and performs poorly on other tasks. This can also result in unnatural language generation, making the model's responses sound awkward or incorrect. Essentially, it's hard for these models to learn new things smoothly without messing up what they already know.

What's the solution?

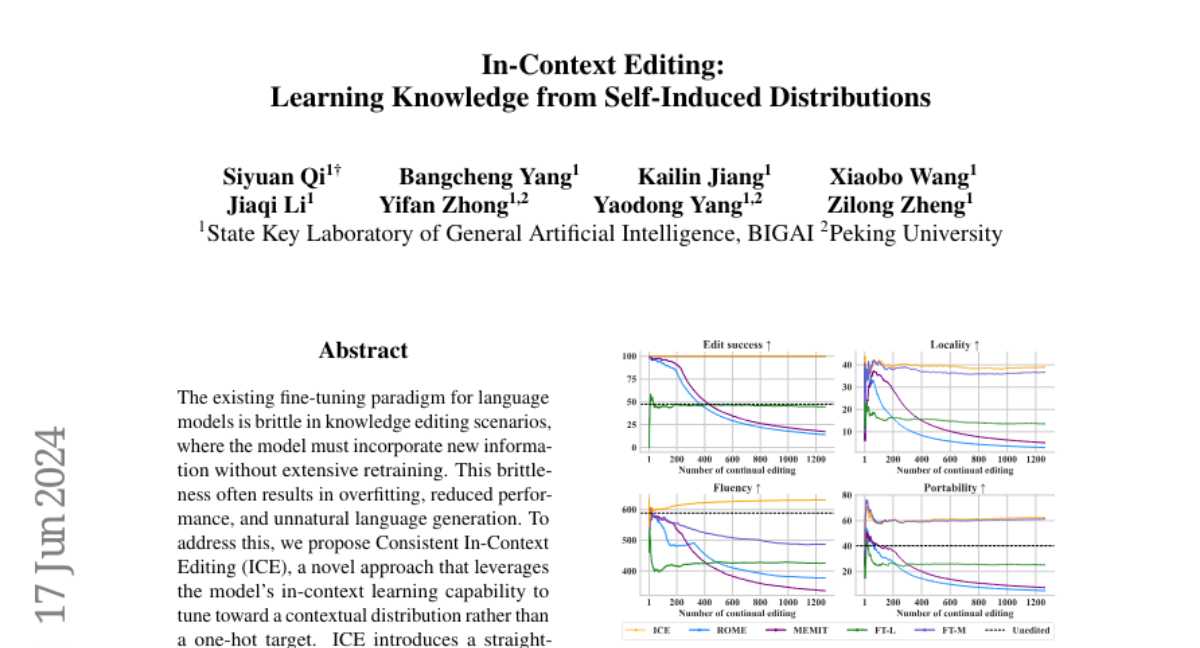

To solve this issue, the authors propose ICE, which relies on the model's own in-context learning ability. Instead of tuning the model to match a single fixed (one-hot) target, ICE tunes it toward a contextual distribution, that is, the output distribution the model produces when the new information is supplied in its context. The approach uses a straightforward optimization framework, consisting of a target and a procedure, that makes gradient-based tuning more robust and effective. The paper analyzes ICE across four key aspects of knowledge editing: accuracy (whether the model returns the edited information correctly), locality (whether unrelated knowledge is left unchanged), generalization (whether the edit carries over to rephrased or related queries), and linguistic quality (how natural the generated language sounds). Experiments on four datasets show that ICE is effective and has potential for continual editing, incorporating updated information while preserving the integrity of the model.

Why does it matter?

This research is important because it enhances how AI language models can adapt to new information over time without losing their effectiveness. By improving the way these models learn and edit their knowledge, ICE can lead to better applications in areas like chatbots, virtual assistants, and any system that relies on accurate and natural language understanding. This advancement could make AI interactions more reliable and user-friendly.

Abstract

The existing fine-tuning paradigm for language models is brittle in knowledge editing scenarios, where the model must incorporate new information without extensive retraining. This brittleness often results in overfitting, reduced performance, and unnatural language generation. To address this, we propose Consistent In-Context Editing (ICE), a novel approach that leverages the model's in-context learning capability to tune toward a contextual distribution rather than a one-hot target. ICE introduces a straightforward optimization framework that includes both a target and a procedure, enhancing the robustness and effectiveness of gradient-based tuning methods. We provide analytical insights into ICE across four critical aspects of knowledge editing: accuracy, locality, generalization, and linguistic quality, showing its advantages. Experimental results across four datasets confirm the effectiveness of ICE and demonstrate its potential for continual editing, ensuring that updated information is incorporated while preserving the integrity of the model.
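
The contrast between a one-hot target and a contextual distribution can be illustrated with a toy sketch. This is not the authors' implementation: the four-token vocabulary, the starting logits, and the hard-coded `context_dist` are all hypothetical, and the logits are optimized directly instead of a real model's weights. In ICE the contextual distribution would come from the model itself when the new information is placed in its prompt; here it is simply assumed.

```python
import math

def softmax(logits):
    """Convert raw logits into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def tune(logits, target, steps=2000, lr=0.5):
    """Plain gradient descent on the logits toward a fixed target distribution.

    For a softmax output, the gradient of the cross-entropy (equivalently,
    of KL(target || probs)) with respect to the logits is probs - target.
    """
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        logits = [z - lr * (p - t) for z, p, t in zip(logits, probs, target)]
    return softmax(logits)

# Hypothetical vocabulary and pre-edit logits (assumptions for illustration).
vocab = ["Paris", "Lyon", "London", "the"]
initial_logits = [2.0, 0.0, 0.5, 1.0]

# One-hot target: all probability mass on the edited answer "Lyon".
one_hot_target = [0.0, 1.0, 0.0, 0.0]

# Assumed stand-in for a contextual distribution. It is hard-coded here;
# in ICE it would be induced by the model when given the new fact in context.
context_dist = [0.05, 0.80, 0.05, 0.10]

tuned_one_hot = tune(initial_logits, one_hot_target)
tuned_contextual = tune(initial_logits, context_dist)

for name, probs in [("one-hot", tuned_one_hot), ("contextual", tuned_contextual)]:
    formatted = ", ".join(f"{tok}={p:.3f}" for tok, p in zip(vocab, probs))
    print(f"{name:>10}: {formatted}  (entropy {entropy(probs):.3f})")
```

In this toy setting the one-hot run keeps pushing probability toward a single token, so the output distribution collapses, while the contextual run settles at the softer target and keeps some mass on alternative continuations. That collapse is one plausible way to picture the overfitting and unnatural generation the abstract attributes to fine-tuning on one-hot targets.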