Incorporating Domain Knowledge into Materials Tokenization

Yerim Oh, Jun-Hyung Park, Junho Kim, SungHo Kim, SangKeun Lee

2025-06-17

Summary

This paper introduces MATTER, a tokenization approach for materials-science text that helps AI models represent material concepts more faithfully. Instead of deciding how to split words by frequency alone, as standard methods do, MATTER uses materials domain knowledge to keep important material terms whole and meaningful during tokenization.

What's the problem?

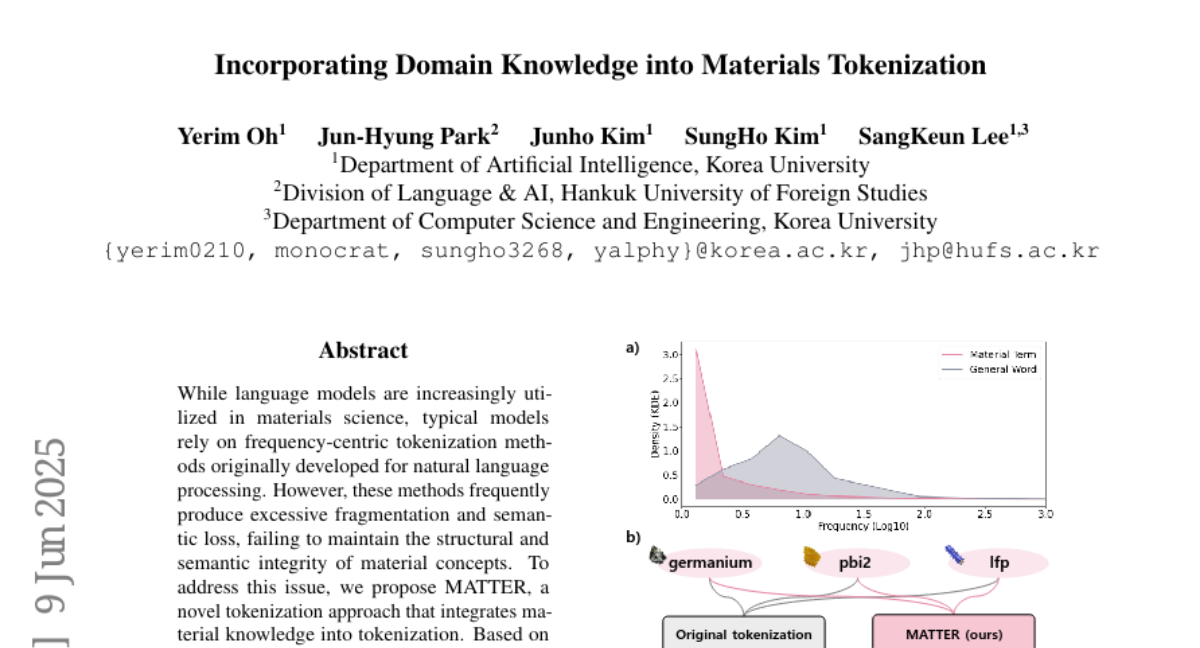

Common tokenization methods used in language models are driven by corpus frequency, so they often split scientific material terms into smaller pieces that no longer reflect the term's meaning or structure. This excessive fragmentation makes it hard for AI models to represent material concepts accurately in scientific text tasks.

What's the solution?

The solution is MATTER, which combines two parts: MatDetector, a material concept detector trained on a materials knowledge base, and a re-ranking method that prioritizes material concepts when tokens are merged. By adjusting how word frequencies are counted so that material-related words carry more weight, MATTER preserves the structural and semantic integrity of material concepts during tokenization, which in turn improves how well AI models understand them.
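To make the frequency-adjustment idea concrete, here is a minimal sketch of a BPE-style merge learner in which each word's count is scaled up by a material-concept score before merge candidates are ranked. This is an illustration under stated assumptions, not the paper's implementation: the `material_scores` dictionary is a hypothetical stand-in for MatDetector output, and both the weighting formula `freq * (1 + lam * score)` and the parameter name `lam` are assumptions chosen for simplicity.

```python
from collections import Counter


def weighted_word_counts(word_freqs, material_scores, lam=1.0):
    """Scale each word's count by its material score.

    ASSUMPTION: the formula freq * (1 + lam * score) is illustrative only.
    material_scores is a hypothetical stand-in for a detector's output,
    mapping a word to a score in [0, 1]; missing words score 0.
    """
    return {
        word: freq * (1.0 + lam * material_scores.get(word, 0.0))
        for word, freq in word_freqs.items()
    }


def count_pairs(segmented, counts):
    """Sum the (weighted) counts of every adjacent symbol pair."""
    pairs = Counter()
    for word, symbols in segmented.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += counts[word]
    return pairs


def merge_pair(symbols, pair):
    """Replace each non-overlapping occurrence of `pair` with one symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_merges(word_freqs, material_scores, num_merges, lam=1.0):
    """Learn merges greedily, ranking pairs by weighted frequency."""
    counts = weighted_word_counts(word_freqs, material_scores, lam)
    segmented = {word: list(word) for word in word_freqs}  # start from characters
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(segmented, counts)
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        segmented = {w: merge_pair(s, best) for w, s in segmented.items()}
    return merges, segmented
```

With `lam=0.0` the sketch reduces to ordinary frequency-ranked merging. On a toy vocabulary `{"ab": 5, "cd": 3}` where only `"cd"` has a material score of 1.0, setting `lam=1.0` raises the weighted count of `"cd"` to 6, so its pair is merged before the more frequent `"ab"`.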

Why does it matter?

This matters because accurately understanding material terms helps AI models perform better in scientific tasks like text generation and classification related to materials science. By incorporating domain knowledge into tokenization, MATTER reduces meaning loss and fragmentation, leading to improved performance and more reliable AI tools for scientific research.

Abstract

MATTER, a novel tokenization approach incorporating material knowledge, improves performance in scientific text processing tasks by maintaining structural and semantic material integrity.