Insight-V: Exploring Long-Chain Visual Reasoning with Multimodal Large Language Models

Yuhao Dong, Zuyan Liu, Hai-Long Sun, Jingkang Yang, Winston Hu, Yongming Rao, Ziwei Liu

2024-11-22

Summary

This paper introduces Insight-V, a new system designed to improve the reasoning abilities of multimodal large language models (MLLMs) by creating long and detailed reasoning data for complex tasks involving both text and images.

What's the problem?

While large language models have become better at reasoning, they still struggle with tasks that require long, complex reasoning, especially when dealing with visual information. There is a lack of high-quality data and effective training methods for these types of reasoning tasks, making it hard for models to perform well in real-world situations.

What's the solution?

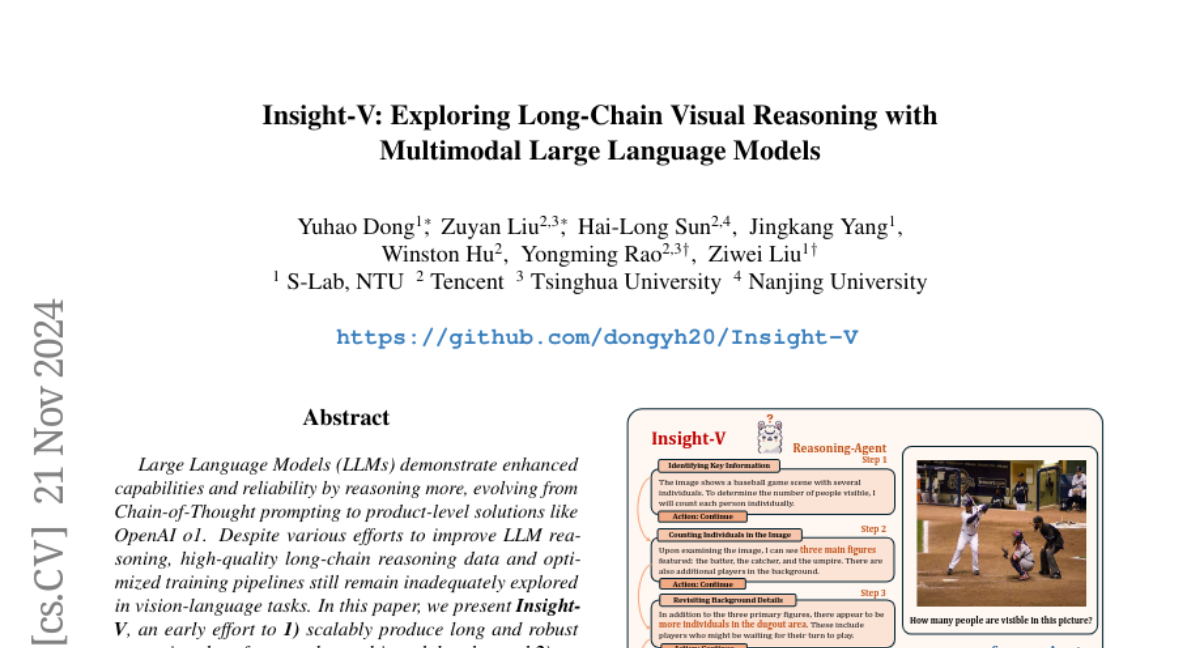

To solve this problem, the authors developed Insight-V, which includes a two-step pipeline for generating long reasoning data without human labor: a progressive strategy first produces long, diverse reasoning paths, and a multi-granularity assessment then checks their quality. They also designed a multi-agent system with two agents: a reasoning agent that performs long-chain reasoning and a summary agent that judges and summarizes the result. Finally, they applied an iterative DPO (Direct Preference Optimization) algorithm to improve the stability and quality of the reasoning agent's output.
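The generate-then-assess idea can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the names `generate_paths` and `assess_paths`, the toy stand-in functions, and the choice of "answer check first, quality score second" as the two granularities are all assumptions made for this example. In a real system the callables would wrap an MLLM.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ReasoningPath:
    steps: List[str]
    answer: str


def generate_paths(question: str,
                   step_fn: Callable[[str, List[str], int], Optional[str]],
                   answer_fn: Callable[[str, List[str]], str],
                   n_paths: int = 4,
                   max_steps: int = 6) -> List[ReasoningPath]:
    """Step 1 (assumed form): grow each path progressively, one step at a time."""
    paths = []
    for seed in range(n_paths):
        steps: List[str] = []
        for _ in range(max_steps):
            step = step_fn(question, steps, seed)
            if step is None:  # the generator signals it is ready to answer
                break
            steps.append(step)
        paths.append(ReasoningPath(steps, answer_fn(question, steps)))
    return paths


def assess_paths(paths: List[ReasoningPath],
                 ground_truth: str,
                 score_fn: Callable[[ReasoningPath], float],
                 min_score: float = 0.5) -> List[ReasoningPath]:
    """Step 2 (assumed form): coarse answer filter, then a finer quality score."""
    correct = [p for p in paths if p.answer.strip() == ground_truth.strip()]
    return [p for p in correct if score_fn(p) >= min_score]


# Toy stand-ins so the sketch runs without a model (hypothetical behavior).
def toy_step(question, steps, seed):
    # Paths with a larger seed reason for longer before stopping.
    return f"step {len(steps) + 1}" if len(steps) < seed + 1 else None


def toy_answer(question, steps):
    # Pretend that very short chains reach the wrong answer.
    return "4" if len(steps) >= 2 else "5"


def toy_score(path):
    # Pretend that longer chains are judged higher quality, capped at 1.0.
    return min(1.0, len(path.steps) / 4)


paths = generate_paths("How many apples are in the image?", toy_step, toy_answer)
kept = assess_paths(paths, "4", toy_score)
print([len(p.steps) for p in kept])
```

With these toy functions, the one-step path is dropped for reaching the wrong answer and the remaining three paths pass the quality threshold. The point of the split is that cheap answer checking removes clearly bad data before the more expensive quality scoring runs.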

Why does it matter?

This research is significant because it enhances the capabilities of AI systems in understanding and reasoning about complex visual information. By providing a structured way to generate and assess reasoning data, Insight-V can lead to better performance in various applications, such as image recognition, automated decision-making, and any area where combining text and visual data is essential.

Abstract

Large Language Models (LLMs) demonstrate enhanced capabilities and reliability by reasoning more, evolving from Chain-of-Thought prompting to product-level solutions like OpenAI o1. Despite various efforts to improve LLM reasoning, high-quality long-chain reasoning data and optimized training pipelines remain inadequately explored in vision-language tasks. In this paper, we present Insight-V, an early effort to 1) scalably produce long and robust reasoning data for complex multi-modal tasks, and 2) build an effective training pipeline to enhance the reasoning capabilities of multi-modal large language models (MLLMs). Specifically, to create long and structured reasoning data without human labor, we design a two-step pipeline with a progressive strategy to generate sufficiently long and diverse reasoning paths and a multi-granularity assessment method to ensure data quality. We observe that directly supervising MLLMs with such long and complex reasoning data does not yield ideal reasoning ability. To tackle this problem, we design a multi-agent system consisting of a reasoning agent dedicated to performing long-chain reasoning and a summary agent trained to judge and summarize reasoning results. We further incorporate an iterative DPO algorithm to enhance the reasoning agent's generation stability and quality. Based on the popular LLaVA-NeXT model and our stronger base MLLM, we demonstrate significant performance gains across challenging multi-modal benchmarks requiring visual reasoning. Benefiting from our multi-agent system, Insight-V can also easily maintain or improve performance on perception-focused multi-modal tasks.
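For readers unfamiliar with DPO, the standard per-pair objective can be written in a few lines. This is a minimal sketch of the generic DPO loss only; the abstract does not say how Insight-V builds its preference pairs or how they are refreshed between iterations, so none of that is shown. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are choices made for this example.

```python
import math


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response
    (chosen or rejected) under the trained policy or the frozen reference.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(margin)), computed as softplus(-margin) for stability.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# When the policy matches the reference, the loss equals log(2).
baseline = dpo_loss(-1.0, -1.0, -1.0, -1.0)
# When the policy favors the chosen response more than the reference does,
# the loss drops below that baseline.
improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
print(baseline, improved)
```

The loss rewards the policy for raising the likelihood of the preferred response relative to the reference model while lowering that of the rejected one; `beta` controls how strongly the policy is held close to the reference.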