Insights from the Inverse: Reconstructing LLM Training Goals Through Inverse RL

Jared Joselowitz, Arjun Jagota, Satyapriya Krishna, Sonali Parbhoo

2024-10-17

Summary

This paper introduces a new method to understand how large language models (LLMs) make decisions by using a technique called inverse reinforcement learning (IRL) to uncover their hidden reward systems.

What's the problem?

Large language models, like those used in chatbots and text generation, are trained using feedback from human preferences, but it's often unclear how they decide what responses to give. Understanding their decision-making process is important for ensuring these models behave safely and effectively. Current methods do not provide a clear view of the reward functions that guide these models.

What's the solution?

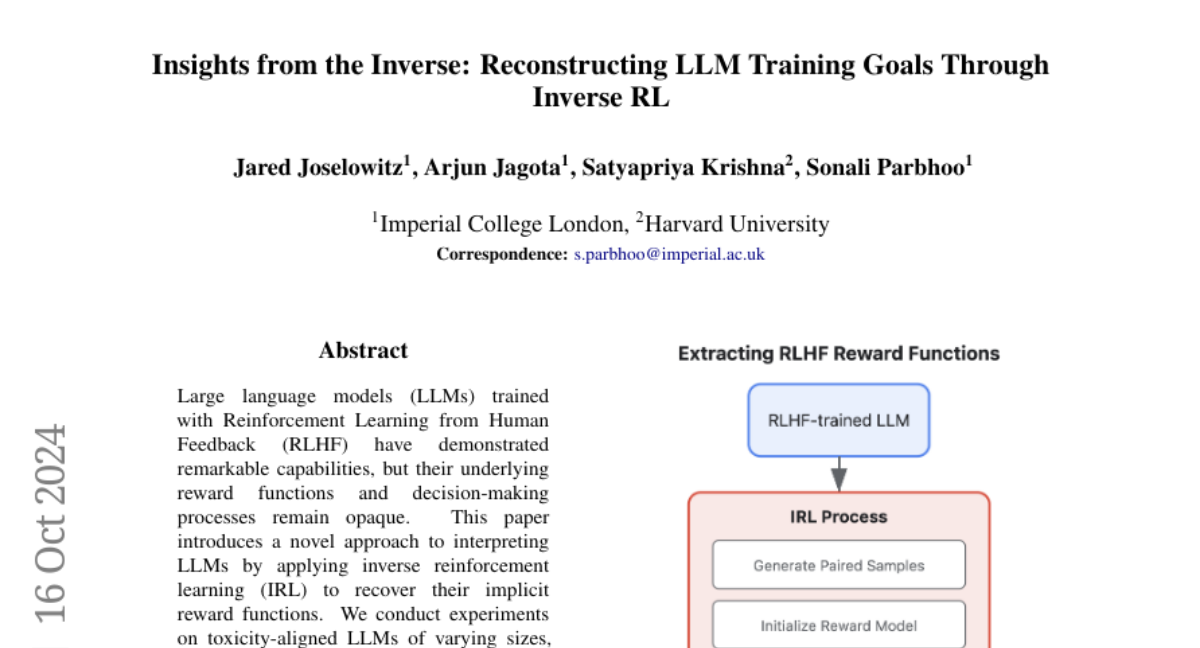

To address this issue, the authors applied inverse reinforcement learning (IRL) to analyze LLMs that were trained with human feedback. They conducted experiments on different LLMs and discovered that they could extract reward models that predict human preferences with up to 80.40% accuracy. This means they could identify what factors the models consider when making decisions. The researchers also found that the size of the model affects how easily its decision-making process can be interpreted. They demonstrated that these IRL-derived reward models could be used to fine-tune new LLMs, improving their performance on tasks related to toxicity (like avoiding harmful content).

Why it matters?

This research is significant because it provides a way to better understand and improve the alignment of LLMs with human values. By revealing how these models make decisions, developers can create safer and more effective AI systems. This work is crucial as AI becomes more integrated into everyday life, ensuring that it behaves in ways that are beneficial and aligned with societal norms.

Abstract

Large language models (LLMs) trained with Reinforcement Learning from Human Feedback (RLHF) have demonstrated remarkable capabilities, but their underlying reward functions and decision-making processes remain opaque. This paper introduces a novel approach to interpreting LLMs by applying inverse reinforcement learning (IRL) to recover their implicit reward functions. We conduct experiments on toxicity-aligned LLMs of varying sizes, extracting reward models that achieve up to 80.40% accuracy in predicting human preferences. Our analysis reveals key insights into the non-identifiability of reward functions, the relationship between model size and interpretability, and potential pitfalls in the RLHF process. We demonstrate that IRL-derived reward models can be used to fine-tune new LLMs, resulting in comparable or improved performance on toxicity benchmarks. This work provides a new lens for understanding and improving LLM alignment, with implications for the responsible development and deployment of these powerful systems.