Instant Facial Gaussians Translator for Relightable and Interactable Facial Rendering

Dafei Qin, Hongyang Lin, Qixuan Zhang, Kaichun Qiao, Longwen Zhang, Zijun Zhao, Jun Saito, Jingyi Yu, Lan Xu, Taku Komura

2024-09-12

Summary

This paper presents GauFace, a new Gaussian Splatting representation for efficiently rendering and animating realistic, physically-based facial assets.

What's the problem?

Creating realistic animations and renderings of human faces can be difficult and resource-intensive. Traditional methods may not provide the real-time performance needed for interactive applications, especially on mobile devices.

What's the solution?

The authors developed GauFace, which uses strong geometric priors and constrained optimization to produce a neat, structured Gaussian representation of a face that can be animated and rendered efficiently, reaching 30 frames per second at 1440p on a Snapdragon 8 Gen 2 mobile platform. They also introduced TransGS, a diffusion transformer that instantly translates physically-based facial assets, together with their lighting conditions, into GauFace representations. To handle the very large number of Gaussians, TransGS processes them in patches. It also uses a novel pixel-aligned sampling scheme with UV positional encoding to keep both throughput and rendering quality high.
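To make the real-time target concrete, the short calculation below converts the reported 30 frames per second at 1440p into a per-frame time budget and a pixel throughput. It is a back-of-the-envelope sketch, assuming "1440p" means a 2560×1440 (16:9) frame. A phone display may use a different width at the same height. The helper names are hypothetical.

```python
def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width, height, fps):
    """Pixels that must be shaded per second to fill every frame at this rate."""
    return width * height * fps


# Assumption: 1440p taken as 2560 x 1440; the paper's exact mobile resolution may differ.
budget = frame_budget_ms(30)                     # about 33.3 ms per frame
throughput = pixels_per_second(2560, 1440, 30)   # 110,592,000 pixels per second
print(f"{budget:.1f} ms per frame, {throughput:,} pixels/s")
```

Everything the renderer does for a frame, including animating the Gaussians, sorting them and splatting them, has to fit inside roughly 33 ms on the phone's GPU.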

Why it matters?

This research is important because it allows for high-quality facial animations that can be used in various applications, such as video games, virtual reality, and film production. By making high-fidelity, relightable facial rendering fast enough for PCs, phones, and VR headsets, GauFace can enhance user experiences in interactive media.

Abstract

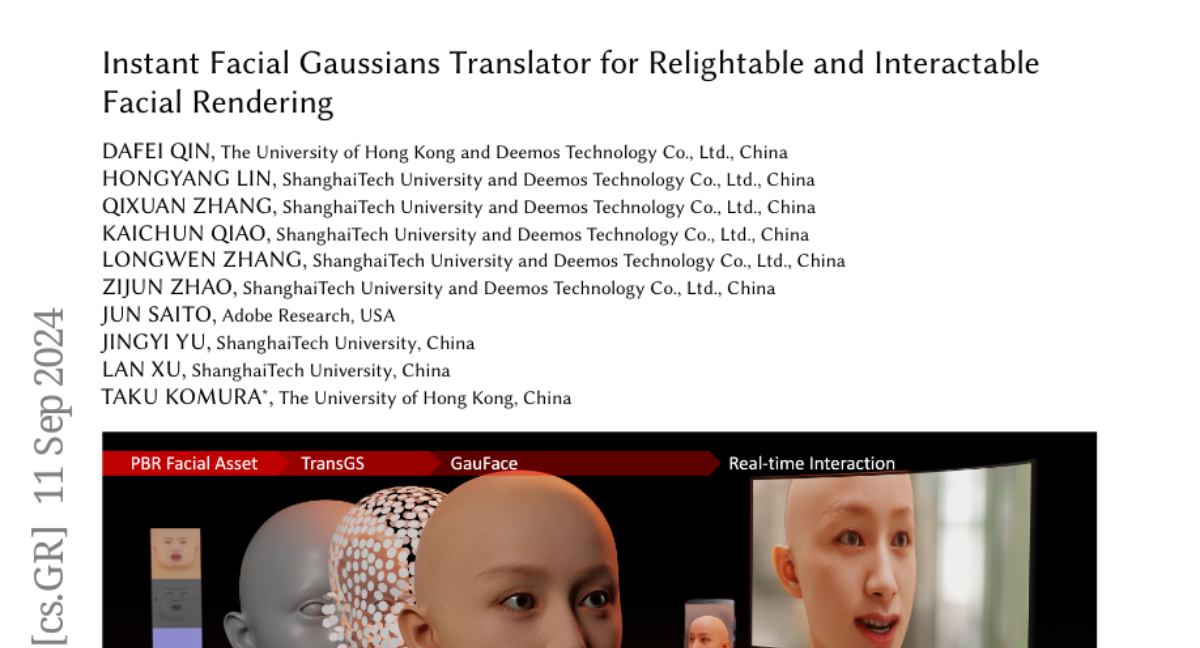

We propose GauFace, a novel Gaussian Splatting representation tailored for efficient animation and rendering of physically-based facial assets. Leveraging strong geometric priors and constrained optimization, GauFace ensures a neat and structured Gaussian representation, delivering high fidelity and real-time facial interaction at 30fps@1440p on a Snapdragon 8 Gen 2 mobile platform. We then introduce TransGS, a diffusion transformer that instantly translates physically-based facial assets into the corresponding GauFace representations. Specifically, we adopt a patch-based pipeline to handle the vast number of Gaussians effectively. We also introduce a novel pixel-aligned sampling scheme with UV positional encoding to ensure the throughput and rendering quality of GauFace assets generated by our TransGS. Once trained, TransGS can instantly translate facial assets with lighting conditions into the GauFace representation. With its rich conditioning modalities, it also enables editing and animation capabilities reminiscent of traditional CG pipelines. We conduct extensive evaluations and user studies comparing our approach with traditional offline and online renderers, as well as recent neural rendering methods, and these demonstrate the superior performance of our approach for facial asset rendering. We also showcase diverse immersive applications of facial assets using our TransGS approach and GauFace representation across various platforms, such as PCs, phones, and even VR headsets.
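The abstract names two ingredients of TransGS, a patch-based pipeline and UV positional encoding, but gives no implementation details. The sketch below shows one generic way these ideas are commonly realized. It is not the authors' method. It assumes the following:

- Gaussians are laid out one per texel on a square UV map, as a stand-in for "pixel-aligned".
- UV coordinates are encoded with NeRF-style sinusoidal frequencies.
- The resulting feature map is cut into non-overlapping square patches that a transformer could treat as tokens.

All function names and parameters (`uv_grid`, `uv_positional_encoding`, `patchify`, `num_freqs`, `patch_size`) are hypothetical.

```python
import numpy as np


def uv_grid(resolution):
    """UV coordinates in [0, 1] at the centre of every texel, shape (R, R, 2)."""
    centres = (np.arange(resolution) + 0.5) / resolution
    u, v = np.meshgrid(centres, centres, indexing="xy")
    return np.stack([u, v], axis=-1)


def uv_positional_encoding(uv, num_freqs=4):
    """Sinusoidal encoding [sin(2^k*pi*uv), cos(2^k*pi*uv)] for k = 0..num_freqs-1.

    Assumption: a standard frequency encoding; the paper's exact scheme is not given.
    Output shape is (..., num_freqs * 4) for 2-D UV input.
    """
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi            # (F,)
    scaled = uv[..., None, :] * freqs[:, None]                # (..., F, 2)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1)  # (..., F, 4)
    return enc.reshape(*uv.shape[:-1], num_freqs * 4)


def patchify(features, patch_size):
    """Split an (R, R, C) texel map into (N, patch_size**2, C) non-overlapping patches.

    Patches are ordered row by row; texels inside a patch are also row-major.
    """
    r, _, c = features.shape
    if r % patch_size != 0:
        raise ValueError("resolution must be divisible by patch_size")
    n = r // patch_size
    patches = features.reshape(n, patch_size, n, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)               # (n, n, p, p, C)
    return patches.reshape(n * n, patch_size * patch_size, c)


# Example: a 256 x 256 UV map (one hypothetical Gaussian per texel).
uv = uv_grid(256)
enc = uv_positional_encoding(uv, num_freqs=4)   # (256, 256, 16)
tokens = patchify(enc, patch_size=32)           # (64, 1024, 16)
print(enc.shape, tokens.shape)
```

Patching keeps each transformer step working on a bounded number of texels rather than on the whole map at once. The UV encoding tells the model where on the face each patch lies. Real per-texel attributes, such as albedo, normals, or lighting conditions, would be concatenated onto `enc` before patching.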