Instruction Pre-Training: Language Models are Supervised Multitask Learners

Daixuan Cheng, Yuxian Gu, Shaohan Huang, Junyu Bi, Minlie Huang, Furu Wei

2024-06-21

Summary

This paper introduces a new method called Instruction Pre-Training, which improves how language models learn by using instruction-response pairs to enhance their training process.

What's the problem?

Language models (LMs) have become very effective through a method called unsupervised multitask pre-training, but there are still limitations in how well they can generalize to different tasks. This means that while they can perform many tasks, they might not always do so accurately or effectively when faced with new challenges. There is a need for better training methods that help these models learn more effectively from the data.

What's the solution?

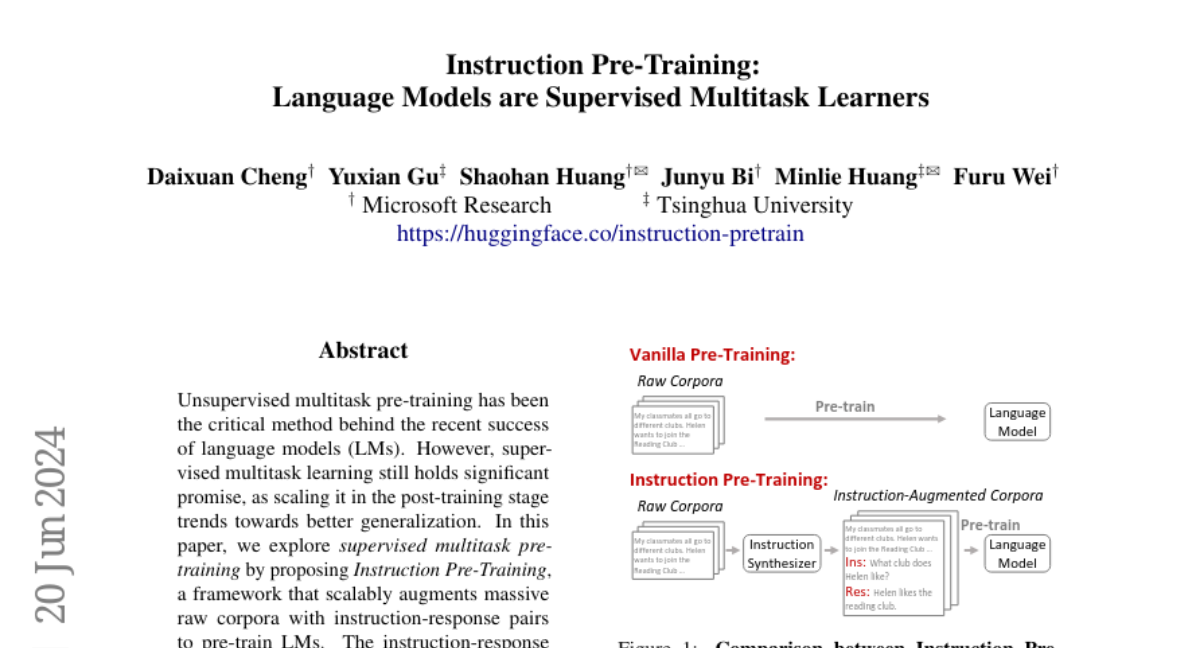

The researchers propose Instruction Pre-Training, which combines traditional training data with instruction-response pairs generated by an instruction synthesizer. This synthesizer creates relevant instructions based on the raw text data, allowing the model to learn from a wider variety of tasks. In their experiments, they generated 200 million instruction-response pairs covering over 40 different task categories. They found that models trained with this method performed better than those trained using traditional methods and even allowed smaller models to compete with larger ones in terms of performance.

Why it matters?

This research is significant because it shows that using instruction-response pairs can greatly enhance the learning capabilities of language models. By improving how these models are trained, we can make them more effective for various applications, such as chatbots, translation services, and other AI tools that rely on understanding and generating human language. This could lead to advancements in technology that improve user experiences across different fields.

Abstract

Unsupervised multitask pre-training has been the critical method behind the recent success of language models (LMs). However, supervised multitask learning still holds significant promise, as scaling it in the post-training stage trends towards better generalization. In this paper, we explore supervised multitask pre-training by proposing Instruction Pre-Training, a framework that scalably augments massive raw corpora with instruction-response pairs to pre-train LMs. The instruction-response pairs are generated by an efficient instruction synthesizer built on open-source models. In our experiments, we synthesize 200M instruction-response pairs covering 40+ task categories to verify the effectiveness of Instruction Pre-Training. In pre-training from scratch, Instruction Pre-Training not only consistently enhances pre-trained base models but also benefits more from further instruction tuning. In continual pre-training, Instruction Pre-Training enables Llama3-8B to be comparable to or even outperform Llama3-70B. Our model, code, and data are available at https://github.com/microsoft/LMOps.