InstructPart: Task-Oriented Part Segmentation with Instruction Reasoning

Zifu Wan, Yaqi Xie, Ce Zhang, Zhiqiu Lin, Zihan Wang, Simon Stepputtis, Deva Ramanan, Katia Sycara

2025-05-27

Summary

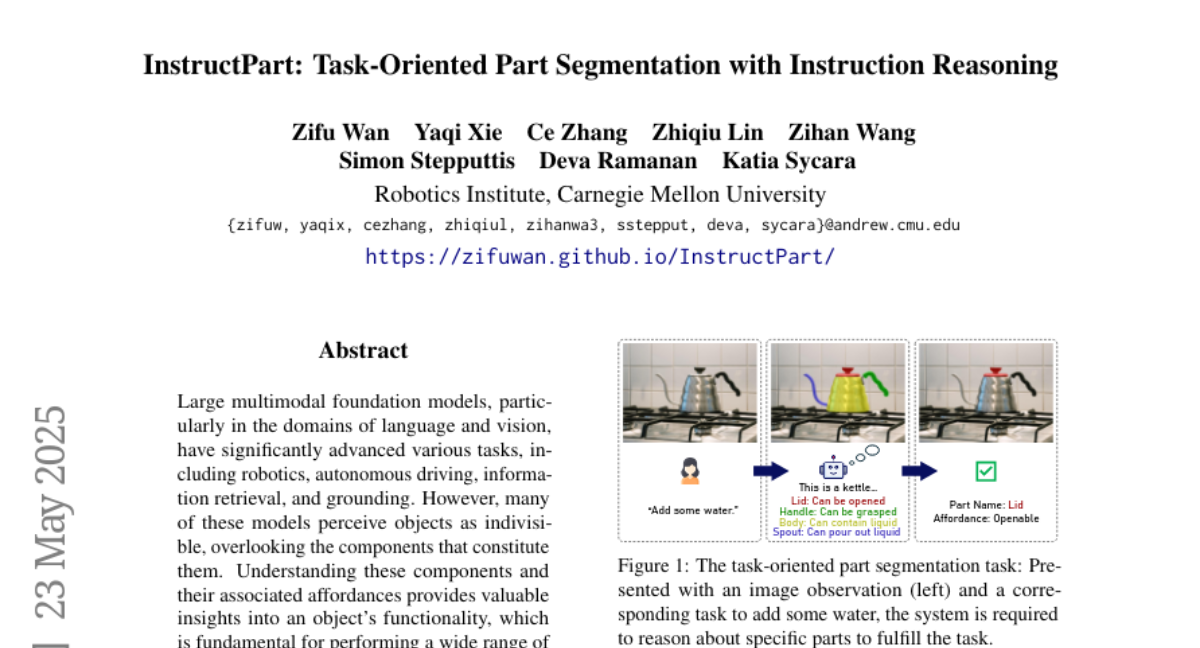

This paper introduces InstructPart, a new way to test and improve how well vision-language models (AI models that understand both images and language) follow instructions to identify the parts of objects in real-world situations.

What's the problem?

Current vision-language models often struggle to break objects down into their parts when given specific instructions, especially in complicated or realistic settings. This limits their usefulness in tasks that depend on understanding detailed instructions about images.

What's the solution?

The authors created a new benchmark and dataset called InstructPart, which gives these models more realistic and task-focused challenges. This helps researchers see exactly where the models struggle and how they can be improved to follow instructions better and perform more accurately in real-world tasks.

Why does it matter?

This work pushes AI to get better at understanding and following instructions about images, which can help in areas such as robotics, manufacturing, and any setting where machines need to work with people and objects in the real world.

Abstract

A new benchmark, InstructPart, and a task-oriented part segmentation dataset are introduced to evaluate and improve the performance of Vision-Language Models in real-world contexts.