Internal Consistency and Self-Feedback in Large Language Models: A Survey

Xun Liang, Shichao Song, Zifan Zheng, Hanyu Wang, Qingchen Yu, Xunkai Li, Rong-Hua Li, Feiyu Xiong, Zhiyu Li

2024-07-22

Summary

This paper surveys how large language models (LLMs) can improve their responses through internal consistency and self-feedback. It discusses the problems these models face, such as making mistakes or generating incorrect information, and how they can learn to correct themselves.

What's the problem?

LLMs are designed to generate human-like text, but they often struggle with reasoning and can produce incorrect or nonsensical answers, known as 'hallucinations.' These issues can undermine trust in AI systems. While there are methods like Self-Consistency and Self-Improve that aim to help LLMs evaluate and improve their own responses, there hasn't been a unified way to understand these approaches or their motivations.

What's the solution?

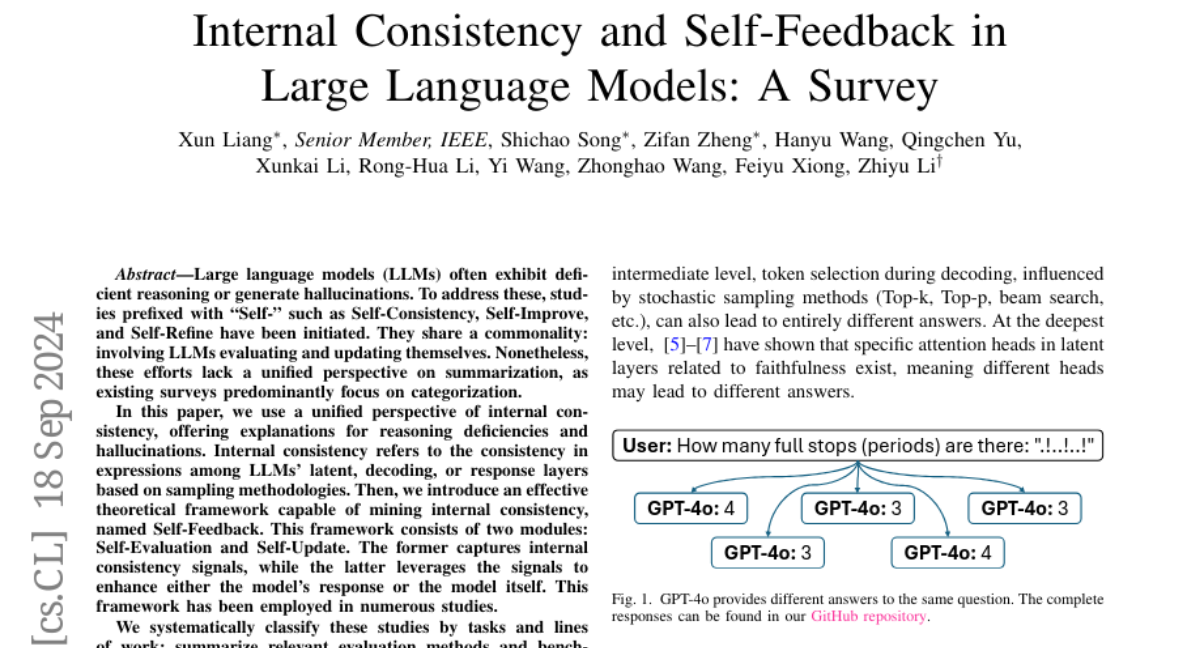

The authors introduce a theoretical framework called Internal Consistency, which helps explain why LLMs sometimes fail in reasoning and produce hallucinations. They also propose a new method called Self-Feedback, which includes two main parts: Self-Evaluation, where the model checks its own answers for consistency, and Self-Update, where it makes corrections based on that evaluation. By analyzing existing studies and methods, the authors provide a structured overview of how these frameworks can improve LLM performance.

Why it matters?

This research is important because it addresses critical challenges in making LLMs more reliable and accurate. By focusing on internal consistency and self-feedback, the study aims to enhance the ability of AI systems to provide correct information and maintain coherent reasoning. This is essential for applications where accurate communication is crucial, such as in healthcare, education, and customer service.

Abstract

Large language models (LLMs) are expected to respond accurately but often exhibit deficient reasoning or generate hallucinatory content. To address these, studies prefixed with ``Self-'' such as Self-Consistency, Self-Improve, and Self-Refine have been initiated. They share a commonality: involving LLMs evaluating and updating itself to mitigate the issues. Nonetheless, these efforts lack a unified perspective on summarization, as existing surveys predominantly focus on categorization without examining the motivations behind these works. In this paper, we summarize a theoretical framework, termed Internal Consistency, which offers unified explanations for phenomena such as the lack of reasoning and the presence of hallucinations. Internal Consistency assesses the coherence among LLMs' latent layer, decoding layer, and response layer based on sampling methodologies. Expanding upon the Internal Consistency framework, we introduce a streamlined yet effective theoretical framework capable of mining Internal Consistency, named Self-Feedback. The Self-Feedback framework consists of two modules: Self-Evaluation and Self-Update. This framework has been employed in numerous studies. We systematically classify these studies by tasks and lines of work; summarize relevant evaluation methods and benchmarks; and delve into the concern, ``Does Self-Feedback Really Work?'' We propose several critical viewpoints, including the ``Hourglass Evolution of Internal Consistency'', ``Consistency Is (Almost) Correctness'' hypothesis, and ``The Paradox of Latent and Explicit Reasoning''. Furthermore, we outline promising directions for future research. We have open-sourced the experimental code, reference list, and statistical data, available at https://github.com/IAAR-Shanghai/ICSFSurvey.