Interpreting the Weight Space of Customized Diffusion Models

Amil Dravid, Yossi Gandelsman, Kuan-Chieh Wang, Rameen Abdal, Gordon Wetzstein, Alexei A. Efros, Kfir Aberman

2024-06-14

Summary

This paper studies the 'weight space' of customized diffusion models: image-generating models that have been fine-tuned to depict a particular subject. The researchers created a dataset of over 60,000 such models, each fine-tuned to insert a different person's visual identity. They model the weights of these models as a subspace, which they call 'weights2weights', and use it both to interpret the models and to manipulate the identities they encode.
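As a rough illustration of what a "weight space" means here, the sketch below treats each fine-tuned model as one flattened weight vector, stacks those vectors into a matrix, and fits a low-dimensional linear subspace to them with principal component analysis (PCA), computed through NumPy's SVD. PCA is an assumption chosen for illustration, since the summary only says the weights are modeled as a subspace. The data is random toy data, and the names `fit_weight_subspace`, `project` and `reconstruct` are hypothetical.

```python
import numpy as np

def fit_weight_subspace(weights: np.ndarray, k: int):
    """Fit a k-dimensional linear subspace to flattened model weights.

    weights: (n_models, n_params) array, one row per fine-tuned model.
    Returns the mean vector (n_params,) and an orthonormal basis (k, n_params).
    """
    mean = weights.mean(axis=0)
    centered = weights - mean
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:k]

def project(w: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Coordinates of one flattened weight vector inside the subspace."""
    return basis @ (w - mean)

def reconstruct(coeffs: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Map subspace coordinates back to a full flattened weight vector."""
    return mean + coeffs @ basis

rng = np.random.default_rng(0)
# Toy stand-in for the dataset: 500 "models" with 2,000 parameters each,
# generated to lie near a 10-dimensional subspace plus small noise.
true_basis = rng.standard_normal((10, 2000))
weights = rng.standard_normal((500, 10)) @ true_basis + 0.01 * rng.standard_normal((500, 2000))

mean, basis = fit_weight_subspace(weights, k=10)
w = weights[0]
w_hat = reconstruct(project(w, mean, basis), mean, basis)
rel_err = np.linalg.norm(w - w_hat) / np.linalg.norm(w)
print(f"relative reconstruction error: {rel_err:.4f}")
```

In this toy setting each model is summarized by 10 coordinates instead of 2,000 parameters, and the small reconstruction error reflects how close the toy weights are to a linear subspace.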

What's the problem?

It is hard to understand how diffusion models represent different identities, because their weights are high-dimensional and not directly interpretable. As a result, it is difficult to predict or control how a change to the weights will affect the generated images, for example when trying to produce a specific feature or attribute.

What's the solution?

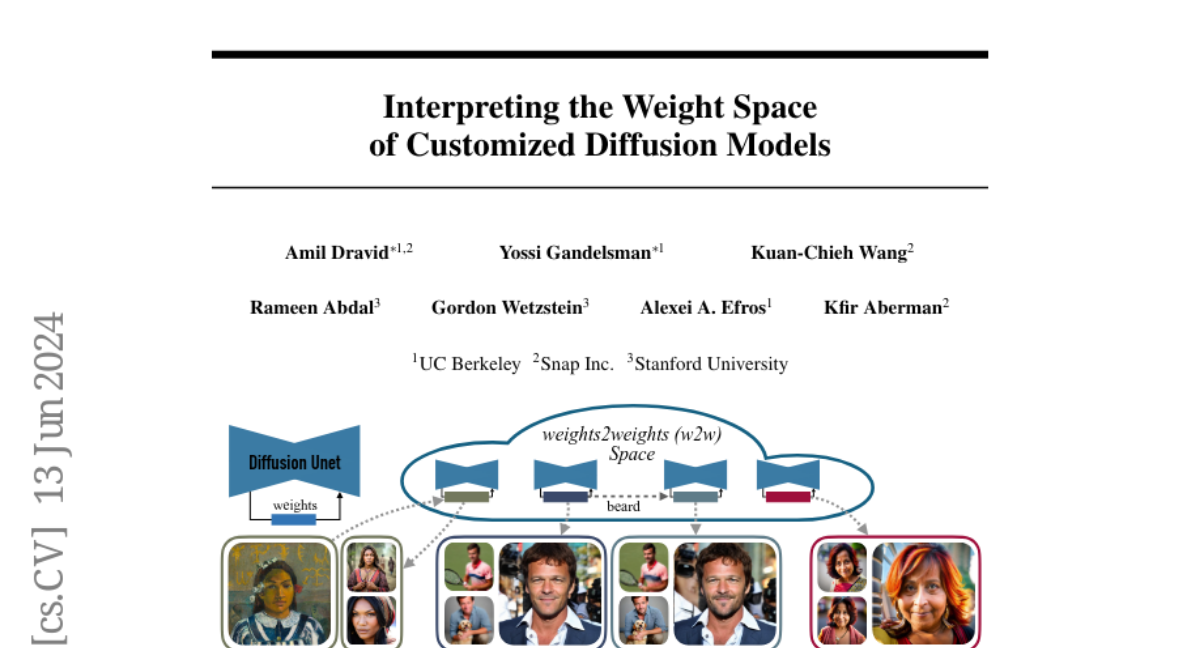

To address this, the authors developed weights2weights, which models the weight space of these customized models as a subspace in which each point corresponds to an identity. They demonstrate three applications: sampling new identities from the space, editing existing identities along linear directions (for example, adding a beard), and inverting a single image into the space to reconstruct an identity, even when the input is out of distribution (for example, a painting). Together these applications show that the weights can be manipulated directly and interpreted as a space of identities.
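Sampling and editing can be illustrated on a toy subspace. The sketch below assumes an orthonormal basis and a mean weight vector are already available (generated randomly here). It samples a new identity by drawing subspace coefficients from a per-dimension Gaussian fit to the training models' coefficients, and it edits an identity by moving it along a unit direction in coefficient space. That direction is the difference between the mean coefficients of models with and without a toy attribute, a simple stand-in for a trained linear classifier. The Gaussian sampler, the attribute labels, and all function names are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_models, k, n_params = 1000, 8, 500

# Hypothetical setup: an orthonormal basis (k, n_params) and a mean weight vector,
# standing in for a subspace already fit to many fine-tuned models.
basis = np.linalg.qr(rng.standard_normal((n_params, k)))[0].T
mean = rng.standard_normal(n_params)

# Coordinates of the training models, plus a toy binary attribute (e.g. "beard")
# that, by construction here, is correlated with the first coordinate.
coeffs = rng.standard_normal((n_models, k))
has_attr = coeffs[:, 0] + 0.3 * rng.standard_normal(n_models) > 0

def sample_identity(coeffs, basis, mean, rng):
    """Draw new coefficients from a per-dimension Gaussian fit to the data."""
    mu, sigma = coeffs.mean(axis=0), coeffs.std(axis=0)
    return mean + rng.normal(mu, sigma) @ basis

def edit_direction(coeffs, labels):
    """Unit direction in coefficient space separating the two attribute groups.

    Difference of class means: a simple stand-in for a trained linear classifier.
    """
    d = coeffs[labels].mean(axis=0) - coeffs[~labels].mean(axis=0)
    return d / np.linalg.norm(d)

def apply_edit(w, direction, basis, strength):
    """Move a flattened weight vector along a direction inside the subspace."""
    return w + strength * (direction @ basis)

new_w = sample_identity(coeffs, basis, mean, rng)
direction = edit_direction(coeffs, has_attr)
edited = apply_edit(new_w, direction, basis, strength=2.0)

# Change in subspace coordinates caused by the edit.
shift = basis @ (edited - new_w)
print(np.round(shift, 3))
print(np.round(direction, 3))
```

Because the basis rows are orthonormal, adding `strength * (direction @ basis)` shifts the model's subspace coordinates by exactly `strength * direction` and leaves the component outside the subspace unchanged, which is what makes a linear edit predictable in this toy setting.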

Why does it matter?

This research shows that the weights of fine-tuned diffusion models behave like an interpretable space of identities, which makes those models easier to understand and control. Because identities can be sampled, edited, and reconstructed directly in weight space, this approach could support advances in personalized image generation, such as creating realistic avatars or building creative tools for art and design.

Abstract

We investigate the space of weights spanned by a large collection of customized diffusion models. We populate this space by creating a dataset of over 60,000 models, each of which is a base model fine-tuned to insert a different person's visual identity. We model the underlying manifold of these weights as a subspace, which we term weights2weights. We demonstrate three immediate applications of this space -- sampling, editing, and inversion. First, as each point in the space corresponds to an identity, sampling a set of weights from it results in a model encoding a novel identity. Next, we find linear directions in this space corresponding to semantic edits of the identity (e.g., adding a beard). These edits persist in appearance across generated samples. Finally, we show that inverting a single image into this space reconstructs a realistic identity, even if the input image is out of distribution (e.g., a painting). Our results indicate that the weight space of fine-tuned diffusion models behaves as an interpretable latent space of identities.
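Inversion can be sketched as an optimization over subspace coefficients only. In the toy below, a fixed random linear map `A` is a hypothetical stand-in for generating images with a customized model; a real implementation would instead optimize a loss computed through the diffusion model, which this sketch does not attempt. Plain gradient descent with a small L2 penalty (`reg`, an assumed hyperparameter) recovers the coefficients of the model that produced the target observation. The function name `invert` and all sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
k, n_params, n_obs = 6, 300, 120

# Hypothetical subspace (orthonormal rows) and mean, as if fit to many models.
basis = np.linalg.qr(rng.standard_normal((n_params, k)))[0].T
mean = rng.standard_normal(n_params)

# Toy differentiable "renderer": a fixed linear map from weights to observations.
# It only stands in for generating images; it is not a diffusion objective.
A = rng.standard_normal((n_obs, n_params)) / np.sqrt(n_params)

def invert(y, A, basis, mean, steps=2000, lr=0.1, reg=1e-3):
    """Gradient descent on coefficients c so that A @ (mean + c @ basis) ~ y.

    Minimizes 0.5 * ||A @ (mean + c @ basis) - y||^2 + 0.5 * reg * ||c||^2.
    """
    M = A @ basis.T          # (n_obs, k): effect of each coefficient on the output
    r0 = A @ mean            # output of the mean model
    c = np.zeros(basis.shape[0])
    for _ in range(steps):
        residual = r0 + M @ c - y
        grad = M.T @ residual + reg * c
        c -= lr * grad
    return mean + c @ basis, c

# Target observation produced by a model that lies in the subspace, plus noise.
c_true = rng.standard_normal(k)
y = A @ (mean + c_true @ basis) + 0.01 * rng.standard_normal(n_obs)

w_inv, c_est = invert(y, A, basis, mean)
print(np.round(c_true, 2))
print(np.round(c_est, 2))
```

Since only `c` is optimized, the returned weights `mean + c @ basis` always lie in the subspace, whatever the target `y` is. This is one intuition for why an out-of-distribution input can still map to a valid point in the space; the toy does not show that the result looks realistic.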