KAN or MLP: A Fairer Comparison

Runpeng Yu, Weihao Yu, Xinchao Wang

2024-07-24

Summary

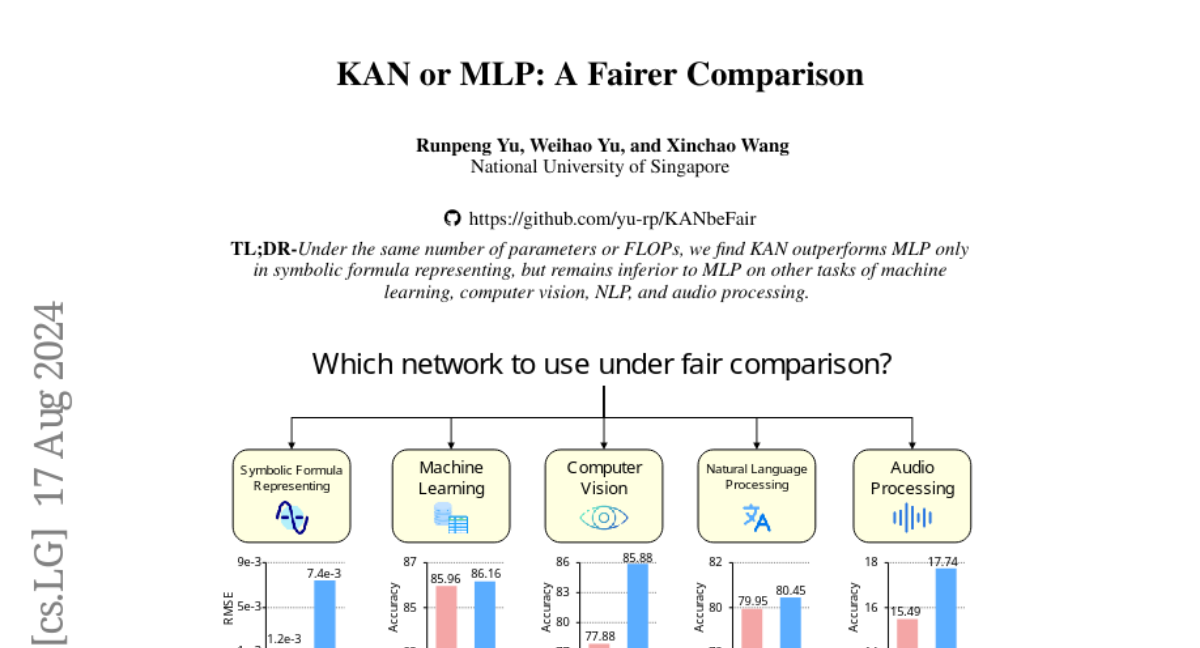

This paper compares two types of neural network models, KAN (Kolmogorov-Arnold Network) and MLP (Multi-Layer Perceptron), across machine learning, computer vision, audio processing, natural language processing, and symbolic formula representation tasks. It aims to assess their performance fairly by giving both models the same number of parameters or the same amount of computation (FLOPs).

What's the problem?

KAN has been proposed as an alternative to MLP, but there hasn't been a comprehensive comparison of the two that controls for the number of parameters or the amount of computation (FLOPs). Without those controls, a performance gap may simply reflect a difference in model size, which makes it hard to determine which model is better for a specific application. KAN has been reported to perform well in some areas, but its overall effectiveness compared to MLP remains unclear.

What's the solution?

The authors ran experiments comparing KAN and MLP while controlling for the number of parameters and FLOPs. They found that MLP generally outperforms KAN on every task except symbolic formula representation, where KAN has an advantage. Ablation studies trace that advantage mainly to KAN's B-spline activation function: when the B-spline is applied to MLP, its performance on symbolic formula representation improves significantly, matching or surpassing that of KAN. On the other tasks, where MLP already outperforms KAN, the B-spline does not substantially improve MLP. Finally, in a standard class-incremental continual learning setting, KAN forgets previously learned classes more severely than MLP, which differs from the findings reported in the KAN paper.
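To make "controlling for the number of parameters" concrete, here is a minimal sketch of how an MLP could be sized to fit a KAN's parameter budget. The per-edge KAN count of `grid + order + 3` learnable values plus one bias per output is an assumption made for illustration, and all function names are hypothetical; the authors' own counting code lives in the project repository and may differ.

```python
def mlp_layer_params(d_in: int, d_out: int) -> int:
    """Weights plus biases of one fully connected layer."""
    return d_in * d_out + d_out


def kan_layer_params(d_in: int, d_out: int, grid: int, order: int) -> int:
    """ASSUMED count: (grid + order + 3) learnable values per edge, plus one bias per output."""
    return d_in * d_out * (grid + order + 3) + d_out


def mlp_params(widths: list[int]) -> int:
    """Total parameters of an MLP with the given layer widths."""
    return sum(mlp_layer_params(a, b) for a, b in zip(widths, widths[1:]))


def kan_params(widths: list[int], grid: int, order: int) -> int:
    """Total parameters of a KAN with the given layer widths (under the assumption above)."""
    return sum(kan_layer_params(a, b, grid, order) for a, b in zip(widths, widths[1:]))


def matched_mlp_hidden_width(d_in: int, d_out: int, budget: int) -> int:
    """Largest hidden width h such that the MLP [d_in, h, d_out] stays within the budget.

    The MLP has h * (d_in + d_out + 1) + d_out parameters, so we solve for h.
    """
    return max((budget - d_out) // (d_in + d_out + 1), 0)


# Hypothetical example: a small KAN on 784-dimensional inputs with 10 outputs.
budget = kan_params([784, 32, 10], grid=5, order=3)
hidden = matched_mlp_hidden_width(784, 10, budget)
print(budget, hidden, mlp_params([784, hidden, 10]))
```

Under this assumed count, a KAN with 32 hidden units corresponds to an MLP with several hundred hidden units, which is why comparing the two at equal width would not be a like-for-like comparison. Matching FLOPs would follow the same pattern with a per-layer operation count in place of the parameter count.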

Why does it matter?

This research clarifies the strengths and weaknesses of KAN and MLP, helping researchers and developers choose the right model for their needs. Because the comparison is made at matched parameter counts and FLOPs, its findings give a more reliable baseline for future work on KAN and other MLP alternatives.

Abstract

This paper does not introduce a novel method. Instead, it offers a fairer and more comprehensive comparison of KAN and MLP models across various tasks, including machine learning, computer vision, audio processing, natural language processing, and symbolic formula representation. Specifically, we control the number of parameters and FLOPs to compare the performance of KAN and MLP. Our main observation is that, except for symbolic formula representation tasks, MLP generally outperforms KAN. We also conduct ablation studies on KAN and find that its advantage in symbolic formula representation mainly stems from its B-spline activation function. When B-spline is applied to MLP, performance in symbolic formula representation significantly improves, surpassing or matching that of KAN. However, in other tasks where MLP already excels over KAN, B-spline does not substantially enhance MLP's performance. Furthermore, we find that KAN's forgetting issue is more severe than that of MLP in a standard class-incremental continual learning setting, which differs from the findings reported in the KAN paper. We hope these results provide insights for future research on KAN and other MLP alternatives. Project link: https://github.com/yu-rp/KANbeFair
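As an illustration of the B-spline activation function the abstract refers to, the following is a minimal pure-Python sketch of a learnable activation built as a weighted sum of B-spline basis functions on a uniform grid, using the Cox-de Boor recursion. It is not the authors' implementation: the function names, the grid range, and the choice of a uniform extended knot vector are assumptions made for illustration.

```python
def uniform_knots(lo: float, hi: float, grid: int, order: int) -> list[float]:
    """Uniform knots: `grid` intervals on [lo, hi], extended by `order` intervals on each side."""
    step = (hi - lo) / grid
    return [lo + (i - order) * step for i in range(grid + 2 * order + 1)]


def bspline_basis(x: float, knots: list[float], order: int) -> list[float]:
    """Values at x of all degree-`order` B-spline basis functions (Cox-de Boor recursion)."""
    n = len(knots) - 1
    # Degree 0: indicator of the half-open knot interval containing x.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(n)]
    for k in range(1, order + 1):
        raised = []
        for i in range(n - k):
            left_den = knots[i + k] - knots[i]
            right_den = knots[i + k + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = (knots[i + k + 1] - x) / right_den * basis[i + 1] if right_den > 0 else 0.0
            raised.append(left + right)
        basis = raised
    return basis  # length: grid + order


def spline_activation(x: float, coeffs: list[float], knots: list[float], order: int) -> float:
    """Learnable activation: weighted sum of basis functions; `coeffs` would be trained."""
    return sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, order)))


# Hypothetical settings: 5 grid intervals on [-1, 1], cubic splines.
knots = uniform_knots(-1.0, 1.0, grid=5, order=3)
coeffs = [0.1 * i for i in range(5 + 3)]  # placeholder values, not trained weights
print(spline_activation(0.3, coeffs, knots, order=3))
```

With `grid` intervals and degree `order`, the activation has `grid + order` coefficients, so its shape is learned rather than fixed like ReLU or GELU. Inside the grid range the basis functions sum to one, so setting every coefficient to the same constant yields that constant.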