Kolmogorov-Arnold Transformer

Xingyi Yang, Xinchao Wang

2024-09-17

Summary

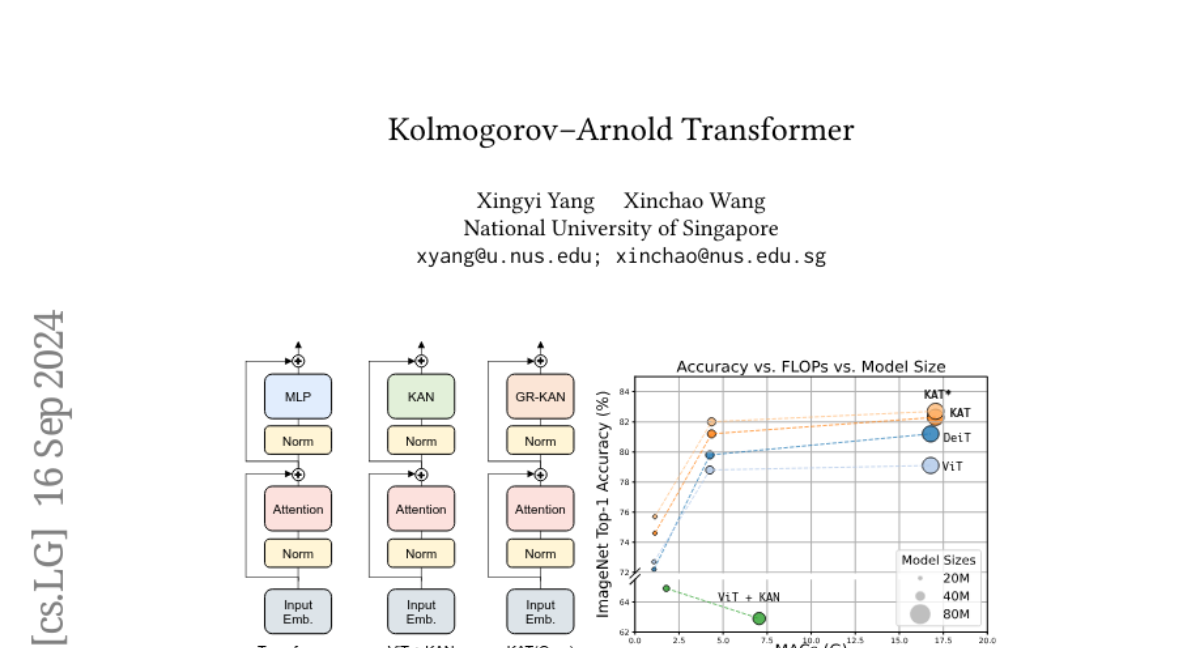

This paper introduces the Kolmogorov-Arnold Transformer (KAT), a new type of deep learning model that improves how information is processed in transformers by using a different kind of layer called Kolmogorov-Arnold Network (KAN).

What's the problem?

Traditional transformers use multi-layer perceptron (MLP) layers to mix information, but this can be inefficient and slow, especially with complex data. The standard methods struggle with three main issues: they are not optimized for modern computing hardware, they require a lot of computation for each input-output pair, and initializing weights correctly is challenging.

What's the solution?

To solve these problems, KAT replaces MLP layers with KAN layers. It uses rational functions instead of B-spline functions to improve speed on GPUs, shares weights among groups of neurons to reduce computational load, and carefully initializes weights to maintain consistent performance across layers. These changes allow KAT to work better and faster than traditional transformer models.

Why it matters?

This research is important because it enhances the efficiency and effectiveness of deep learning models, which are used in many applications like image recognition and natural language processing. By improving how transformers work, KAT can lead to better performance in various tasks while also being easier to implement.

Abstract

Transformers stand as the cornerstone of mordern deep learning. Traditionally, these models rely on multi-layer perceptron (MLP) layers to mix the information between channels. In this paper, we introduce the Kolmogorov-Arnold Transformer (KAT), a novel architecture that replaces MLP layers with Kolmogorov-Arnold Network (KAN) layers to enhance the expressiveness and performance of the model. Integrating KANs into transformers, however, is no easy feat, especially when scaled up. Specifically, we identify three key challenges: (C1) Base function. The standard B-spline function used in KANs is not optimized for parallel computing on modern hardware, resulting in slower inference speeds. (C2) Parameter and Computation Inefficiency. KAN requires a unique function for each input-output pair, making the computation extremely large. (C3) Weight initialization. The initialization of weights in KANs is particularly challenging due to their learnable activation functions, which are critical for achieving convergence in deep neural networks. To overcome the aforementioned challenges, we propose three key solutions: (S1) Rational basis. We replace B-spline functions with rational functions to improve compatibility with modern GPUs. By implementing this in CUDA, we achieve faster computations. (S2) Group KAN. We share the activation weights through a group of neurons, to reduce the computational load without sacrificing performance. (S3) Variance-preserving initialization. We carefully initialize the activation weights to make sure that the activation variance is maintained across layers. With these designs, KAT scales effectively and readily outperforms traditional MLP-based transformers.