LearnAct: Few-Shot Mobile GUI Agent with a Unified Demonstration Benchmark

Guangyi Liu, Pengxiang Zhao, Liang Liu, Zhiming Chen, Yuxiang Chai, Shuai Ren, Hao Wang, Shibo He, Wenchao Meng

2025-04-22

Summary

This paper presents LearnAct, a system, and LearnGUI, an accompanying dataset, which help AI agents on mobile devices learn to use apps better from just a few examples of a person doing a task.

What's the problem?

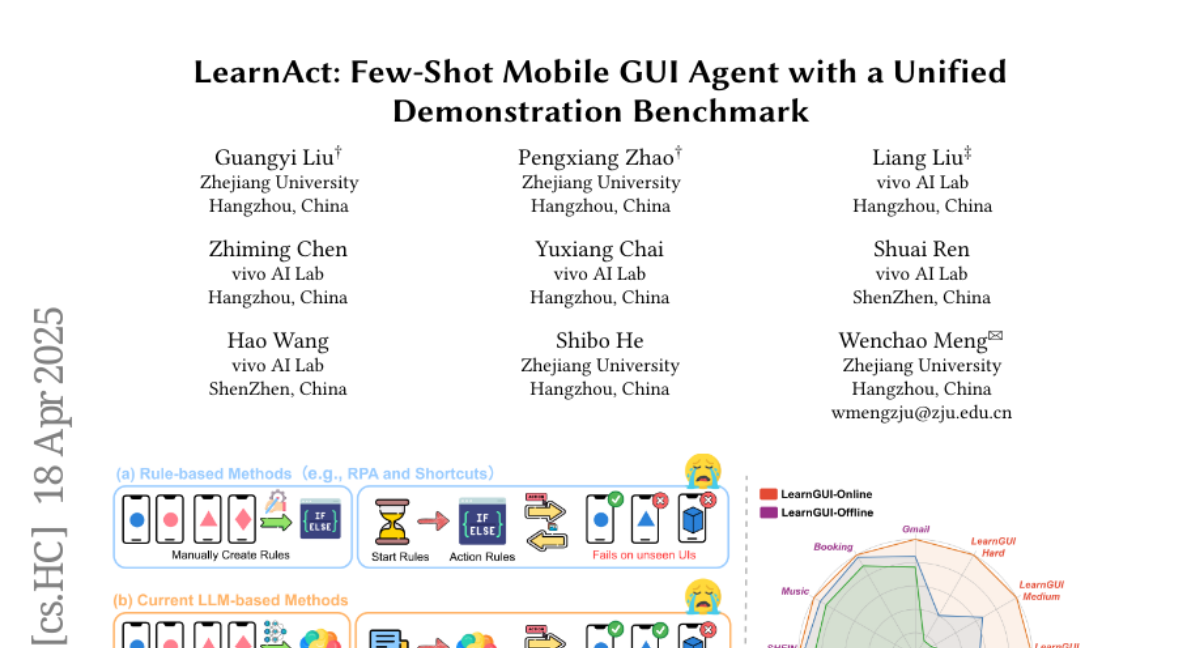

Mobile AI agents struggle to handle the enormous variety of apps and tasks that people use every day. Conventional training requires large amounts of data and still does not make agents flexible enough for new or personalized situations, so they often fail on apps or tasks they have not seen before.

What's the solution?

The researchers created LearnAct, which gives the AI agent a few demonstrations of how a person completes a task in a mobile app. They also built LearnGUI, a benchmark dataset of such demonstration tasks. LearnAct is a multi-agent framework with three components: DemoParser extracts knowledge from the demonstrations, KnowSeeker retrieves the knowledge relevant to the current task, and ActExecutor uses that knowledge to carry out the task, even when the app or situation differs somewhat from what was demonstrated. This approach substantially improved task success rates in both offline benchmark tests and online tests on real apps.
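The parse, retrieve, and execute stages described above can be illustrated with a minimal sketch. Everything in it is hypothetical: `Demonstration`, `parse_knowledge`, `retrieve`, and `build_prompt` are illustrative names, and the word-overlap (Jaccard) similarity is a deliberately crude stand-in, not the paper's retrieval method. In a real agent the resulting prompt would be sent, along with screenshots, to a vision-language model that chooses the next action; here we only build the prompt.

```python
from dataclasses import dataclass


@dataclass
class Demonstration:
    """Hypothetical record: one human demonstration of a mobile task."""
    instruction: str
    steps: list[str]  # e.g. "tap 'Settings'", "type 'alarm'"


def tokenize(text: str) -> set[str]:
    """Lower-case word set used for a crude similarity score."""
    words = (w.strip(".,'\"").lower() for w in text.split())
    return {w for w in words if w}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard overlap; a stand-in for a learned embedding similarity."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def parse_knowledge(demo: Demonstration) -> str:
    """Stage 1 (parse): turn a raw demonstration into a compact text note."""
    numbered = "; ".join(f"{i + 1}. {s}" for i, s in enumerate(demo.steps))
    return f"Task: {demo.instruction} -> {numbered}"


def retrieve(task: str, demos: list[Demonstration], k: int = 1) -> list[Demonstration]:
    """Stage 2 (retrieve): rank demonstrations by similarity to the new task."""
    query = tokenize(task)
    ranked = sorted(
        demos,
        key=lambda d: jaccard(query, tokenize(d.instruction)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(task: str, demos: list[Demonstration], k: int = 1) -> str:
    """Stage 3 (execute): prepend the retrieved knowledge to the agent's prompt."""
    notes = [parse_knowledge(d) for d in retrieve(task, demos, k)]
    return "\n".join(["Relevant demonstrations:", *notes, f"New task: {task}", "Next action:"])
```

For example, given one demonstration of setting a 7 am alarm and one of sending a text, `build_prompt("Set an alarm for 6 am", demos)` selects the alarm demonstration, because it shares five of seven words with the new task, and places its steps ahead of the new instruction. The point of the design is that the agent is conditioned on a few closely related examples instead of being retrained.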

Why does it matter?

This matters because it makes mobile AI assistants more useful and adaptable: they can help people with personal or complex tasks without needing huge amounts of training data. A few demonstrations can be enough for an assistant to handle the particular apps and routines a person uses.

Abstract

The LearnAct framework and LearnGUI dataset improve mobile GUI agent performance through demonstration-based learning, enhancing task success rates in both offline and online evaluations.