Leave No Document Behind: Benchmarking Long-Context LLMs with Extended Multi-Doc QA

Minzheng Wang, Longze Chen, Cheng Fu, Shengyi Liao, Xinghua Zhang, Bingli Wu, Haiyang Yu, Nan Xu, Lei Zhang, Run Luo, Yunshui Li, Min Yang, Fei Huang, Yongbin Li

2024-06-26

Summary

This paper presents Loong, a new benchmark designed to evaluate how well large language models (LLMs) handle long contexts using multiple documents. It aims to create a more realistic testing environment that reflects real-world scenarios.

What's the problem?

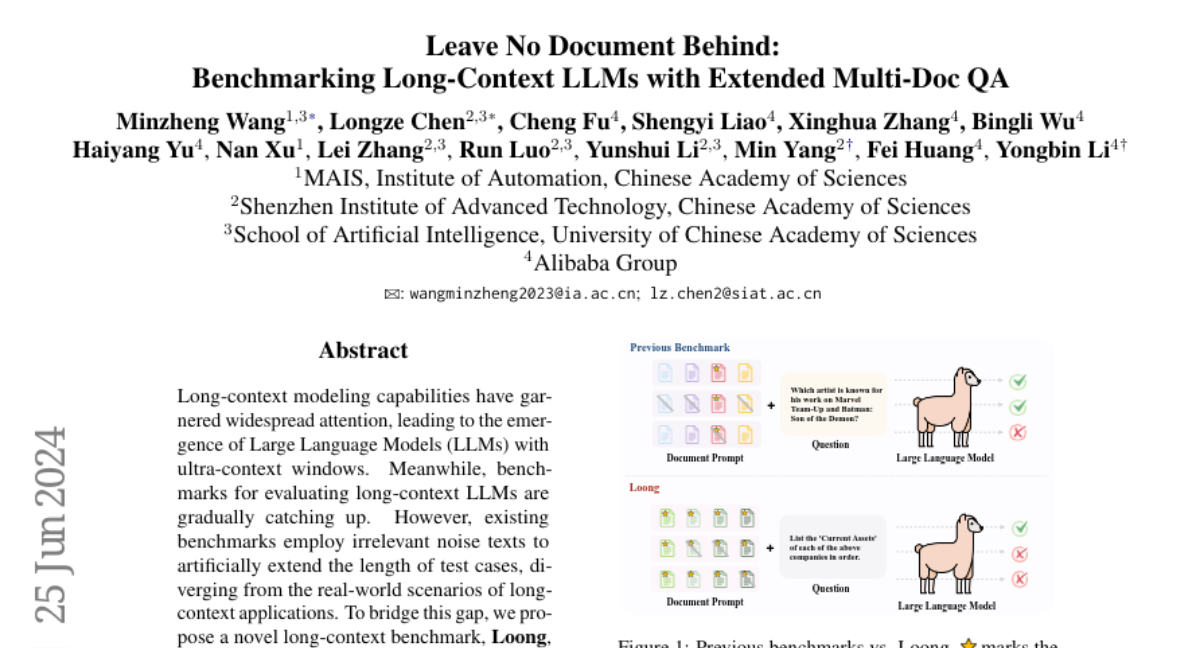

Many existing benchmarks for testing LLMs use irrelevant or noisy texts to artificially extend the length of test cases, which does not accurately represent how these models would perform in real-life situations. This makes it difficult to assess their true capabilities when dealing with long documents and complex information spread across multiple sources.

What's the solution?

To address this issue, the authors developed Loong, which focuses on extended multi-document question answering (QA). In this benchmark, every document included is relevant to the final answer, meaning that ignoring any one document could lead to an incorrect response. Loong introduces four types of evaluation tasks: Spotlight Locating (finding specific information), Comparison (comparing information across documents), Clustering (grouping related information), and Chain of Reasoning (drawing logical conclusions from multiple documents). This structure allows for a more comprehensive assessment of LLMs' abilities to understand and process long contexts.

Why it matters?

This research is important because it provides a more accurate way to evaluate the performance of LLMs in real-world applications. By focusing on how these models manage information across multiple documents, Loong can help identify areas where LLMs need improvement, ultimately leading to better AI systems that can handle complex tasks more effectively.

Abstract

Long-context modeling capabilities have garnered widespread attention, leading to the emergence of Large Language Models (LLMs) with ultra-context windows. Meanwhile, benchmarks for evaluating long-context LLMs are gradually catching up. However, existing benchmarks employ irrelevant noise texts to artificially extend the length of test cases, diverging from the real-world scenarios of long-context applications. To bridge this gap, we propose a novel long-context benchmark, Loong, aligning with realistic scenarios through extended multi-document question answering (QA). Unlike typical document QA, in Loong's test cases, each document is relevant to the final answer, ignoring any document will lead to the failure of the answer. Furthermore, Loong introduces four types of tasks with a range of context lengths: Spotlight Locating, Comparison, Clustering, and Chain of Reasoning, to facilitate a more realistic and comprehensive evaluation of long-context understanding. Extensive experiments indicate that existing long-context language models still exhibit considerable potential for enhancement. Retrieval augmented generation (RAG) achieves poor performance, demonstrating that Loong can reliably assess the model's long-context modeling capabilities.