Lessons from Learning to Spin "Pens"

Jun Wang, Ying Yuan, Haichuan Che, Haozhi Qi, Yi Ma, Jitendra Malik, Xiaolong Wang

2024-07-29

Summary

This paper describes a project that teaches robots to manipulate pen-like objects in the hand, for example by spinning them. This skill matters for handling many everyday tools. The researchers combine reinforcement learning in simulation with fine-tuning on a small amount of real-world data to improve how robots learn this skill.

What's the problem?

Teaching robots to manipulate objects like pens is challenging because current learning-based methods lack high-quality demonstrations of the task. In addition, objects behave quite differently in simulation than in the real world (the sim-to-real gap), which makes it hard for robots to learn effectively from simulation alone.

What's the solution?

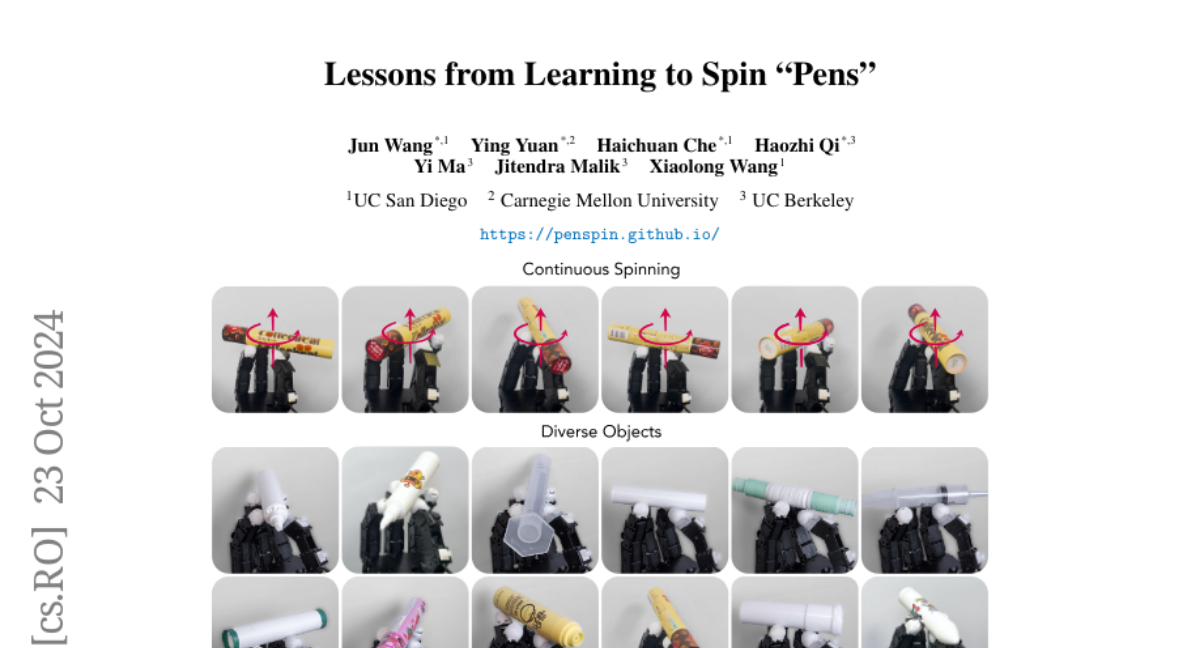

To address these challenges, the authors first used reinforcement learning to train an "oracle" policy in simulation. This policy has access to privileged information that is available in simulation but not on the real robot. They used the oracle to generate a high-fidelity dataset of movements (trajectories), which served two purposes. First, it was used to pre-train a sensorimotor policy in simulation. Second, its trajectories were replayed open-loop (without feedback) on the real robot. The authors then fine-tuned the sensorimotor policy on these real-world trajectories to adapt it to real-world dynamics. With fewer than 50 real-world trajectories, the robot learned to rotate more than ten pen-like objects with different physical properties for multiple revolutions.
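The four stages of this pipeline can be illustrated with a deliberately tiny toy in Python. The stages are: an oracle with privileged information in simulation, pre-training a student policy, open-loop replay on the "real" system, and fine-tuning. Everything in the sketch is hypothetical and chosen only for illustration. It assumes a made-up 1-D "spin" task where a per-object `gain` plays the role of the privileged physical property. The student is a linear policy that never sees `gain`, and the "real" system simply uses a different gain than simulation. The helper names `rollout`, `replay_open_loop`, `fit_linear`, `finetune`, and `pipeline` are invented here. Keeping only replays that reach the goal is also an assumption about how real trajectories are selected. This is not the authors' code; their system uses a robot hand and learned policies over much richer observations.

```python
import random


def rollout(policy, gain, steps, rng, noise=0.01):
    """Run `policy(angle, t)` in a toy 1-D spin task (hypothetical dynamics).

    Each step the object angle advances by gain * action plus small Gaussian
    noise. Returns the (observation, action) pairs and the final angle.
    """
    angle, traj = 0.0, []
    for t in range(steps):
        action = policy(angle, t)
        traj.append((angle, action))
        angle += gain * action + rng.gauss(0.0, noise)
    return traj, angle


def replay_open_loop(actions, gain, rng):
    """Open-loop replay: send recorded actions, ignoring the observed angle."""
    return rollout(lambda angle, t: actions[t], gain, len(actions), rng)


def fit_linear(pairs):
    """Behavior cloning for a tiny linear student a = w * obs + b (least squares)."""
    n = len(pairs)
    mean_o = sum(o for o, _ in pairs) / n
    mean_a = sum(a for _, a in pairs) / n
    sxx = sum((o - mean_o) ** 2 for o, _ in pairs)
    sxy = sum((o - mean_o) * (a - mean_a) for o, a in pairs)
    w = sxy / sxx if sxx > 0 else 0.0
    return w, mean_a - w * mean_o


def finetune(params, pairs, lr=0.01, epochs=200):
    """Continue training (w, b) from their current values with gradient descent on MSE."""
    w, b = params
    n = len(pairs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * o + b - a) * o for o, a in pairs) / n
        grad_b = sum(2 * (w * o + b - a) for o, a in pairs) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def pipeline(seed=0, n_sim=200, n_real=50, steps=10, goal=6.28, tol=0.5):
    rng = random.Random(seed)

    # 1) Oracle in simulation: it sees the privileged per-object gain.
    sim_pairs, sim_action_seqs = [], []
    for _ in range(n_sim):
        gain = rng.uniform(0.8, 1.2)  # hypothetical physical property per object
        oracle = lambda angle, t, g=gain: (goal - angle) / (g * (steps - t))
        traj, _ = rollout(oracle, gain, steps, rng)
        sim_pairs.extend(traj)
        sim_action_seqs.append([a for _, a in traj])

    # 2) Pre-train the student on simulated data; it never sees the gain.
    pretrained = fit_linear(sim_pairs)

    # 3) Replay simulated action sequences open loop on the "real" system,
    #    whose gain differs from simulation; keep only successful replays.
    real_pairs, n_success = [], 0
    for actions in sim_action_seqs[:n_real]:
        real_gain = rng.uniform(0.7, 1.3)  # hypothetical sim-to-real mismatch
        traj, final_angle = replay_open_loop(actions, real_gain, rng)
        if abs(final_angle - goal) < tol:
            real_pairs.extend(traj)
            n_success += 1

    # 4) Fine-tune the pre-trained student on the real observation/action pairs.
    finetuned = finetune(pretrained, real_pairs) if real_pairs else pretrained
    return pretrained, finetuned, n_success
```

Calling `pretrained, finetuned, n_success = pipeline()` returns the student's parameters before and after fine-tuning, plus how many of the 50 replays succeeded. Open-loop replay is attractive in this setting because it needs no working real-world policy: any replay that happens to succeed yields real observations paired with actions that can then be used for fine-tuning.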

Why does it matter?

This research matters because many tools, such as hammers and screwdrivers, share the elongated shape of a pen, so better in-hand manipulation of pen-like objects could help robots use such tools. Improving how robots learn these skills could make robotic assistants more useful in applications ranging from manufacturing to personal assistance.

Abstract

In-hand manipulation of pen-like objects is an important skill in our daily lives, as many tools such as hammers and screwdrivers are similarly shaped. However, current learning-based methods struggle with this task due to a lack of high-quality demonstrations and the significant gap between simulation and the real world. In this work, we push the boundaries of learning-based in-hand manipulation systems by demonstrating the capability to spin pen-like objects. We first use reinforcement learning to train an oracle policy with privileged information and generate a high-fidelity trajectory dataset in simulation. This serves two purposes: 1) pre-training a sensorimotor policy in simulation; 2) conducting open-loop trajectory replay in the real world. We then fine-tune the sensorimotor policy using these real-world trajectories to adapt it to the real-world dynamics. With fewer than 50 trajectories, our policy learns to rotate more than ten pen-like objects with different physical properties for multiple revolutions. We present a comprehensive analysis of our design choices and share the lessons learned during development.