LinFusion: 1 GPU, 1 Minute, 16K Image

Songhua Liu, Weihao Yu, Zhenxiong Tan, Xinchao Wang

2024-09-04

Summary

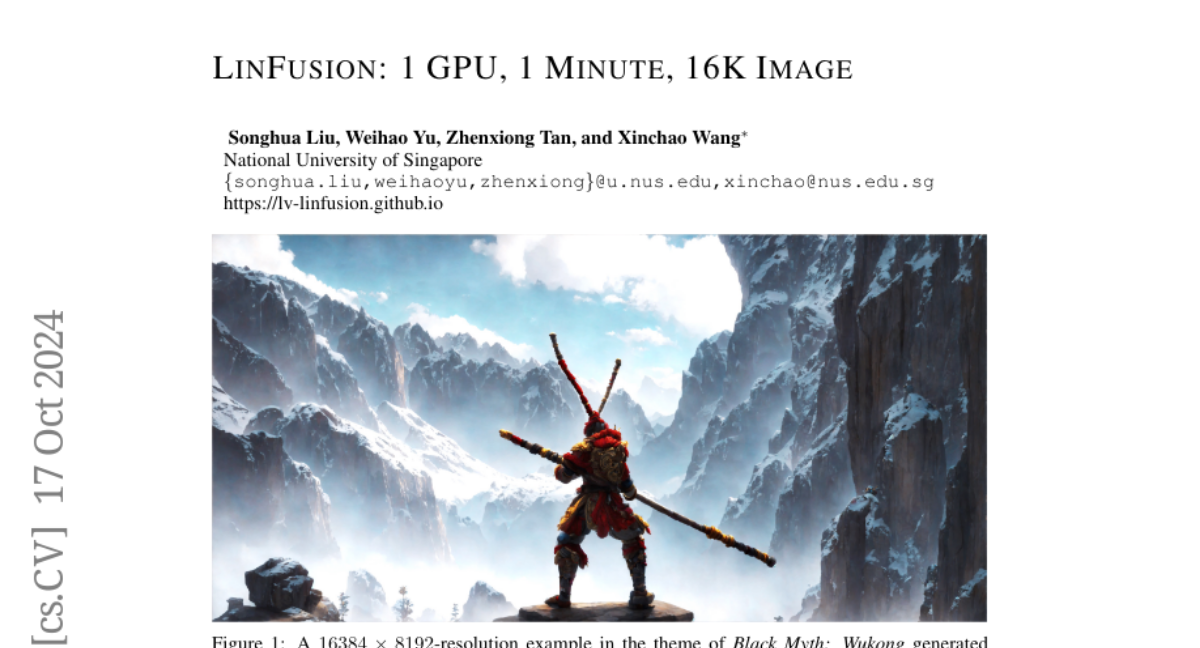

This paper talks about LinFusion, a new method that allows large language models to generate high-resolution images quickly and efficiently using only one GPU in a short amount of time.

What's the problem?

Current methods for generating detailed images with AI can be slow and require a lot of computing power, especially when working with high-resolution images. This makes it difficult to create complex visual content efficiently, as traditional models struggle with memory and processing time due to their complexity.

What's the solution?

LinFusion introduces a new linear attention mechanism that simplifies how the model processes information, allowing it to generate long sequences of images without needing as much memory or time. By building on existing models and optimizing their architecture, LinFusion can produce high-quality images at resolutions up to 16K while using significantly less computational power. It also works well with pre-trained models, making it easier to implement.

Why it matters?

This research is important because it makes it possible to create stunning high-resolution images more efficiently, which can benefit various fields such as gaming, film production, and virtual reality. By reducing the resources needed for image generation, LinFusion opens up new opportunities for creators and developers to produce high-quality visual content quickly.

Abstract

Modern diffusion models, particularly those utilizing a Transformer-based UNet for denoising, rely heavily on self-attention operations to manage complex spatial relationships, thus achieving impressive generation performance. However, this existing paradigm faces significant challenges in generating high-resolution visual content due to its quadratic time and memory complexity with respect to the number of spatial tokens. To address this limitation, we aim at a novel linear attention mechanism as an alternative in this paper. Specifically, we begin our exploration from recently introduced models with linear complexity, e.g., Mamba, Mamba2, and Gated Linear Attention, and identify two key features-attention normalization and non-causal inference-that enhance high-resolution visual generation performance. Building on these insights, we introduce a generalized linear attention paradigm, which serves as a low-rank approximation of a wide spectrum of popular linear token mixers. To save the training cost and better leverage pre-trained models, we initialize our models and distill the knowledge from pre-trained StableDiffusion (SD). We find that the distilled model, termed LinFusion, achieves performance on par with or superior to the original SD after only modest training, while significantly reducing time and memory complexity. Extensive experiments on SD-v1.5, SD-v2.1, and SD-XL demonstrate that LinFusion delivers satisfactory zero-shot cross-resolution generation performance, generating high-resolution images like 16K resolution. Moreover, it is highly compatible with pre-trained SD components, such as ControlNet and IP-Adapter, requiring no adaptation efforts. Codes are available at https://github.com/Huage001/LinFusion.