Linguistic Generalizability of Test-Time Scaling in Mathematical Reasoning

Guijin Son, Jiwoo Hong, Hyunwoo Ko, James Thorne

2025-02-25

Summary

This paper talks about testing whether giving AI language models more time to think during problem-solving (called test-time scaling) works as well for math problems in many languages as it does for English

What's the problem?

While we know that training AI on more data in different languages helps it understand multiple languages, we're not sure if giving the AI extra time to think during problem-solving works equally well across languages. This is important because we want AI to be good at math in all languages, not just English

What's the solution?

The researchers created a new test called MCLM with hard math problems in 55 languages. They then tried three different ways of giving AI extra thinking time and tested these on two AI models. They compared how well these methods worked in English versus other languages

Why it matters?

This matters because it shows that current ways of improving AI's math skills might not work equally well for all languages. The study found that while giving AI more thinking time helped a lot for English math problems, it didn't help nearly as much for other languages. This suggests we need to find better ways to make AI equally smart at math no matter what language it's working in, which is crucial for making AI fair and useful worldwide

Abstract

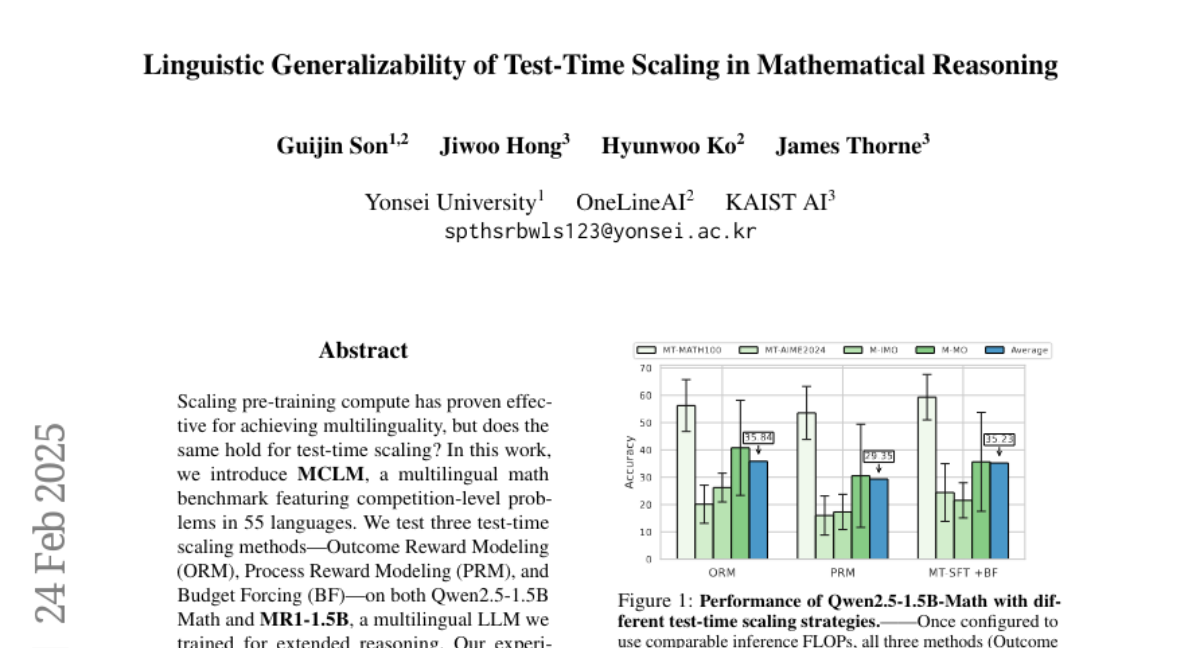

Scaling pre-training compute has proven effective for achieving mulitlinguality, but does the same hold for test-time scaling? In this work, we introduce MCLM, a multilingual math benchmark featuring competition-level problems in 55 languages. We test three test-time scaling methods-Outcome Reward Modeling (ORM), Process Reward Modeling (ORM), and Budget Forcing (BF)-on both Qwen2.5-1.5B Math and MR1-1.5B, a multilingual LLM we trained for extended reasoning. Our experiments show that using Qwen2.5-1.5B Math with ORM achieves a score of 35.8 on MCLM, while BF on MR1-1.5B attains 35.2. Although "thinking LLMs" have recently garnered significant attention, we find that their performance is comparable to traditional scaling methods like best-of-N once constrained to similar levels of inference FLOPs. Moreover, while BF yields a 20-point improvement on English AIME, it provides only a 1.94-point average gain across other languages-a pattern consistent across the other test-time scaling methods we studied-higlighting that test-time scaling may not generalize as effectively to multilingual tasks. To foster further research, we release MCLM, MR1-1.5B, and evaluation results.