LLaVA-3D: A Simple yet Effective Pathway to Empowering LMMs with 3D-awareness

Chenming Zhu, Tai Wang, Wenwei Zhang, Jiangmiao Pang, Xihui Liu

2024-09-27

Summary

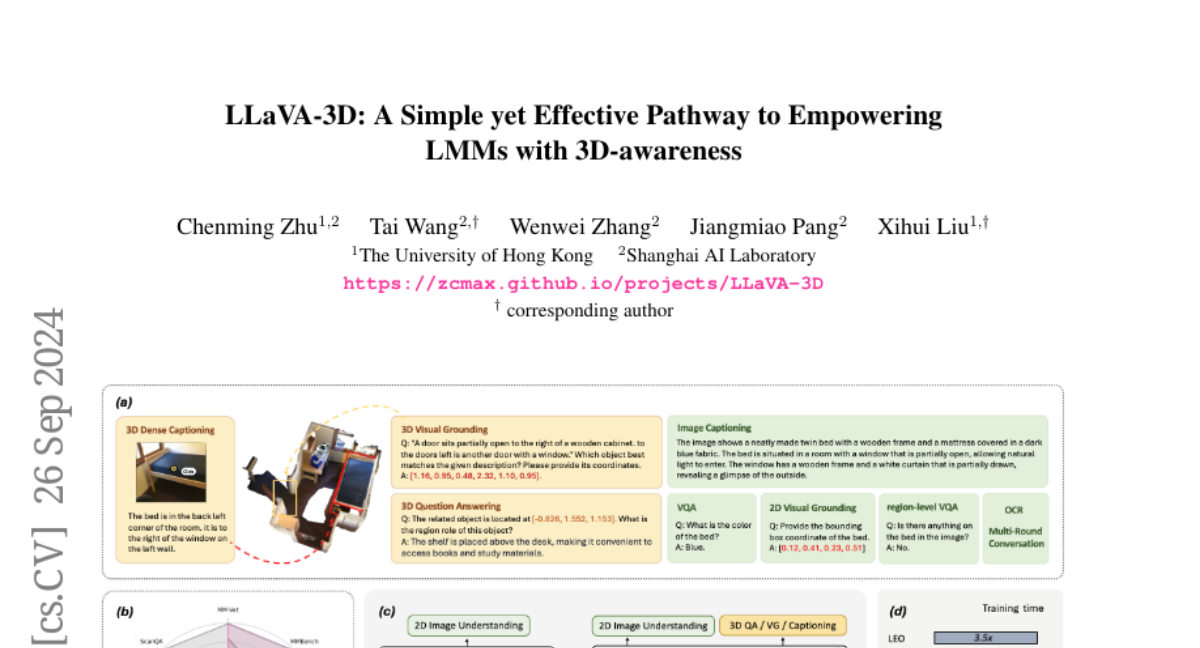

This paper introduces LLaVA-3D, a framework that gives large multimodal models (LMMs) the ability to understand and interpret 3D scenes. It builds on the 2D understanding of the existing LLaVA model and adds 3D awareness without giving up that 2D capability.

What's the problem?

Many models have become good at understanding 2D images and videos, but they struggle with 3D scenes because large-scale 3D vision-language datasets and powerful 3D encoders are scarce. This limits how well they can grasp where objects are and how they relate to one another in three-dimensional space.

What's the solution?

LLaVA-3D addresses this challenge with a simple representation called '3D Patch', which connects each 2D CLIP patch feature to its corresponding position in 3D space. Because 3D Patches are built from the same 2D features LLaVA already uses, the model can be trained jointly on 2D and 3D vision-language instruction data. The researchers integrated this representation into the existing LLaVA model and tuned it on both kinds of data. As a result, LLaVA-3D can understand and analyze 3D scenes while keeping 2D image understanding comparable to LLaVA's.
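A 3D Patch needs a 3D position for every 2D patch. One common way to obtain it, assuming each view comes with a depth map, pinhole intrinsics `K`, and a camera-to-world pose (assumptions here, not details taken from this summary), is to back-project the center pixel of each patch into world coordinates. The function name `patch_centers_to_world` and the nearest-pixel depth lookup are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np


def patch_centers_to_world(depth, K, cam_to_world, patch_size):
    """Back-project the center pixel of each square patch into world coordinates.

    Hypothetical helper (a sketch, not LLaVA-3D's code).
    depth: (H, W) depth map for one view (assumed available).
    K: (3, 3) pinhole intrinsics.
    cam_to_world: (4, 4) camera-to-world extrinsics.
    patch_size: patch side length in pixels (e.g. 14 for a ViT-L/14 CLIP encoder).
    Returns an array of shape (H // patch_size, W // patch_size, 3).
    """
    H, W = depth.shape
    gh, gw = H // patch_size, W // patch_size
    # Pixel coordinates (u, v) of every patch center.
    us = (np.arange(gw) + 0.5) * patch_size
    vs = (np.arange(gh) + 0.5) * patch_size
    u, v = np.meshgrid(us, vs)  # both (gh, gw)
    # Nearest-pixel depth at each patch center.
    d = depth[v.astype(int), u.astype(int)]
    # Camera-frame point: X = d * K^{-1} [u, v, 1]^T.
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)  # (gh, gw, 3)
    cam = (pix @ np.linalg.inv(K).T) * d[..., None]
    # Homogeneous transform into the shared world frame.
    cam_h = np.concatenate([cam, np.ones_like(d)[..., None]], axis=-1)
    world = cam_h @ cam_to_world.T
    return world[..., :3]


# Usage: a 28x28 view at constant depth 2 with an identity pose yields a 2x2 grid.
K = np.array([[14.0, 0.0, 14.0], [0.0, 14.0, 14.0], [0.0, 0.0, 1.0]])
grid = patch_centers_to_world(np.full((28, 28), 2.0), K, np.eye(4), 14)
print(grid.shape)  # (2, 2, 3)
```

Expressing every view's patches in one world frame is what lets patches from different images of the same scene share a common 3D coordinate system.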

Why it matters?

This research is significant because 3D awareness lets AI models reason about the spatial layout of the world around them, making them more useful for applications such as virtual reality, robotics, and augmented reality. By enhancing LMMs with 3D awareness, LLaVA-3D could lead to better interactions between humans and machines in environments that require a deep understanding of spatial relationships.

Abstract

Recent advancements in Large Multimodal Models (LMMs) have greatly enhanced their proficiency in 2D visual understanding tasks, enabling them to effectively process and understand images and videos. However, the development of LMMs with 3D-awareness for 3D scene understanding has been hindered by the lack of large-scale 3D vision-language datasets and powerful 3D encoders. In this paper, we introduce a simple yet effective framework called LLaVA-3D. Leveraging the strong 2D understanding priors from LLaVA, our LLaVA-3D efficiently adapts LLaVA for 3D scene understanding without compromising 2D understanding capabilities. To achieve this, we employ a simple yet effective representation, 3D Patch, which connects 2D CLIP patch features with their corresponding positions in 3D space. By integrating the 3D Patches into 2D LMMs and employing joint 2D and 3D vision-language instruction tuning, we establish a unified architecture for both 2D image understanding and 3D scene understanding. Experimental results show that LLaVA-3D converges 3.5x faster than existing 3D LMMs when trained on 3D vision-language datasets. Moreover, LLaVA-3D not only achieves state-of-the-art performance across various 3D tasks but also maintains 2D image understanding and vision-language conversation capabilities comparable to LLaVA's.
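The "unified architecture" can be pictured as one token builder with two paths. The sketch below assumes a 3D Patch is formed by adding a position encoding of each patch's 3D coordinates to its 2D feature; a single matrix `pos_proj` stands in for whatever learned position encoder the model actually uses (a hypothetical simplification). A 2D image takes the plain path, and a multi-view 3D scene takes the position-augmented path. The function name `build_visual_tokens` is illustrative only.

```python
import numpy as np


def build_visual_tokens(patch_feats, xyz=None, pos_proj=None):
    """Flatten per-view patch features into one visual token sequence.

    Hypothetical sketch, not LLaVA-3D's code.
    patch_feats: (V, N, D) 2D patch features for V views (V = 1 for a single image).
    xyz: optional (V, N, 3) world coordinates, one per patch (3D-scene path).
    pos_proj: (3, D) matrix standing in for a learned 3D position encoder.
    Returns an array of shape (V * N, D).
    """
    V, N, D = patch_feats.shape
    if xyz is None:
        # 2D image path: patch features pass through unchanged.
        tokens = patch_feats
    else:
        if pos_proj is None:
            raise ValueError("pos_proj is required when xyz is given")
        # 3D path: attach each patch's 3D position to its feature (a "3D Patch").
        tokens = patch_feats + xyz @ pos_proj
    # Concatenate all views into one sequence of V * N visual tokens.
    return tokens.reshape(V * N, D)


# Usage: two views of four patches each, with 8-dimensional features.
rng = np.random.default_rng(0)
feats = rng.normal(size=(2, 4, 8))
image_tokens = build_visual_tokens(feats)  # 2D path
scene_tokens = build_visual_tokens(feats, rng.normal(size=(2, 4, 3)), rng.normal(size=(3, 8)))
print(image_tokens.shape, scene_tokens.shape)  # (8, 8) (8, 8)
```

Adding the position encoding instead of concatenating it keeps the feature width unchanged, so in this sketch both paths produce tokens of the same shape for the same downstream layers.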