LLM-SRBench: A New Benchmark for Scientific Equation Discovery with Large Language Models

Parshin Shojaee, Ngoc-Hieu Nguyen, Kazem Meidani, Amir Barati Farimani, Khoa D Doan, Chandan K Reddy

2025-04-15

Summary

This paper introduces LLM-SRBench, a benchmark that tests how well large language models can discover scientific equations rather than recall ones they have memorized. The benchmark presents problems in novel representations so that a model cannot simply repeat what it has seen before.

What's the problem?

Many AI models appear capable because they remember a great deal of information, but recalling a known equation is not the same as discovering a new one. This makes it hard to tell whether a model can actually help with real scientific discovery or is only repeating what it already knows.

What's the solution?

The researchers developed LLM-SRBench to test AI models in a way that prevents them from relying on memorized answers. The benchmark presents problems in new forms and checks whether the model can work out the equations on its own. This makes the test more challenging and closer to real scientific research.
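To make the idea of checking a proposed equation concrete, here is a minimal sketch in Python. It is not the benchmark's actual evaluation code: the target function, the candidate equations, the sampling range, and the choice of normalized mean squared error (NMSE) as the score are all assumptions made for illustration.

```python
import math
import random


def nmse(predict, xs, ys):
    """Normalized mean squared error: squared error divided by the variance of ys.

    0.0 means a perfect fit; 1.0 is as good as always predicting the mean.
    """
    mean_y = sum(ys) / len(ys)
    num = sum((predict(x) - y) ** 2 for x, y in zip(xs, ys))
    den = sum((y - mean_y) ** 2 for y in ys)
    return num / den


# Hypothetical hidden equation that generates the data (an assumption,
# not an equation taken from the benchmark).
def ground_truth(x):
    return 3.0 * x * math.exp(-0.5 * x)


# Sample observations the model would be asked to explain.
rng = random.Random(0)
xs = [rng.uniform(0.1, 5.0) for _ in range(200)]
ys = [ground_truth(x) for x in xs]


# Two hypothetical candidate equations a model might propose.
def candidate_exact(x):
    return 3.0 * x * math.exp(-0.5 * x)


def candidate_wrong(x):
    return 3.0 * x  # misses the exponential decay term


score_exact = nmse(candidate_exact, xs, ys)
score_wrong = nmse(candidate_wrong, xs, ys)
print(f"exact candidate NMSE: {score_exact:.4f}")
print(f"wrong candidate NMSE: {score_wrong:.4f}")
```

A candidate that matches the hidden equation scores zero error, while one that omits a term scores noticeably worse. A numeric score like this only measures fit to data; it does not by itself show whether the model reasoned its way to the equation or recalled it, which is the gap the novel problem representations are meant to address.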

Why does it matter?

This work matters because it helps reveal the real strengths and weaknesses of AI in scientific discovery. By checking that a model is reasoning rather than memorizing, LLM-SRBench can guide future improvements and help make AI a more reliable tool for scientists.

Abstract

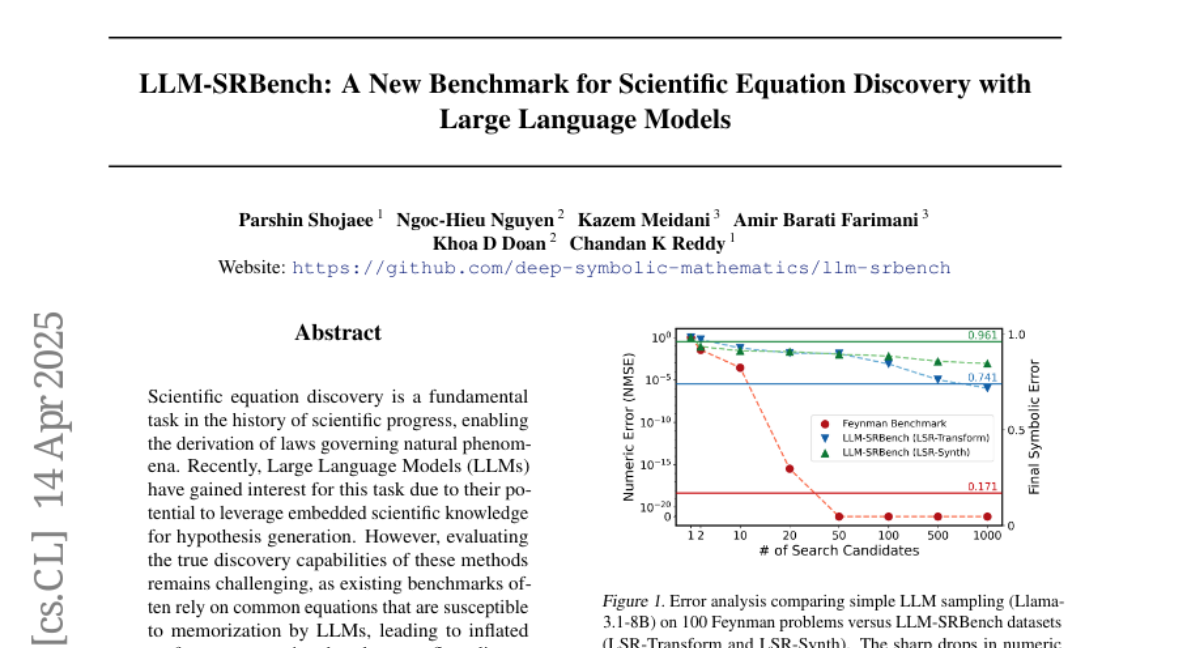

LLM-SRBench is a comprehensive benchmark for evaluating LLMs on scientific equation discovery. It uses novel problem representations to prevent memorization, and it indicates that significant challenges remain in this domain.