LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models

Haiwen Huang, Anpei Chen, Volodymyr Havrylov, Andreas Geiger, Dan Zhang

2025-04-22

Summary

This paper talks about LoftUp, a new technique that helps computer vision models create clearer and more detailed images by improving how they fill in or 'upsample' features at the pixel level.

What's the problem?

The problem is that when vision models try to create high-resolution images or analyze tiny details, they often lose important information or make things look blurry because their current methods for upsampling aren't smart enough.

What's the solution?

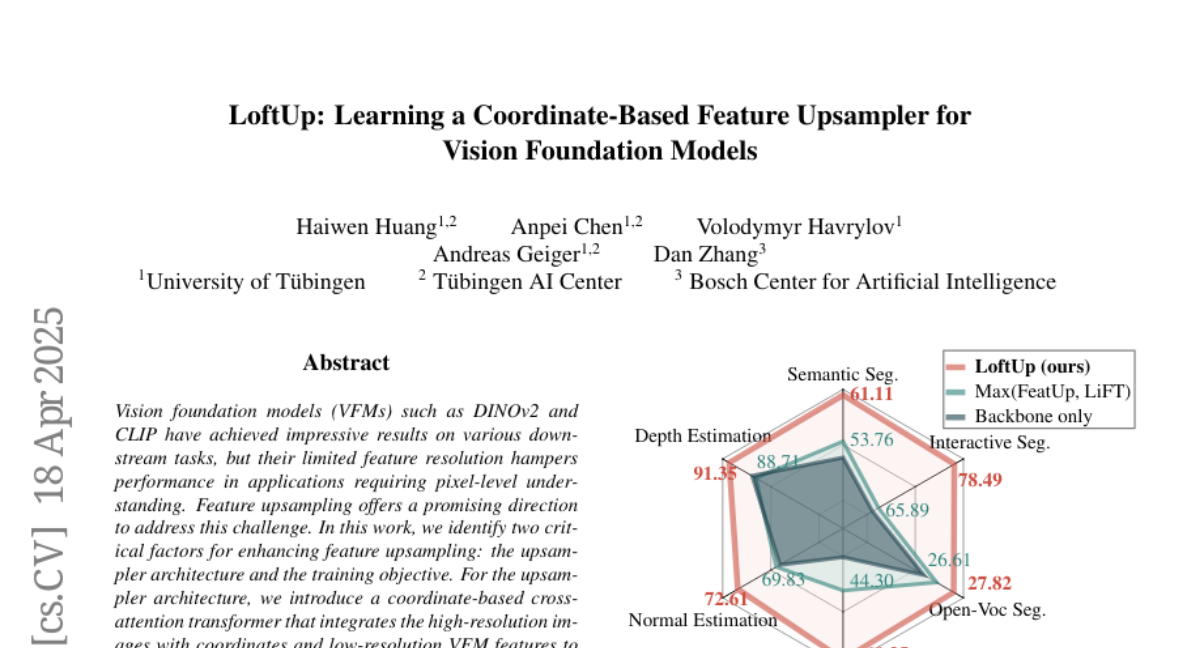

The researchers designed a special transformer that uses coordinate-based cross-attention, along with a clever masking and self-training method, to help the model focus on the right areas and learn from itself. This makes the upsampling process much more accurate, so the final images are sharper and more precise.

Why it matters?

This matters because it allows AI to better understand and generate images, which is important for things like medical scans, satellite photos, and any application where seeing small details clearly can make a big difference.

Abstract

A coordinate-based cross-attention transformer and a class-agnostic masking with self-distillation approach improve feature upsampling in vision foundation models, enhancing performance in pixel-level understanding tasks.