LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs

Ziyan Jiang, Xueguang Ma, Wenhu Chen

2024-06-24

Summary

This paper introduces LongRAG, a new framework that improves how retrieval-augmented generation (RAG) works by using longer pieces of text to help answer questions more effectively.

What's the problem?

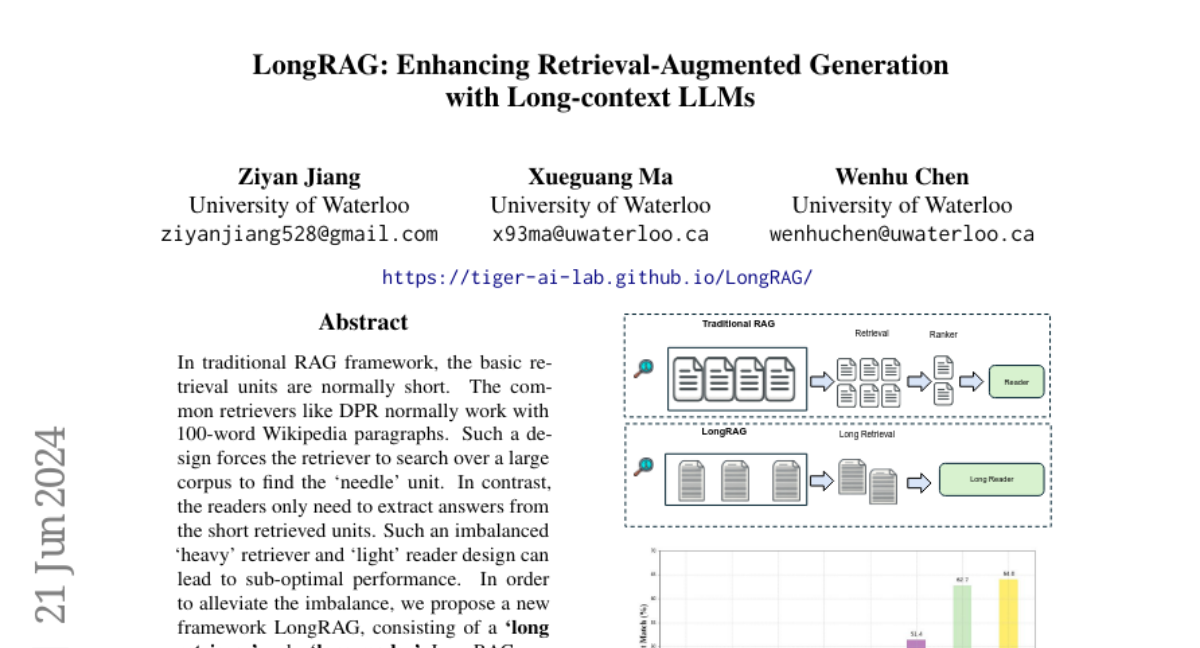

Traditional RAG systems use short text passages (like 100-word sections from Wikipedia) to find answers. This approach makes it hard for the system to retrieve relevant information because it has to sift through a large amount of data to find the right piece, which can lead to poor performance. The design creates an imbalance where the retrieval system (the part that finds information) is overloaded while the reading part (the part that extracts answers) is underused.

What's the solution?

The researchers developed LongRAG, which uses 'long retrievers' and 'long readers.' Instead of short passages, LongRAG processes longer units of text, around 4,000 words each, allowing the system to work with fewer but more informative pieces of data. This change reduces the total number of text units from 22 million to just 700,000. By doing this, they significantly improved the retrieval accuracy: they achieved a recall rate of 71% on one dataset and 72% on another. They then used a long-context language model to extract answers from these longer texts without needing extra training.

Why it matters?

This research is important because it shows how using longer text units can make AI systems better at answering questions. By improving the way these systems retrieve and process information, LongRAG can lead to more accurate and efficient AI applications in areas like education, customer service, and research, where understanding complex information is crucial.

Abstract

In traditional RAG framework, the basic retrieval units are normally short. The common retrievers like DPR normally work with 100-word Wikipedia paragraphs. Such a design forces the retriever to search over a large corpus to find the `needle' unit. In contrast, the readers only need to extract answers from the short retrieved units. Such an imbalanced `heavy' retriever and `light' reader design can lead to sub-optimal performance. In order to alleviate the imbalance, we propose a new framework LongRAG, consisting of a `long retriever' and a `long reader'. LongRAG processes the entire Wikipedia into 4K-token units, which is 30x longer than before. By increasing the unit size, we significantly reduce the total units from 22M to 700K. This significantly lowers the burden of retriever, which leads to a remarkable retrieval score: answer recall@1=71% on NQ (previously 52%) and answer recall@2=72% (previously 47%) on HotpotQA (full-wiki). Then we feed the top-k retrieved units (approx 30K tokens) to an existing long-context LLM to perform zero-shot answer extraction. Without requiring any training, LongRAG achieves an EM of 62.7% on NQ, which is the best known result. LongRAG also achieves 64.3% on HotpotQA (full-wiki), which is on par of the SoTA model. Our study offers insights into the future roadmap for combining RAG with long-context LLMs.