MagicMan: Generative Novel View Synthesis of Humans with 3D-Aware Diffusion and Iterative Refinement

Xu He, Xiaoyu Li, Di Kang, Jiangnan Ye, Chaopeng Zhang, Liyang Chen, Xiangjun Gao, Han Zhang, Zhiyong Wu, Haolin Zhuang

2024-08-27

Summary

This paper introduces MagicMan, a human-specific multi-view diffusion model that generates high-quality novel-view images of a person from a single reference image, with the aim of improving downstream 3D human reconstruction.

What's the problem?

Current methods for reconstructing humans from a single image generalize poorly, either because they lack sufficient training data or because they lack comprehensive multi-view knowledge and so produce 3D inconsistencies. This makes it hard to produce accurate, realistic results from just one view.

What's the solution?

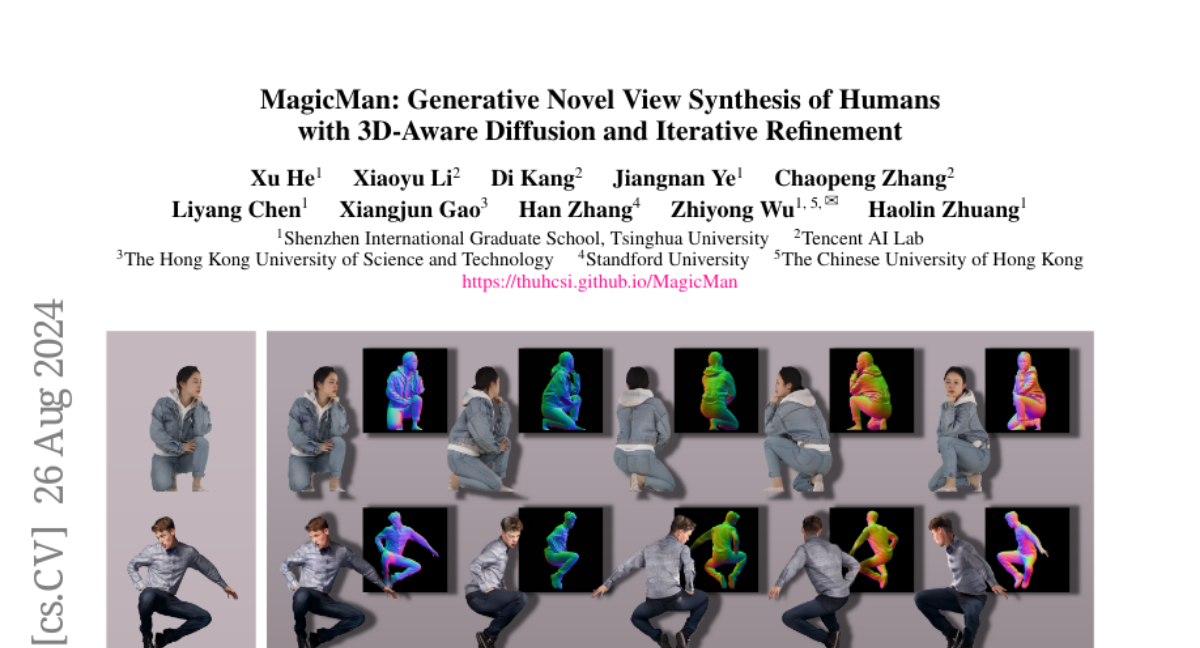

MagicMan combines a pre-trained 2D diffusion model, used as a generative prior, with the parametric 3D body model SMPL-X, used as a body prior. Hybrid multi-view attention shares information across views, and a geometry-aware dual branch generates RGB images and normal maps concurrently so that geometry cues reinforce consistency. An iterative refinement strategy then progressively corrects the SMPL-X estimate, which can be inaccurate and conflict with the reference image, while improving the quality and consistency of the generated views.
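To make the idea of sharing information across views concrete, here is a minimal sketch of plain cross-view self-attention, in which every token from every view attends to all tokens of all views. It is not MagicMan's hybrid multi-view attention, whose exact design is not described here. The function names, the absence of learned projections, and the toy inputs are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_view_attention(views):
    """Self-attention over the tokens of all views at once.

    views: list of V views, each a list of N tokens, each token a list of D floats.
    Queries, keys, and values are the raw tokens (no learned projections),
    which is a simplification made for this sketch.
    """
    tokens = [tok for view in views for tok in view]  # flatten to V*N tokens
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    mixed = []
    for query in tokens:
        # Scaled dot-product score of this query against every token in every view.
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights = softmax(scores)
        # Weighted average of all tokens, computed per feature dimension.
        mixed.append([sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)])
    n = len(views[0])
    # Regroup the flat token list back into per-view lists.
    return [mixed[i:i + n] for i in range(0, len(mixed), n)]

# Hypothetical toy input: 2 views, 2 tokens per view, 2 features per token.
views = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [0.5, 0.5]],
]
out = cross_view_attention(views)
print(len(out), len(out[0]), len(out[0][0]))
```

Because each output token is a weighted average over tokens from all views, features seen in one view can influence every other view. The cost grows quadratically with the total number of tokens, which is presumably why an efficient variant is needed when many views are generated.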

Why does it matter?

This research is significant because it enhances our ability to create realistic 3D representations of humans from limited input. This can be useful in various fields such as video games, movies, and virtual reality, where accurate human models are essential for storytelling and interaction.

Abstract

Existing works in single-image human reconstruction suffer from weak generalizability due to insufficient training data, or from 3D inconsistencies due to a lack of comprehensive multi-view knowledge. In this paper, we introduce MagicMan, a human-specific multi-view diffusion model designed to generate high-quality novel view images from a single reference image. At its core, we leverage a pre-trained 2D diffusion model as the generative prior for generalizability, with the parametric SMPL-X model as the 3D body prior to promote 3D awareness. To tackle the critical challenge of maintaining consistency while achieving dense multi-view generation for improved 3D human reconstruction, we first introduce hybrid multi-view attention to facilitate both efficient and thorough information interchange across different views. Additionally, we present a geometry-aware dual branch to perform concurrent generation in both RGB and normal domains, further enhancing consistency via geometry cues. Last but not least, to address ill-shaped issues arising from inaccurate SMPL-X estimation that conflicts with the reference image, we propose a novel iterative refinement strategy, which progressively optimizes SMPL-X accuracy while enhancing the quality and consistency of the generated multi-views. Extensive experimental results demonstrate that our method significantly outperforms existing approaches in both novel view synthesis and subsequent 3D human reconstruction tasks.
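The iterative refinement strategy described in the abstract can be pictured as an alternating loop: generate views from the current body estimate, re-fit the body estimate to those views, and repeat. The sketch below shows only that control flow. The order of the two steps, the iteration count, and every function here are assumptions, and the toy stand-ins replace the diffusion model and SMPL-X fitting with simple arithmetic on a two-number "shape" vector.

```python
def refine_body_estimate(initial_params, generate_views, fit_params, num_iters=3):
    """Alternate between generating views and re-fitting the body parameters.

    generate_views(params) -> views conditioned on the current body estimate.
    fit_params(params, views) -> updated body parameters.
    Both callables are hypothetical placeholders, not the paper's components.
    """
    params = list(initial_params)
    views = generate_views(params)
    for _ in range(num_iters):
        params = fit_params(params, views)  # update the body estimate from the current views
        views = generate_views(params)      # regenerate with the improved estimate
    return params, views

# Toy stand-ins (assumptions): the reference image is reduced to a fixed vector,
# and a "generated view" is an even blend of the reference and the body prior.
REFERENCE = [1.0, 2.0]

def toy_generate(params):
    return [[0.5 * r + 0.5 * p for r, p in zip(REFERENCE, params)]]

def toy_fit(params, views):
    # Move the parameters to the mean of the generated views.
    n = len(views)
    return [sum(v[d] for v in views) / n for d in range(len(params))]

params, views = refine_body_estimate([0.0, 0.0], toy_generate, toy_fit, num_iters=3)
print(params, views)
```

In this toy setting the gap between the body estimate and the reference halves on every iteration, which mirrors the intended behavior: a poor initial estimate and the views generated from it improve together rather than in a single pass.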