MagicTailor: Component-Controllable Personalization in Text-to-Image Diffusion Models

Donghao Zhou, Jiancheng Huang, Jinbin Bai, Jiaze Wang, Hao Chen, Guangyong Chen, Xiaowei Hu, Pheng-Ann Heng

2024-10-21

Summary

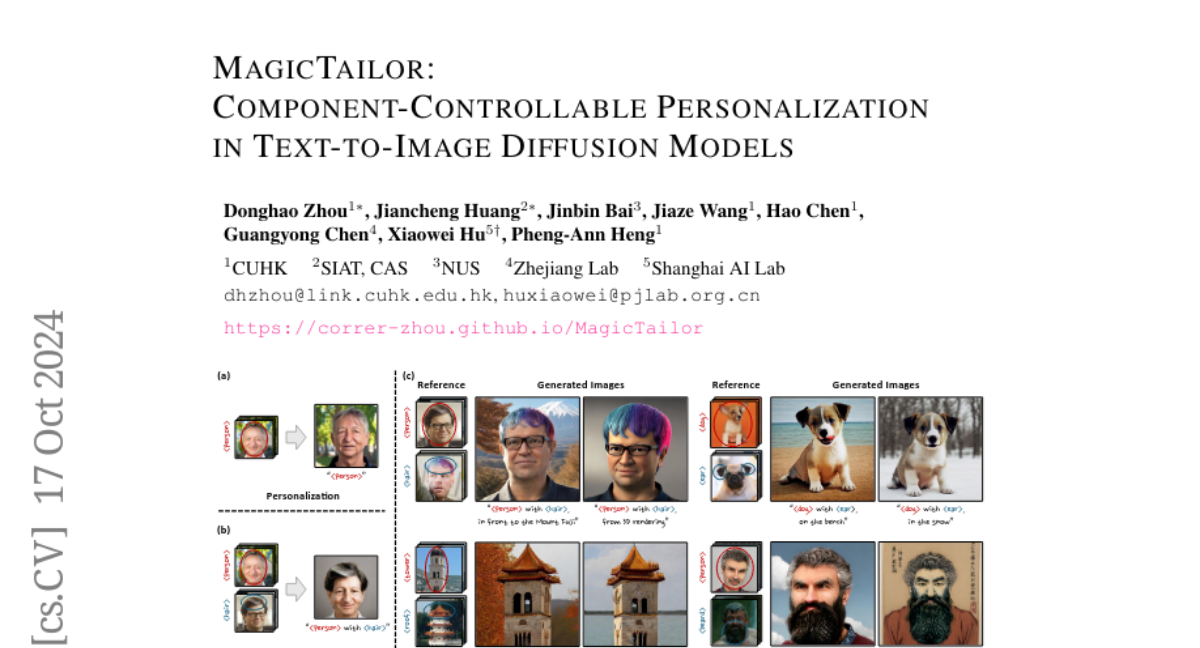

This paper introduces MagicTailor, a framework that lets users personalize a visual concept from reference images while reconfiguring specific components of that concept, giving finer control over the generated output.

What's the problem?

While recent advances in text-to-image (T2I) models make it easy to create high-quality images from text prompts, these models struggle to give users precise control over specific visual elements. Existing personalization methods can learn a concept, such as a particular person or object, from a few reference images, but they reproduce that concept as a whole. They cannot replace just one of its components with a version taken from another reference without causing unwanted changes elsewhere in the image.

What's the solution?

To tackle this issue, the authors introduce a new task called component-controllable personalization, which lets users reconfigure specific components of a concept while keeping the rest of the concept intact, and they propose MagicTailor to solve it. The task poses two main challenges: semantic pollution, where unwanted visual elements corrupt the personalized concept, and semantic imbalance, where the model learns the concept and the component disproportionately, capturing one too well and the other not well enough. MagicTailor addresses these with Dynamic Masked Degradation (DM-Deg), which dynamically perturbs unwanted visual content, and Dual-Stream Balancing (DS-Bal), which balances learning between the concept and the component. Together, these techniques allow more precise customization when generating images.
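To make the DM-Deg idea more concrete, here is a minimal Python sketch under stated assumptions: each reference image comes with a binary mask marking the desired concept or component, regions outside the mask are perturbed with Gaussian noise, and the noise strength decays over training. The schedule `degradation_strength`, its parameters `alpha_init` and `gamma`, and the clipping to [0, 1] are hypothetical choices for illustration, not the paper's exact formulation.

```python
import numpy as np


def degradation_strength(step: int, total_steps: int,
                         alpha_init: float = 0.5, gamma: float = 32.0) -> float:
    """Hypothetical decaying schedule: full strength at step 0, zero at the last step."""
    progress = step / total_steps
    return alpha_init * (1.0 - progress ** gamma)


def masked_degrade(image: np.ndarray, mask: np.ndarray, step: int, total_steps: int,
                   rng: np.random.Generator) -> np.ndarray:
    """Perturb only the regions outside the mask (Gaussian noise is an assumption).

    image: float array of shape (H, W, C) with values in [0, 1]
    mask:  float array of shape (H, W); 1 marks desired content, 0 marks the rest
    """
    alpha = degradation_strength(step, total_steps)
    noise = rng.standard_normal(image.shape)
    m = mask[..., None]  # broadcast the mask across color channels
    # Keep masked pixels as-is; add scaled noise everywhere else.
    degraded = image * m + (image + alpha * noise) * (1.0 - m)
    return np.clip(degraded, 0.0, 1.0)


rng = np.random.default_rng(0)
image = np.full((4, 4, 3), 0.5)
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1.0  # the "desired" region
early = masked_degrade(image, mask, step=0, total_steps=100, rng=rng)
late = masked_degrade(image, mask, step=100, total_steps=100, rng=rng)
```

In `early`, only the 2×2 center keeps its original value of 0.5 while the surrounding pixels are noised; in `late`, the strength has decayed to zero and the image passes through unchanged. With a large `gamma`, this particular schedule stays close to `alpha_init` for most of training and drops sharply near the end. A decaying schedule is only one plausible reading of "dynamic", and other schedules would fit the same structure.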

Why does it matter?

This research is significant because it gives users finer control over AI-generated images, making it possible to create more personalized and detailed visuals. This could be especially useful in fields like fashion design, gaming, and character creation, where control over specific details directly shapes the final product.

Abstract

Recent advancements in text-to-image (T2I) diffusion models have enabled the creation of high-quality images from text prompts, but they still struggle to generate images with precise control over specific visual concepts. Existing approaches can replicate a given concept by learning from reference images, yet they lack the flexibility for fine-grained customization of individual components within the concept. In this paper, we introduce component-controllable personalization, a novel task that pushes the boundaries of T2I models by allowing users to reconfigure specific components when personalizing visual concepts. This task is particularly challenging due to two primary obstacles: semantic pollution, where unwanted visual elements corrupt the personalized concept, and semantic imbalance, which causes disproportionate learning of the concept and component. To overcome these challenges, we design MagicTailor, an innovative framework that leverages Dynamic Masked Degradation (DM-Deg) to dynamically perturb undesired visual semantics and Dual-Stream Balancing (DS-Bal) to establish a balanced learning paradigm for desired visual semantics. Extensive comparisons, ablations, and analyses demonstrate that MagicTailor not only excels in this challenging task but also holds significant promise for practical applications, paving the way for more nuanced and creative image generation.
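The abstract does not spell out how DS-Bal achieves balanced learning. The sketch below shows one possible two-stream scheme. It assumes that an online model focuses each update on whichever item (concept or component) currently has the largest loss, a min-max strategy, and that a momentum copy of the weights, updated as an exponential moving average, provides a preservation signal for the remaining items. All function names, the weight `lam`, and the EMA rate `beta` are hypothetical. Per-item losses are passed in as precomputed scalars so the example stays self-contained.

```python
from typing import Dict, List, Tuple


def select_hardest(losses: List[float]) -> int:
    """Min-max step (assumed): index of the item with the largest current loss."""
    return max(range(len(losses)), key=lambda i: losses[i])


def ema_update(momentum: Dict[str, float], online: Dict[str, float],
               beta: float = 0.99) -> Dict[str, float]:
    """Momentum stream (assumed): exponential moving average of the online weights."""
    return {k: beta * momentum[k] + (1.0 - beta) * online[k] for k in momentum}


def balanced_loss(task_losses: List[float], preservation_losses: List[float],
                  lam: float = 0.2) -> Tuple[float, int]:
    """Hypothetical combined objective for one training step.

    task_losses[i]:         online-stream loss for item i (concept or component)
    preservation_losses[i]: distance between online and momentum outputs for item i
    Only the hardest item is optimized directly; the others are regularized
    toward the momentum stream so they are not forgotten.
    """
    hardest = select_hardest(task_losses)
    others = [p for i, p in enumerate(preservation_losses) if i != hardest]
    reg = sum(others) / len(others) if others else 0.0
    return task_losses[hardest] + lam * reg, hardest


loss, idx = balanced_loss([0.1, 0.4], [0.05, 0.3])
momentum = ema_update({"w": 0.0}, {"w": 1.0}, beta=0.9)
```

Here the second item has the larger loss (0.4), so it drives the update, while the first item is only softly preserved through the momentum term. The combined loss is about 0.41. The design choice is to spend each step on the item that is currently learned worst, so neither the concept nor the component dominates training.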