Masked Scene Modeling: Narrowing the Gap Between Supervised and Self-Supervised Learning in 3D Scene Understanding

Pedro Hermosilla, Christian Stippel, Leon Sick

2025-04-10

Summary

This paper talks about a new way to teach AI models to understand 3D scenes without needing labeled data, by hiding parts of the scene and making the AI guess what’s missing, similar to how you might learn by solving puzzles with missing pieces.

What's the problem?

Current AI models for 3D scenes need lots of labeled data to learn, and self-trained models without labels are usually only good as a starting point, not for real tasks like recognizing objects or layouts.

What's the solution?

The paper introduces a training method where the AI learns by reconstructing hidden parts of 3D scenes using clues from visible areas, combined with a new testing system to check how well the AI understands scenes without extra training.

Why it matters?

This helps AI systems learn 3D environments more efficiently, reducing the need for expensive labeled data and improving applications like robot navigation, AR/VR, and autonomous driving.

Abstract

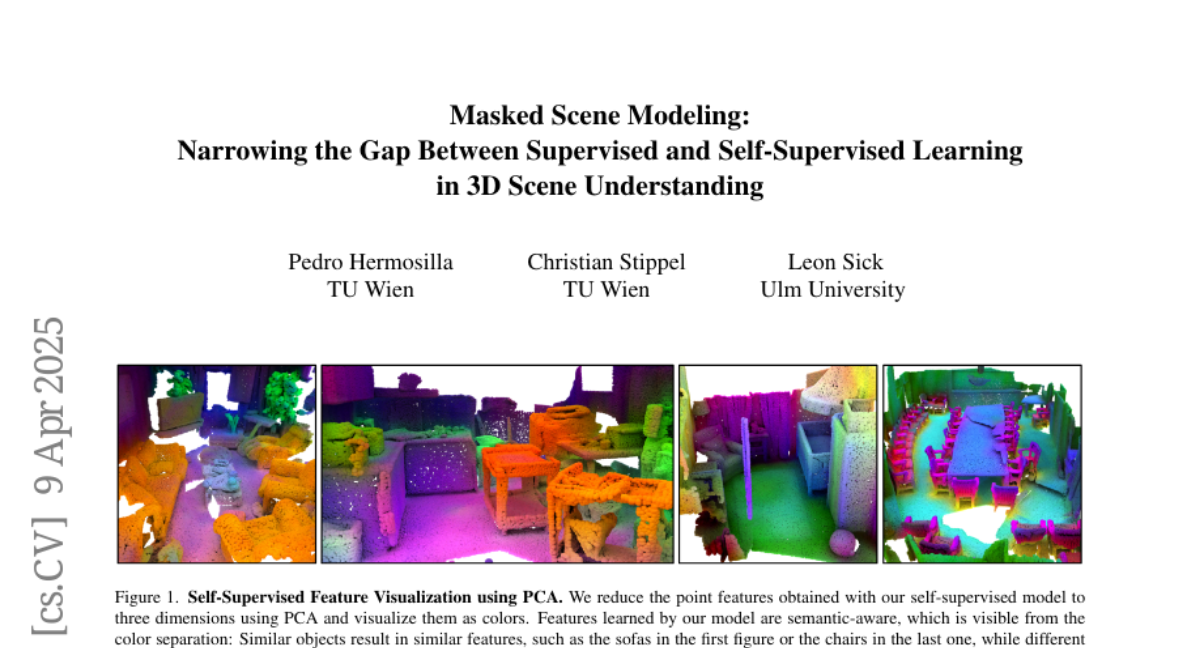

Self-supervised learning has transformed 2D computer vision by enabling models trained on large, unannotated datasets to provide versatile off-the-shelf features that perform similarly to models trained with labels. However, in 3D scene understanding, self-supervised methods are typically only used as a weight initialization step for task-specific fine-tuning, limiting their utility for general-purpose feature extraction. This paper addresses this shortcoming by proposing a robust evaluation protocol specifically designed to assess the quality of self-supervised features for 3D scene understanding. Our protocol uses multi-resolution feature sampling of hierarchical models to create rich point-level representations that capture the semantic capabilities of the model and, hence, are suitable for evaluation with linear probing and nearest-neighbor methods. Furthermore, we introduce the first self-supervised model that performs similarly to supervised models when only off-the-shelf features are used in a linear probing setup. In particular, our model is trained natively in 3D with a novel self-supervised approach based on a Masked Scene Modeling objective, which reconstructs deep features of masked patches in a bottom-up manner and is specifically tailored to hierarchical 3D models. Our experiments not only demonstrate that our method achieves competitive performance to supervised models, but also surpasses existing self-supervised approaches by a large margin. The model and training code can be found at our Github repository (https://github.com/phermosilla/msm).