mDPO: Conditional Preference Optimization for Multimodal Large Language Models

Fei Wang, Wenxuan Zhou, James Y. Huang, Nan Xu, Sheng Zhang, Hoifung Poon, Muhao Chen

2024-06-18

Summary

This paper introduces mDPO, a new method for improving how large language models (LLMs) understand and respond to multimodal inputs, which include both text and images. It aims to ensure that these models consider both types of information equally when making decisions.

What's the problem?

Many existing LLMs struggle when it comes to processing multimodal data because they often prioritize language over images. This can lead to problems where the model doesn't fully understand the context provided by images, resulting in less accurate or relevant responses. Additionally, when models are trained using traditional methods, they may end up giving lower chances to correct answers, even if they are appropriate.

What's the solution?

To solve this issue, the authors developed mDPO, which stands for multimodal Direct Preference Optimization. This method encourages the model to balance its focus between language and image preferences during training. They also introduced a 'reward anchor' that ensures the model maintains a positive likelihood for correct responses, preventing it from undervaluing these responses. Through experiments with different multimodal LLMs, mDPO was shown to effectively reduce errors known as 'hallucinations,' where models generate incorrect or nonsensical outputs.

Why it matters?

This research is significant because it enhances the ability of AI models to process and understand information from multiple sources at once. By improving how these models respond to combined text and images, mDPO can lead to more accurate and reliable AI applications in areas like virtual assistants, content creation, and interactive media. This advancement helps bridge the gap between how humans communicate (using both words and visuals) and how AI understands that communication.

Abstract

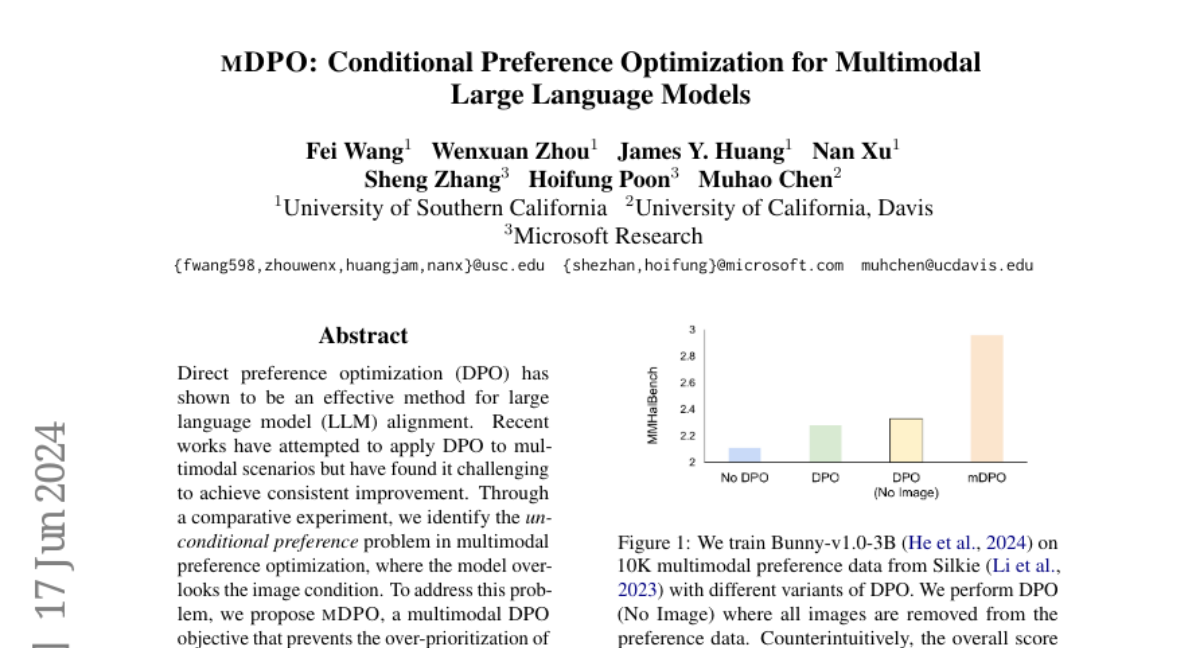

Direct preference optimization (DPO) has shown to be an effective method for large language model (LLM) alignment. Recent works have attempted to apply DPO to multimodal scenarios but have found it challenging to achieve consistent improvement. Through a comparative experiment, we identify the unconditional preference problem in multimodal preference optimization, where the model overlooks the image condition. To address this problem, we propose mDPO, a multimodal DPO objective that prevents the over-prioritization of language-only preferences by also optimizing image preference. Moreover, we introduce a reward anchor that forces the reward to be positive for chosen responses, thereby avoiding the decrease in their likelihood -- an intrinsic problem of relative preference optimization. Experiments on two multimodal LLMs of different sizes and three widely used benchmarks demonstrate that mDPO effectively addresses the unconditional preference problem in multimodal preference optimization and significantly improves model performance, particularly in reducing hallucination.