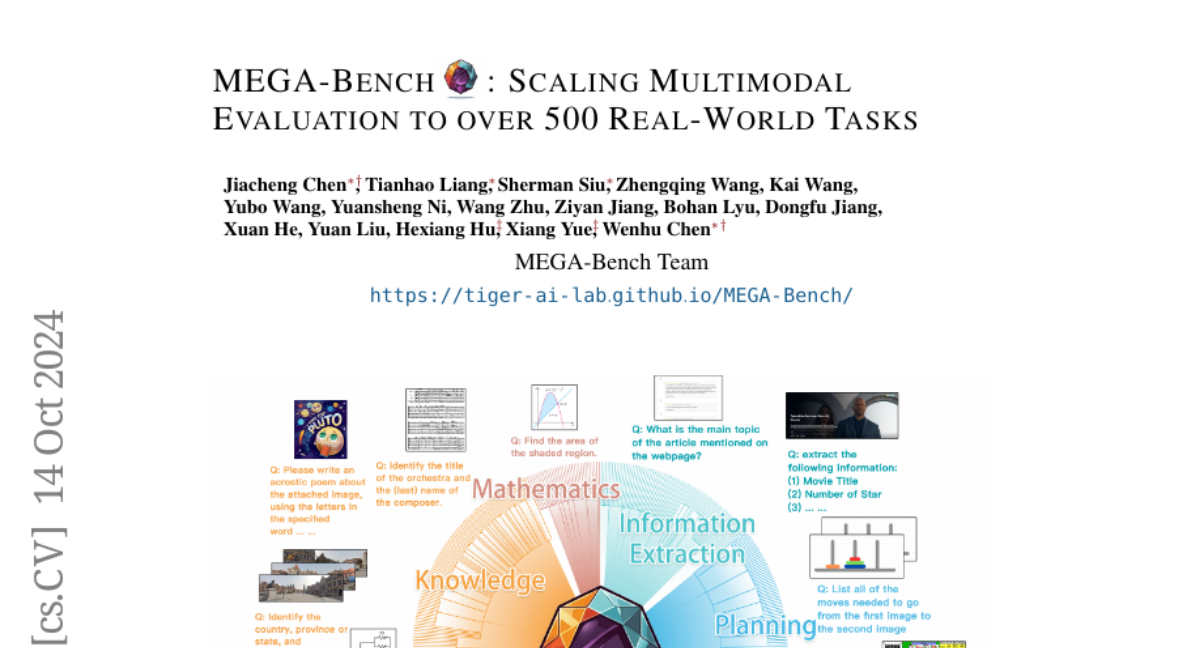

MEGA-Bench: Scaling Multimodal Evaluation to over 500 Real-World Tasks

Jiacheng Chen, Tianhao Liang, Sherman Siu, Zhengqing Wang, Kai Wang, Yubo Wang, Yuansheng Ni, Wang Zhu, Ziyan Jiang, Bohan Lyu, Dongfu Jiang, Xuan He, Yuan Liu, Hexiang Hu, Xiang Yue, Wenhu Chen

2024-10-15

Summary

This paper introduces MEGA-Bench, a comprehensive evaluation tool designed to test how well models can handle over 500 real-world tasks that involve both text and images.

What's the problem?

As AI technology becomes more advanced, it's important to evaluate how well these models can perform in real-world situations where they need to understand and generate content that includes both text and images. However, existing evaluation methods are limited in size and scope, making it difficult to accurately measure model performance across a wide range of tasks.

What's the solution?

MEGA-Bench addresses this problem by providing a large set of 505 realistic tasks that cover various multimodal scenarios. It includes over 8,000 samples and allows for different types of outputs, such as numbers, phrases, code, and more. The benchmark also features more than 40 metrics to evaluate these tasks thoroughly. Unlike other benchmarks that provide a single score, MEGA-Bench offers detailed reports on model performance across multiple dimensions, helping users understand the strengths and weaknesses of different models.

Why it matters?

This research is significant because it sets a new standard for evaluating AI models in multimodal tasks. By providing a diverse and comprehensive evaluation framework, MEGA-Bench can help researchers and developers improve their models, making them more effective in real-world applications where understanding both text and images is crucial.

Abstract

We present MEGA-Bench, an evaluation suite that scales multimodal evaluation to over 500 real-world tasks, to address the highly heterogeneous daily use cases of end users. Our objective is to optimize for a set of high-quality data samples that cover a highly diverse and rich set of multimodal tasks, while enabling cost-effective and accurate model evaluation. In particular, we collected 505 realistic tasks encompassing over 8,000 samples from 16 expert annotators to extensively cover the multimodal task space. Instead of unifying these problems into standard multi-choice questions (like MMMU, MMBench, and MMT-Bench), we embrace a wide range of output formats like numbers, phrases, code, \LaTeX, coordinates, JSON, free-form, etc. To accommodate these formats, we developed over 40 metrics to evaluate these tasks. Unlike existing benchmarks, MEGA-Bench offers a fine-grained capability report across multiple dimensions (e.g., application, input type, output format, skill), allowing users to interact with and visualize model capabilities in depth. We evaluate a wide variety of frontier vision-language models on MEGA-Bench to understand their capabilities across these dimensions.