MeshAnything: Artist-Created Mesh Generation with Autoregressive Transformers

Yiwen Chen, Tong He, Di Huang, Weicai Ye, Sijin Chen, Jiaxiang Tang, Xin Chen, Zhongang Cai, Lei Yang, Gang Yu, Guosheng Lin, Chi Zhang

2024-06-18

Summary

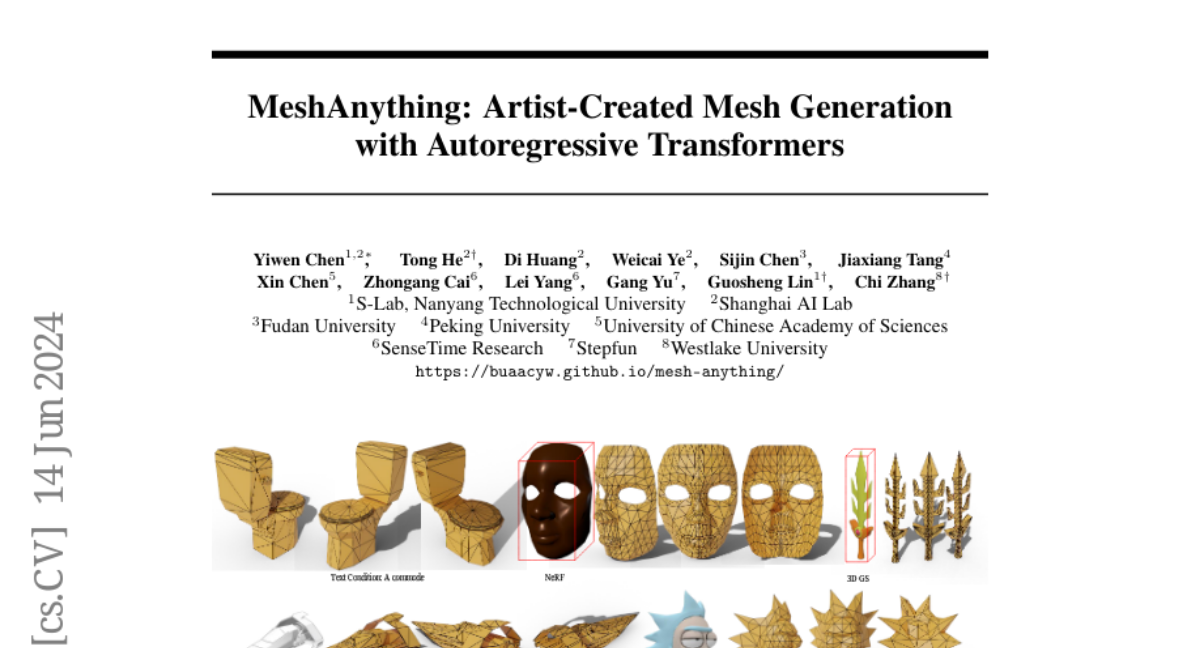

This paper introduces MeshAnything, a new model designed to create high-quality 3D meshes that resemble those made by human artists. It focuses on improving how 3D assets are converted into meshes, making the process more efficient and effective.

What's the problem?

Many 3D objects created through computer processes need to be turned into meshes for use in various applications, like video games or animations. However, the current methods for creating these meshes often result in lower quality compared to those made by artists. These methods tend to use too many faces (the flat surfaces that make up a 3D shape) and ignore important details about the shape, leading to inefficiencies and extra work needed to fix the meshes afterward.

What's the solution?

To solve this problem, the authors developed MeshAnything, which treats the creation of meshes as a generation task rather than just a conversion task. This model uses advanced techniques, including a VQ-VAE (a type of neural network) and a shape-conditioned transformer, to learn how to generate high-quality meshes based on specific shapes. By doing this, MeshAnything produces meshes with significantly fewer faces while maintaining high precision and quality, making it easier and faster to use in 3D applications.

Why it matters?

This research is important because it shows how technology can improve the way we create and use 3D models. By generating better quality meshes that are more efficient to store and render, MeshAnything can help streamline the production process in industries like gaming, film, and virtual reality. This advancement could lead to more realistic and visually appealing 3D content that enhances user experiences.

Abstract

Recently, 3D assets created via reconstruction and generation have matched the quality of manually crafted assets, highlighting their potential for replacement. However, this potential is largely unrealized because these assets always need to be converted to meshes for 3D industry applications, and the meshes produced by current mesh extraction methods are significantly inferior to Artist-Created Meshes (AMs), i.e., meshes created by human artists. Specifically, current mesh extraction methods rely on dense faces and ignore geometric features, leading to inefficiencies, complicated post-processing, and lower representation quality. To address these issues, we introduce MeshAnything, a model that treats mesh extraction as a generation problem, producing AMs aligned with specified shapes. By converting 3D assets in any 3D representation into AMs, MeshAnything can be integrated with various 3D asset production methods, thereby enhancing their application across the 3D industry. The architecture of MeshAnything comprises a VQ-VAE and a shape-conditioned decoder-only transformer. We first learn a mesh vocabulary using the VQ-VAE, then train the shape-conditioned decoder-only transformer on this vocabulary for shape-conditioned autoregressive mesh generation. Our extensive experiments show that our method generates AMs with hundreds of times fewer faces, significantly improving storage, rendering, and simulation efficiencies, while achieving precision comparable to previous methods.