MIMO: Controllable Character Video Synthesis with Spatial Decomposed Modeling

Yifang Men, Yuan Yao, Miaomiao Cui, Liefeng Bo

2024-09-25

Summary

This paper presents MIMO, a framework for synthesizing realistic videos of animatable characters that interact with real-world scenes. Users control three attributes (the character, its motion, and the scene) through simple inputs.

What's the problem?

Creating lifelike character videos is hard. 3D methods typically require multi-view captures and per-case training, which is time-consuming and makes it impractical to model arbitrary characters quickly. Recent 2D methods built on pre-trained diffusion models remove that requirement, but they still struggle to generalize to unseen poses and to handle interactions between the character and the scene.

What's the solution?

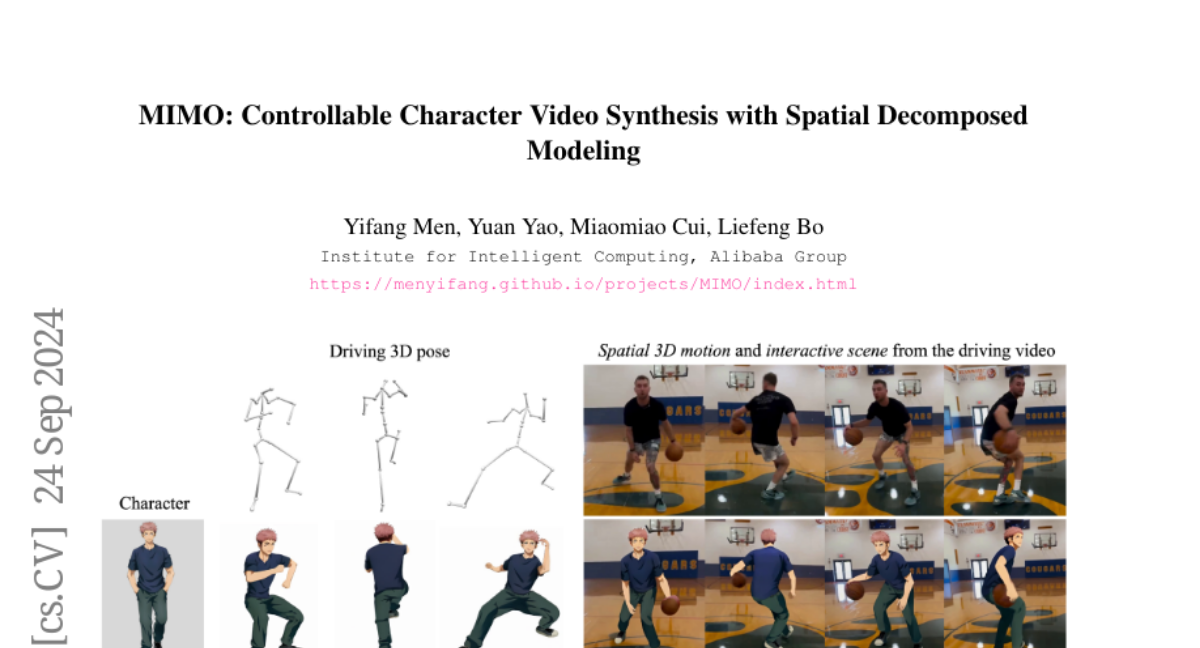

To overcome these challenges, MIMO takes ordinary 2D video as input but models it with the 3D structure of the scene in mind. It lifts frame pixels into 3D using monocular depth estimation, then splits each video clip into three layered components based on depth: the main human, the underlying scene, and any floating occlusions (content in front of the character). These components are encoded as an identity code, a motion code, and a scene code, which serve as control signals for synthesis. Users can therefore change a character's identity, motion, or scene independently, and the generated character interacts plausibly with its environment.
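The depth-based split described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `decompose_by_depth`, the precomputed `human_mask`, and the rule "any non-human pixel closer than the nearest human pixel is an occlusion" are assumptions made for illustration. In practice, the depth map would come from a monocular depth estimator and the mask from a segmentation or tracking model.

```python
from typing import List, Tuple

Mask = List[List[bool]]
DepthMap = List[List[float]]


def decompose_by_depth(depth: DepthMap, human_mask: Mask) -> Tuple[Mask, Mask, Mask]:
    """Split one frame into human, occlusion, and scene masks.

    Assumptions (hypothetical, not from the paper): smaller depth means
    closer to the camera, and any non-human pixel closer than the nearest
    human pixel counts as a floating occlusion. Everything else is scene.
    """
    # Collect the depth of every pixel that the mask marks as human.
    human_depths = [
        d
        for depth_row, mask_row in zip(depth, human_mask)
        for d, is_human in zip(depth_row, mask_row)
        if is_human
    ]
    if not human_depths:
        raise ValueError("human_mask selects no pixels")
    nearest_human = min(human_depths)

    # Occlusion layer: non-human pixels in front of the whole human.
    occlusion = [
        [(not h) and d < nearest_human for d, h in zip(depth_row, mask_row)]
        for depth_row, mask_row in zip(depth, human_mask)
    ]
    # Scene layer: whatever is neither human nor occlusion.
    scene = [
        [(not h) and (not o) for h, o in zip(mask_row, occ_row)]
        for mask_row, occ_row in zip(human_mask, occlusion)
    ]
    human = [list(row) for row in human_mask]
    return human, occlusion, scene


# Toy 2x3 frame: depth in metres, with the human in the middle column.
depth = [
    [5.0, 2.0, 0.8],
    [5.0, 2.1, 5.0],
]
human_mask = [
    [False, True, False],
    [False, True, False],
]
human, occlusion, scene = decompose_by_depth(depth, human_mask)
```

In this toy frame, the top-right pixel (0.8 m) lies in front of the human and lands in the occlusion layer, while the remaining non-human pixels fall into the scene layer. Every pixel belongs to exactly one layer, which is what lets each layer be encoded and edited separately.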

Why does it matter?

This research is significant because it enhances the ability to generate high-quality character animations for various applications, including video games, movies, and virtual reality. By simplifying the process of creating complex animations while maintaining realism, MIMO opens up new possibilities for interactive storytelling and immersive experiences in digital media.

Abstract

Character video synthesis aims to produce realistic videos of animatable characters within lifelike scenes. It is a fundamental problem in the computer vision and graphics communities. 3D works typically require multi-view captures for per-case training, which severely limits their applicability to modeling arbitrary characters in a short time. Recent 2D methods break this limitation via pre-trained diffusion models, but they struggle with pose generality and scene interaction. To this end, we propose MIMO, a novel framework which can not only synthesize character videos with controllable attributes (i.e., character, motion and scene) provided by simple user inputs, but also simultaneously achieve advanced scalability to arbitrary characters, generality to novel 3D motions, and applicability to interactive real-world scenes in a unified framework. The core idea is to encode the 2D video into compact spatial codes, considering the inherent 3D nature of video occurrence. Concretely, we lift the 2D frame pixels into 3D using monocular depth estimators, and decompose the video clip into three spatial components (i.e., main human, underlying scene, and floating occlusion) in hierarchical layers based on the 3D depth. These components are further encoded into a canonical identity code, a structured motion code and a full scene code, which are utilized as control signals for the synthesis process. The design of spatial decomposed modeling enables flexible user control, complex motion expression, as well as 3D-aware synthesis for scene interactions. Experimental results demonstrate the effectiveness and robustness of the proposed method.