Mixture-of-Subspaces in Low-Rank Adaptation

Taiqiang Wu, Jiahao Wang, Zhe Zhao, Ngai Wong

2024-06-19

Summary

This paper introduces Mixture-of-Subspaces LoRA (MoSLoRA), a variant of Low-Rank Adaptation (LoRA) for adapting large models to specific tasks. It adds a learnable mixer that fuses the subspaces of the LoRA weights, which improves performance while staying computationally efficient and easy to implement.

What's the problem?

When adapting large language models (LLMs) for specific tasks, full fine-tuning can be slow and resource-intensive. Low-Rank Adaptation (LoRA) reduces this cost by keeping the pretrained weights frozen and learning a low-rank update, expressed as the product of two small matrices. Viewed as a collection of subspaces, however, plain LoRA combines them in one fixed way, which can limit how well it performs.

What's the solution?

To improve this, the authors first decompose the LoRA weights into subspaces and observe that simply mixing them already improves performance. They show that this mixing is equivalent to fusing the subspaces with a fixed mixer. MoSLoRA goes one step further: instead of fixing the mixer, it learns the mixer jointly with the original LoRA weights. In the paper's experiments, this consistently outperforms LoRA on commonsense reasoning, visual instruction tuning, and subject-driven text-to-image generation.
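
The idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the names `A`, `B`, `M`, both helper functions, and the toy dimensions are assumptions made for this example, and random values stand in for trained weights.

```python
import numpy as np


def lora_delta(A, B):
    """Vanilla LoRA update: product of the two low-rank factors."""
    return B @ A


def moslora_delta(A, B, M):
    """Mixer-style update: an r x r matrix M sits between the two factors."""
    return B @ M @ A


rng = np.random.default_rng(0)
d_out, d_in, r = 6, 8, 4             # toy sizes, chosen only for illustration

A = rng.normal(size=(r, d_in))       # down-projection, shape (r, d_in)
B = rng.normal(size=(d_out, r))      # up-projection, shape (d_out, r)

# With an identity mixer, the mixed update reduces to vanilla LoRA.
identity_matches = np.allclose(moslora_delta(A, B, np.eye(r)), lora_delta(A, B))

# A non-identity mixer; random here, whereas in training it would be learned.
M = rng.normal(size=(r, r))
delta = moslora_delta(A, B, M)

# Subspace view: B @ M @ A is a weighted sum over all pairs of rank-1
# subspaces, with M[i, j] weighting column i of B against row j of A.
mixed = sum(
    M[i, j] * np.outer(B[:, i], A[j, :])
    for i in range(r)
    for j in range(r)
)
subspace_view_matches = np.allclose(delta, mixed)

extra_params = r * r                 # size of the mixer in this sketch
print(identity_matches, subspace_view_matches, delta.shape, extra_params)
```

In this sketch the mixer adds only `r * r` parameters on top of the two factors, which is small whenever the rank `r` is much smaller than the layer dimensions.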

Why does it matter?

This research matters because it shows that a small change to LoRA can improve adaptation quality while remaining computationally efficient. Because MoSLoRA outperforms LoRA on language, multimodal, and diffusion models, it could benefit applications ranging from natural language processing to image generation.

Abstract

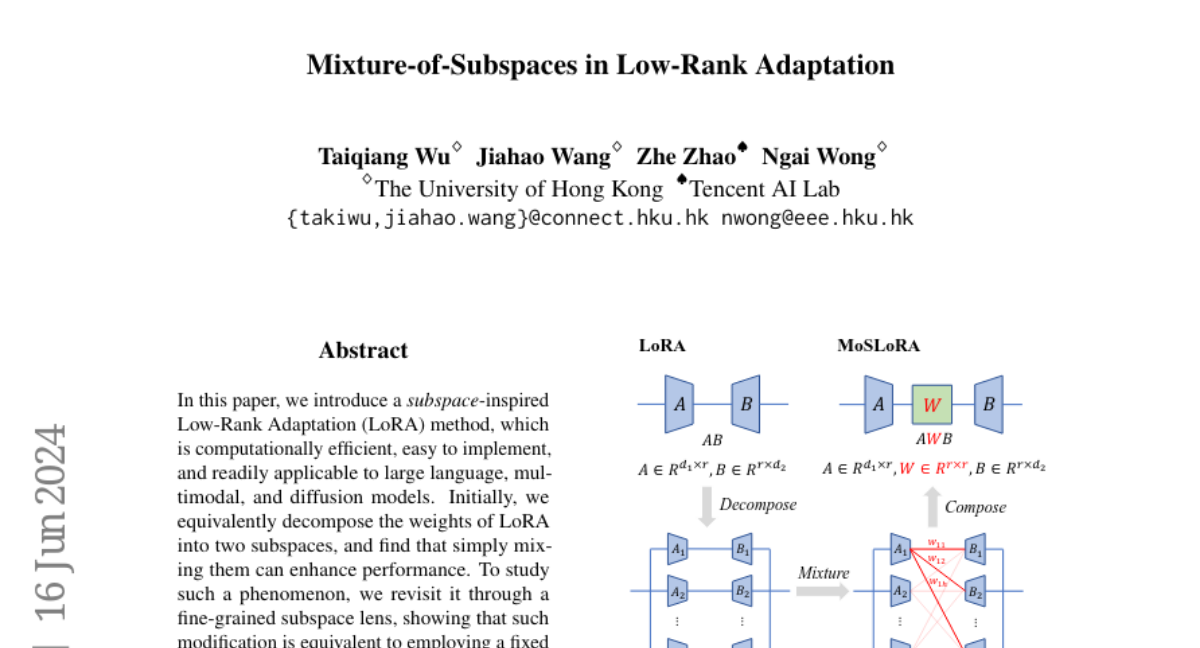

In this paper, we introduce a subspace-inspired Low-Rank Adaptation (LoRA) method, which is computationally efficient, easy to implement, and readily applicable to large language, multimodal, and diffusion models. Initially, we equivalently decompose the weights of LoRA into two subspaces, and find that simply mixing them can enhance performance. To study such a phenomenon, we revisit it through a fine-grained subspace lens, showing that such modification is equivalent to employing a fixed mixer to fuse the subspaces. To be more flexible, we jointly learn the mixer with the original LoRA weights, and term the method Mixture-of-Subspaces LoRA (MoSLoRA). MoSLoRA consistently outperforms LoRA on tasks in different modalities, including commonsense reasoning, visual instruction tuning, and subject-driven text-to-image generation, demonstrating its effectiveness and robustness. Code is available at https://github.com/wutaiqiang/MoSLoRA.
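
The two-subspace decomposition and the "fixed mixer" view can be written out as a short worked equation. The notation here is an assumption made for illustration and may differ from the paper's: the LoRA update is $\Delta W = BA$ with $B \in \mathbb{R}^{d_{\text{out}} \times r}$ and $A \in \mathbb{R}^{r \times d_{\text{in}}}$, $B$ is split column-wise into $[B_1 \; B_2]$, $A$ is split row-wise into $A_1$ and $A_2$, and $I$ is the identity of size $r/2$.

$$
\begin{aligned}
\text{LoRA:} \quad & BA = B_1 A_1 + B_2 A_2
  = \begin{bmatrix} B_1 & B_2 \end{bmatrix}
    \begin{bmatrix} I & 0 \\ 0 & I \end{bmatrix}
    \begin{bmatrix} A_1 \\ A_2 \end{bmatrix} \\
\text{Two-subspace mixing:} \quad & (B_1 + B_2)(A_1 + A_2)
  = \begin{bmatrix} B_1 & B_2 \end{bmatrix}
    \begin{bmatrix} I & I \\ I & I \end{bmatrix}
    \begin{bmatrix} A_1 \\ A_2 \end{bmatrix} \\
\text{Learned mixer:} \quad & \Delta W = B M A, \qquad M \in \mathbb{R}^{r \times r} \text{ trainable}
\end{aligned}
$$

Under these assumptions, the first two cases differ only in the fixed matrix placed between the factors, and the learned-mixer case replaces that fixed matrix with a trainable $M$.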