Model Internals-based Answer Attribution for Trustworthy Retrieval-Augmented Generation

Jirui Qi, Gabriele Sarti, Raquel Fernández, Arianna Bisazza

2024-06-21

Summary

This paper introduces MIRAGE, a new method that helps large language models (LLMs) provide trustworthy answers by clearly showing where their information comes from when answering questions.

What's the problem?

In question answering systems that use retrieval-augmented generation (RAG), it's important to verify the answers provided by models. However, many LLMs struggle with self-citation, meaning they often fail to properly reference the sources of their information. They might cite sources that don't exist or fail to accurately reflect how they used the information. This makes it hard for users to trust the answers they receive.

What's the solution?

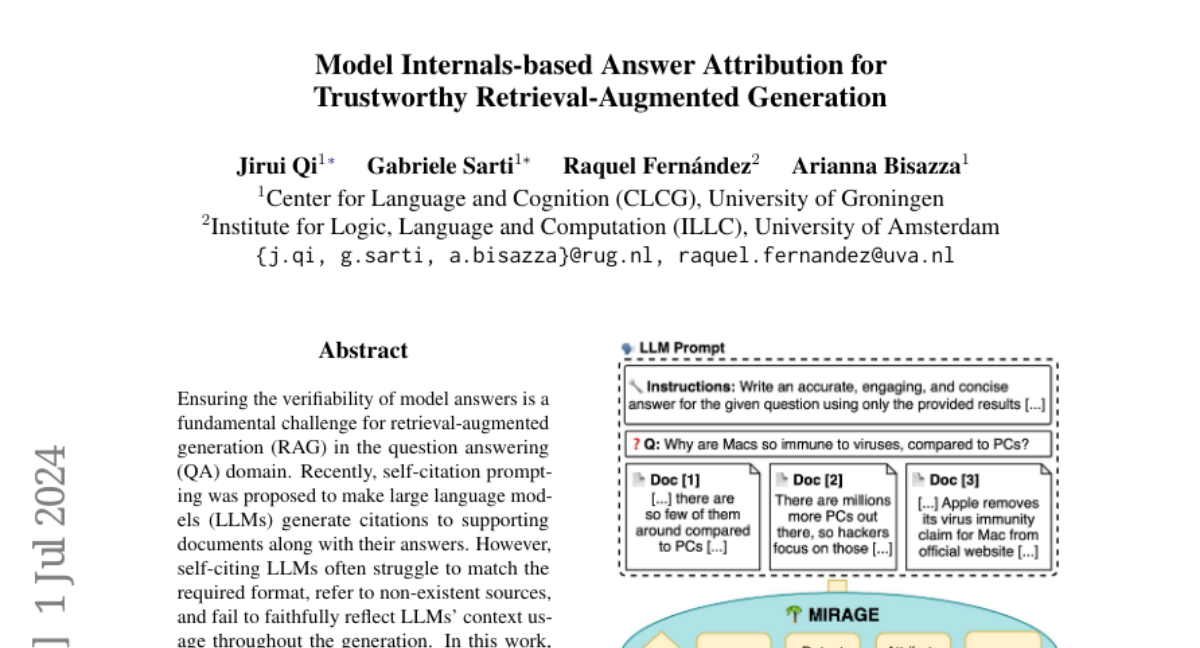

The researchers developed MIRAGE, which uses the internal workings of the language model to improve how it attributes its answers. This method detects specific parts of the answer that relate to retrieved documents and pairs them together, allowing the model to show users exactly which pieces of information contributed to its response. They tested MIRAGE on a multilingual dataset and found that it closely matched human attribution, providing high-quality citations and allowing for better control over how attributions are made.

Why it matters?

This research is important because it enhances the reliability of AI systems that provide answers based on retrieved information. By improving how models attribute their responses, MIRAGE can help build user trust and ensure that AI-generated content is more accurate and accountable. This is crucial as AI becomes more integrated into areas like education, research, and customer service, where accurate information is essential.

Abstract

Ensuring the verifiability of model answers is a fundamental challenge for retrieval-augmented generation (RAG) in the question answering (QA) domain. Recently, self-citation prompting was proposed to make large language models (LLMs) generate citations to supporting documents along with their answers. However, self-citing LLMs often struggle to match the required format, refer to non-existent sources, and fail to faithfully reflect LLMs' context usage throughout the generation. In this work, we present MIRAGE --Model Internals-based RAG Explanations -- a plug-and-play approach using model internals for faithful answer attribution in RAG applications. MIRAGE detects context-sensitive answer tokens and pairs them with retrieved documents contributing to their prediction via saliency methods. We evaluate our proposed approach on a multilingual extractive QA dataset, finding high agreement with human answer attribution. On open-ended QA, MIRAGE achieves citation quality and efficiency comparable to self-citation while also allowing for a finer-grained control of attribution parameters. Our qualitative evaluation highlights the faithfulness of MIRAGE's attributions and underscores the promising application of model internals for RAG answer attribution.