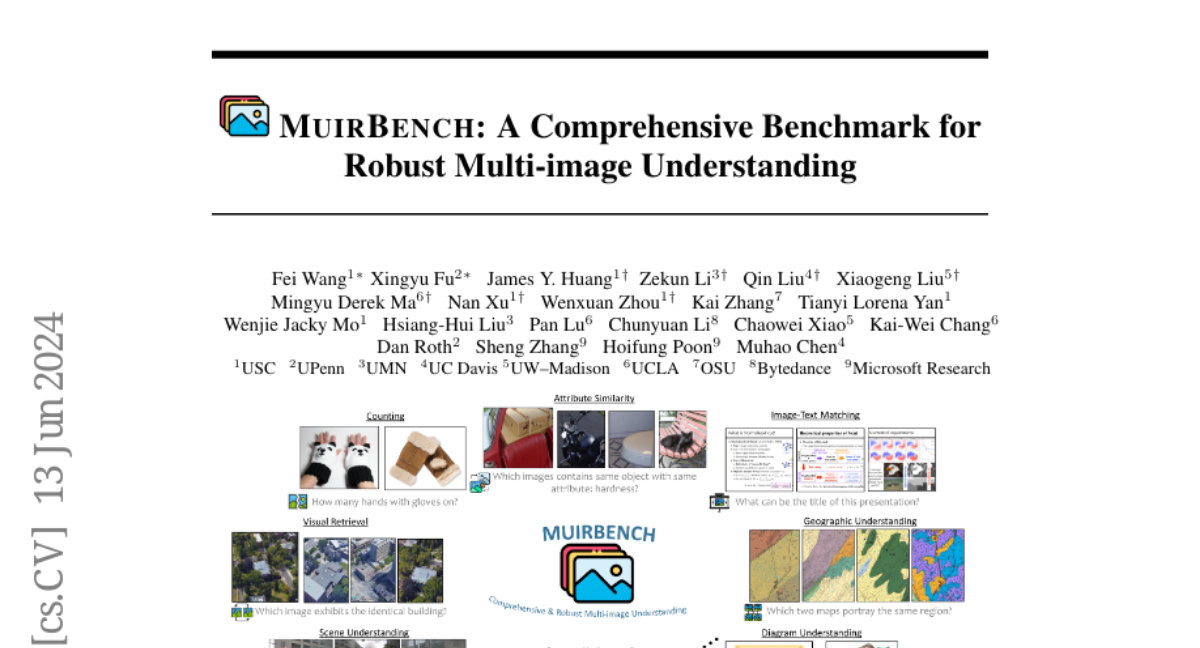

MuirBench: A Comprehensive Benchmark for Robust Multi-image Understanding

Fei Wang, Xingyu Fu, James Y. Huang, Zekun Li, Qin Liu, Xiaogeng Liu, Mingyu Derek Ma, Nan Xu, Wenxuan Zhou, Kai Zhang, Tianyi Lorena Yan, Wenjie Jacky Mo, Hsiang-Hui Liu, Pan Lu, Chunyuan Li, Chaowei Xiao, Kai-Wei Chang, Dan Roth, Sheng Zhang, Hoifung Poon, Muhao Chen

2024-06-14

Summary

This paper introduces MuirBench, a new benchmark designed to evaluate how well multimodal language models (LLMs) understand and analyze multiple images at once. It includes a variety of tasks that test the models' abilities to reason about the relationships between images.

What's the problem?

Most existing language models are primarily trained to work with single images, which limits their ability to understand complex scenarios that involve multiple images. This is a problem because many real-world applications, like visual reasoning or scene analysis, require the ability to interpret and relate information from several images simultaneously. Current benchmarks do not adequately assess how well these models can handle such tasks.

What's the solution?

MuirBench addresses this issue by providing a comprehensive set of 12 tasks that involve understanding relationships between pairs of images, such as spatial and temporal connections. The benchmark includes 11,264 images and 2,600 multiple-choice questions designed to challenge the models in various ways. Each task is carefully constructed to include both answerable and unanswerable questions, allowing for a reliable assessment of the models' performance. The results showed that even the best models struggled with these tasks, indicating a need for further development in this area.

Why it matters?

This research is important because it highlights the need for better evaluation tools for multimodal language models that can process multiple images. By creating MuirBench, the authors aim to encourage researchers to improve these models so they can better understand complex visual information, which is crucial for advancements in fields like robotics, autonomous vehicles, and enhanced AI applications.

Abstract

We introduce MuirBench, a comprehensive benchmark that focuses on robust multi-image understanding capabilities of multimodal LLMs. MuirBench consists of 12 diverse multi-image tasks (e.g., scene understanding, ordering) that involve 10 categories of multi-image relations (e.g., multiview, temporal relations). Comprising 11,264 images and 2,600 multiple-choice questions, MuirBench is created in a pairwise manner, where each standard instance is paired with an unanswerable variant that has minimal semantic differences, in order for a reliable assessment. Evaluated upon 20 recent multi-modal LLMs, our results reveal that even the best-performing models like GPT-4o and Gemini Pro find it challenging to solve MuirBench, achieving 68.0% and 49.3% in accuracy. Open-source multimodal LLMs trained on single images can hardly generalize to multi-image questions, hovering below 33.3% in accuracy. These results highlight the importance of MuirBench in encouraging the community to develop multimodal LLMs that can look beyond a single image, suggesting potential pathways for future improvements.