Mulberry: Empowering MLLM with o1-like Reasoning and Reflection via Collective Monte Carlo Tree Search

Huanjin Yao, Jiaxing Huang, Wenhao Wu, Jingyi Zhang, Yibo Wang, Shunyu Liu, Yingjie Wang, Yuxin Song, Haocheng Feng, Li Shen, Dacheng Tao

2024-12-26

Summary

This paper introduces Mulberry, a series of multimodal large language models (MLLMs) trained to reason and reflect step by step. The training data is built with a search technique called Collective Monte Carlo Tree Search (CoMCTS).

What's the problem?

To answer a complex question reliably, a multimodal large language model has to break it into smaller intermediate steps. Existing methods often struggle to find good step-by-step reasoning paths efficiently, which can mean more search time and less accurate answers. A better way to guide these models through the reasoning process is needed.

What's the solution?

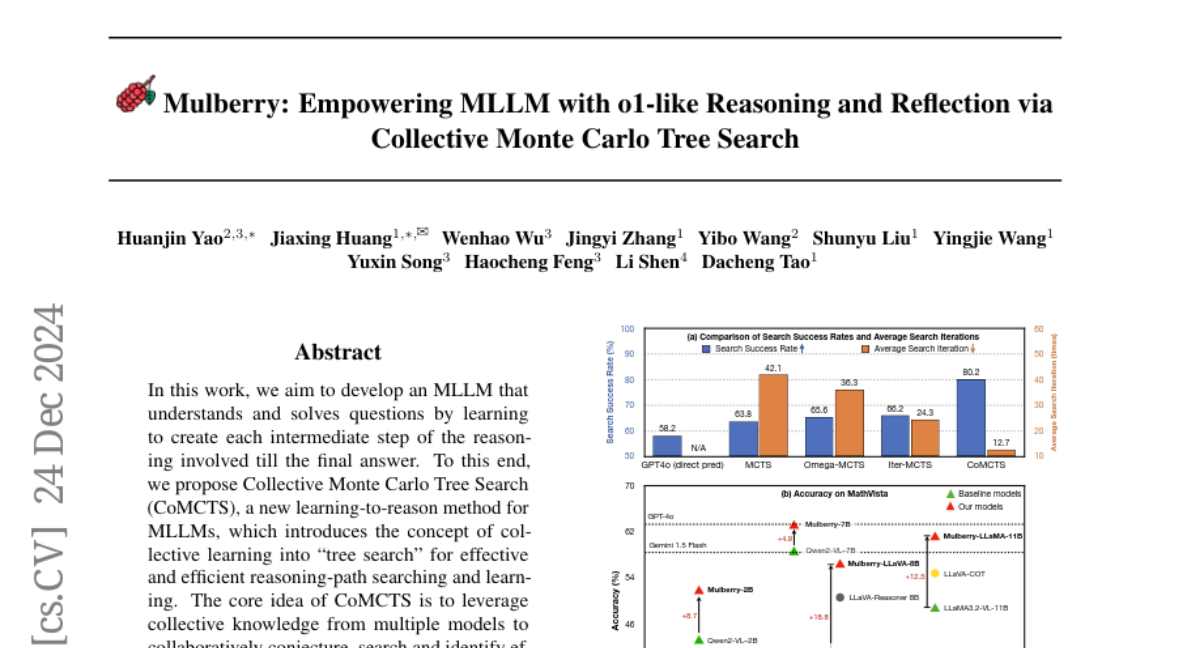

The authors propose CoMCTS, which lets multiple models work together to search for effective reasoning paths. The search repeats four operations: Expansion (proposing candidate next reasoning steps), Simulation and Error Positioning (evaluating the candidate steps and locating the ones that are wrong), Backpropagation (passing the resulting scores back up the tree to update earlier steps), and Selection (choosing the most promising node to explore next). Using CoMCTS, they built a new dataset, Mulberry-260k, which stores a tree of explicit reasoning nodes for each question. Training on this dataset gives the Mulberry models their step-by-step reasoning and reflection skills.
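The four operations can be sketched as a short loop over a tree of reasoning steps. This is a minimal illustration under stated assumptions, not the authors' implementation: the `policies` and `toy_judge` functions are hypothetical stand-ins for real MLLMs, and the averaged score, the 0.5 pruning threshold, the running-mean value update, and the UCB formula are simplifications chosen for this example.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass(eq=False)
class Node:
    step: str                                  # one intermediate reasoning step
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    value: float = 0.0                         # running mean of backed-up scores

    def path(self) -> List[str]:
        """Steps from the root (excluded) down to this node."""
        node, steps = self, []
        while node.parent is not None:
            steps.append(node.step)
            node = node.parent
        return steps[::-1]


Policy = Callable[[str, List[str]], List[str]]   # (question, prefix) -> remaining steps
Scorer = Callable[[str, List[str]], float]       # (question, path) -> score in [0, 1]


def expand_and_score(node, question, policies, scorers, threshold) -> List[Tuple[Node, float]]:
    """Expansion plus Simulation and Error Positioning (assumed scoring rule)."""
    scored = []
    for policy in policies:                      # every model proposes a continuation
        parent = node
        for step in policy(question, node.path()):
            child = next((c for c in parent.children if c.step == step), None)
            if child is None:
                child = Node(step=step, parent=parent)
                score = sum(s(question, child.path()) for s in scorers) / len(scorers)
                if score < threshold:            # erroneous step: drop it and what follows
                    break
                parent.children.append(child)
                scored.append((child, score))
            parent = child
    return scored


def backpropagate(node: Optional[Node], score: float) -> None:
    """Backpropagation: update visit counts and mean values up to the root."""
    while node is not None:
        node.visits += 1
        node.value += (score - node.value) / node.visits
        node = node.parent


def select(root: Node, c: float) -> Node:
    """Selection: descend by UCB until reaching a leaf."""
    node = root
    while node.children:
        parent = node
        node = max(parent.children,
                   key=lambda ch: ch.value + c * math.sqrt(math.log(parent.visits) / ch.visits))
    return node


def comcts(question, policies, scorers, iterations=3, threshold=0.5, c=1.0) -> Node:
    root = Node(step="<root>")
    node = root
    for _ in range(iterations):
        for child, score in expand_and_score(node, question, policies, scorers, threshold):
            backpropagate(child, score)
        node = select(root, c)
    return root


def best_path(root: Node) -> List[str]:
    node = root
    while node.children:
        node = max(node.children, key=lambda ch: ch.value)
    return node.path()


# Hypothetical toy "models": each one replays a fixed script of steps.
def scripted(script: List[str]) -> Policy:
    return lambda question, prefix: script[len(prefix):]


CORRECT = {"3 * 4 = 12", "2 + 12 = 14", "answer: 14"}


def toy_judge(question: str, path: List[str]) -> float:
    # Oracle stand-in for model-based scoring of the latest step.
    return 1.0 if path[-1] in CORRECT else 0.0


policies = [
    scripted(["3 * 4 = 12", "2 + 12 = 14", "answer: 14"]),  # fully correct
    scripted(["3 * 4 = 12", "2 + 12 = 15", "answer: 15"]),  # slips at step 2
    scripted(["2 + 3 = 5", "5 * 4 = 20", "answer: 20"]),    # wrong from step 1
]
root = comcts("2 + 3 * 4 = ?", policies, [toy_judge])
print(best_path(root))
```

In this toy run the second script shares its first step with the correct path and is cut off at its faulty second step, while the third script is pruned immediately, so only the correct three-step path survives in the tree.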

Why does it matter?

This research is important because it advances the capabilities of AI in understanding and solving complex problems. By improving how MLLMs reason, Mulberry can enhance applications in various fields, such as education, customer service, and research, making AI more effective at providing accurate answers and insights.

Abstract

In this work, we aim to develop an MLLM that understands and solves questions by learning to create each intermediate step of the reasoning involved till the final answer. To this end, we propose Collective Monte Carlo Tree Search (CoMCTS), a new learning-to-reason method for MLLMs, which introduces the concept of collective learning into "tree search" for effective and efficient reasoning-path searching and learning. The core idea of CoMCTS is to leverage collective knowledge from multiple models to collaboratively conjecture, search and identify effective reasoning paths toward correct answers via four iterative operations including Expansion, Simulation and Error Positioning, Backpropagation, and Selection. Using CoMCTS, we construct Mulberry-260k, a multimodal dataset with a tree of rich, explicit and well-defined reasoning nodes for each question. With Mulberry-260k, we perform collective SFT to train our model, Mulberry, a series of MLLMs with o1-like step-by-step Reasoning and Reflection capabilities. Extensive experiments demonstrate the superiority of our proposed methods on various benchmarks. Code will be available at https://github.com/HJYao00/Mulberry