Natural Language Reinforcement Learning

Xidong Feng, Ziyu Wan, Haotian Fu, Bo Liu, Mengyue Yang, Girish A. Koushik, Zhiyuan Hu, Ying Wen, Jun Wang

2024-11-21

Summary

This paper introduces Natural Language Reinforcement Learning (NLRL), a new approach that combines reinforcement learning with natural language processing to improve decision-making in various tasks.

What's the problem?

Traditional reinforcement learning uses mathematical models to make decisions, but it often struggles to work effectively with natural language tasks. This limits its application in areas like conversational AI and language understanding, where human-like reasoning is needed.

What's the solution?

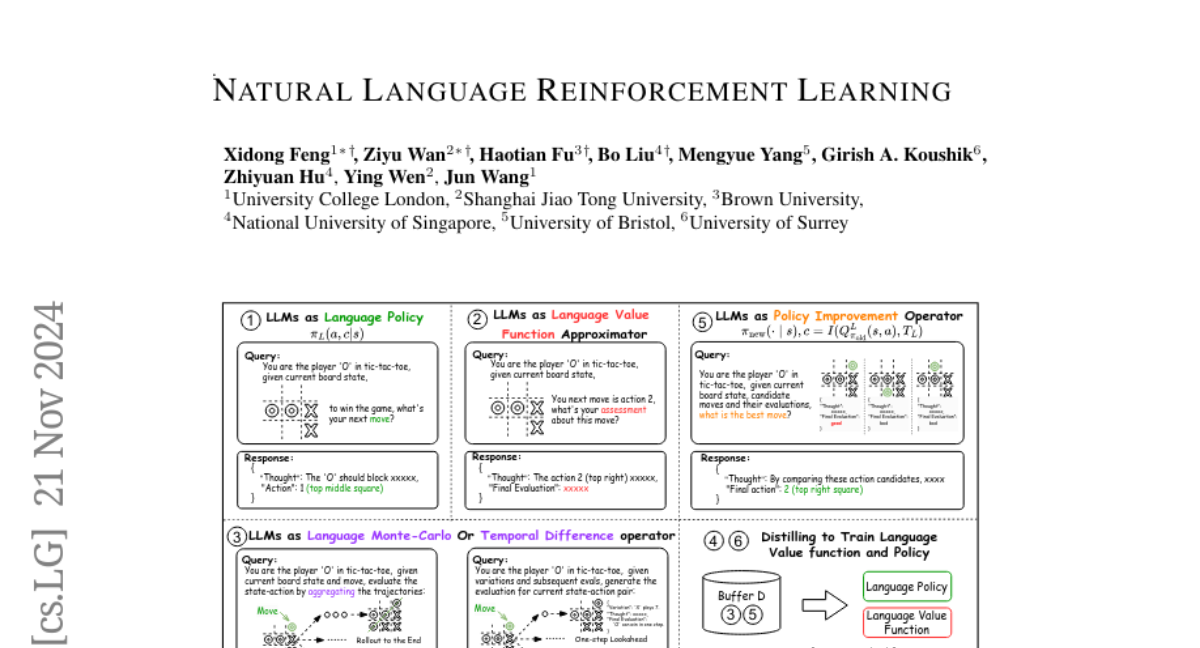

NLRL extends the standard reinforcement learning framework by redefining key concepts—such as goals, strategies, and value assessments—in terms of natural language. This allows researchers to use large language models to enhance decision-making processes. The paper shows that NLRL can be implemented effectively through simple prompts or more complex training methods. Experiments in games like Maze, Breakthrough, and Tic-Tac-Toe demonstrate that NLRL can improve performance and understanding in these scenarios.

Why it matters?

This research is significant because it opens up new possibilities for using reinforcement learning in natural language tasks. By bridging the gap between decision-making and language understanding, NLRL can lead to better AI systems that can interact with humans more naturally and effectively.

Abstract

Reinforcement Learning (RL) mathematically formulates decision-making with Markov Decision Process (MDP). With MDPs, researchers have achieved remarkable breakthroughs across various domains, including games, robotics, and language models. This paper seeks a new possibility, Natural Language Reinforcement Learning (NLRL), by extending traditional MDP to natural language-based representation space. Specifically, NLRL innovatively redefines RL principles, including task objectives, policy, value function, Bellman equation, and policy iteration, into their language counterparts. With recent advancements in large language models (LLMs), NLRL can be practically implemented to achieve RL-like policy and value improvement by either pure prompting or gradient-based training. Experiments over Maze, Breakthrough, and Tic-Tac-Toe games demonstrate the effectiveness, efficiency, and interpretability of the NLRL framework among diverse use cases. Our code will be released at https://github.com/waterhorse1/Natural-language-RL.