Non Verbis, Sed Rebus: Large Language Models are Weak Solvers of Italian Rebuses

Gabriele Sarti, Tommaso Caselli, Malvina Nissim, Arianna Bisazza

2024-08-02

Summary

This paper discusses how large language models (LLMs) struggle to solve Italian rebuses, which are puzzles that require combining images and letters to find hidden phrases. The authors introduce a new collection of rebuses to test these models and analyze their performance.

What's the problem?

Rebuses are complex puzzles that involve multi-step reasoning, where you need to interpret images and letters together to uncover a hidden message. However, current LLMs like LLaMA-3 and GPT-4o perform poorly on these tasks. This is partly because they tend to memorize answers rather than truly understand how to solve the puzzles, making them ineffective for this type of reasoning.

What's the solution?

To address this problem, the authors created a large dataset of verbalized rebuses in Italian and used it to evaluate the rebus-solving abilities of various LLMs. They found that while fine-tuning the models could improve their performance, much of the improvement came from memorization rather than genuine understanding. This highlights the need for better methods to enhance LLMs' reasoning skills.

Why it matters?

This research is significant because it sheds light on the limitations of current language models in solving complex puzzles like rebuses. By identifying these weaknesses, it encourages further development of more capable AI systems that can handle intricate reasoning tasks, ultimately improving their usefulness in real-world applications.

Abstract

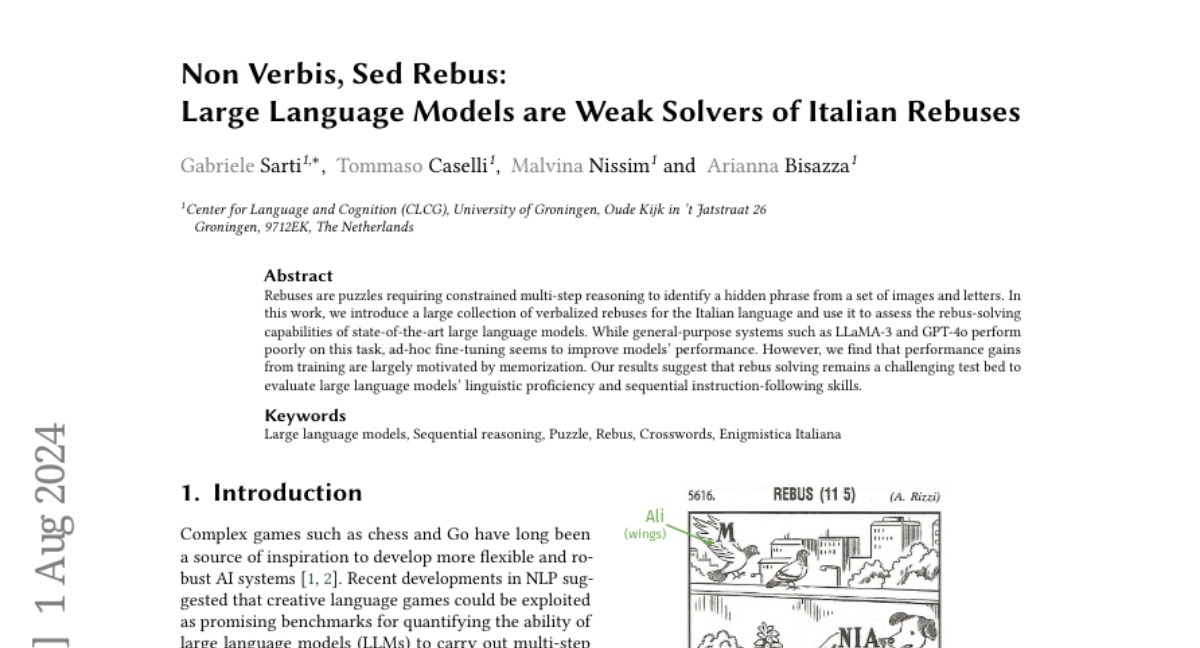

Rebuses are puzzles requiring constrained multi-step reasoning to identify a hidden phrase from a set of images and letters. In this work, we introduce a large collection of verbalized rebuses for the Italian language and use it to assess the rebus-solving capabilities of state-of-the-art large language models. While general-purpose systems such as LLaMA-3 and GPT-4o perform poorly on this task, ad-hoc fine-tuning seems to improve models' performance. However, we find that performance gains from training are largely motivated by memorization. Our results suggest that rebus solving remains a challenging test bed to evaluate large language models' linguistic proficiency and sequential instruction-following skills.