Normalizing Flows are Capable Generative Models

Shuangfei Zhai, Ruixiang Zhang, Preetum Nakkiran, David Berthelot, Jiatao Gu, Huangjie Zheng, Tianrong Chen, Miguel Angel Bautista, Navdeep Jaitly, Josh Susskind

2024-12-13

Summary

This paper revisits Normalizing Flows (NFs), a class of likelihood-based generative models for continuous data that can both generate new samples and compute the exact probability density of any input. It argues that NFs are more capable than previously believed.
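The exact density comes from the change-of-variables formula. The identity below is general to NFs and not specific to TarFlow. It is followed by the affine autoregressive special case used by Masked Autoregressive Flows, where the Jacobian is triangular and its log-determinant reduces to a sum. The names mu_t and s_t (a shift and a log-scale predicted from earlier positions) are our own notation.

```latex
% Change of variables for an invertible, differentiable map f : x \mapsto z
\log p_X(x) = \log p_Z\!\big(f(x)\big) + \log \left| \det \frac{\partial f(x)}{\partial x} \right|

% Affine autoregressive layer (MAF-style), t = 1, \dots, D
z_t = \big(x_t - \mu_t(x_{<t})\big)\, e^{-s_t(x_{<t})}
\quad\Longrightarrow\quad
\log \left| \det \frac{\partial z}{\partial x} \right| = -\sum_{t=1}^{D} s_t(x_{<t})
```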

What's the problem?

Popular generative models each have drawbacks. Generative Adversarial Networks (GANs) can be unstable to train and provide no likelihood for their samples. Variational Autoencoders (VAEs) provide only a lower bound on the likelihood. Normalizing Flows do give exact likelihoods, but they have received relatively little attention in recent years, and their sample quality has been regarded as lagging behind other approaches such as diffusion models.

What's the solution?

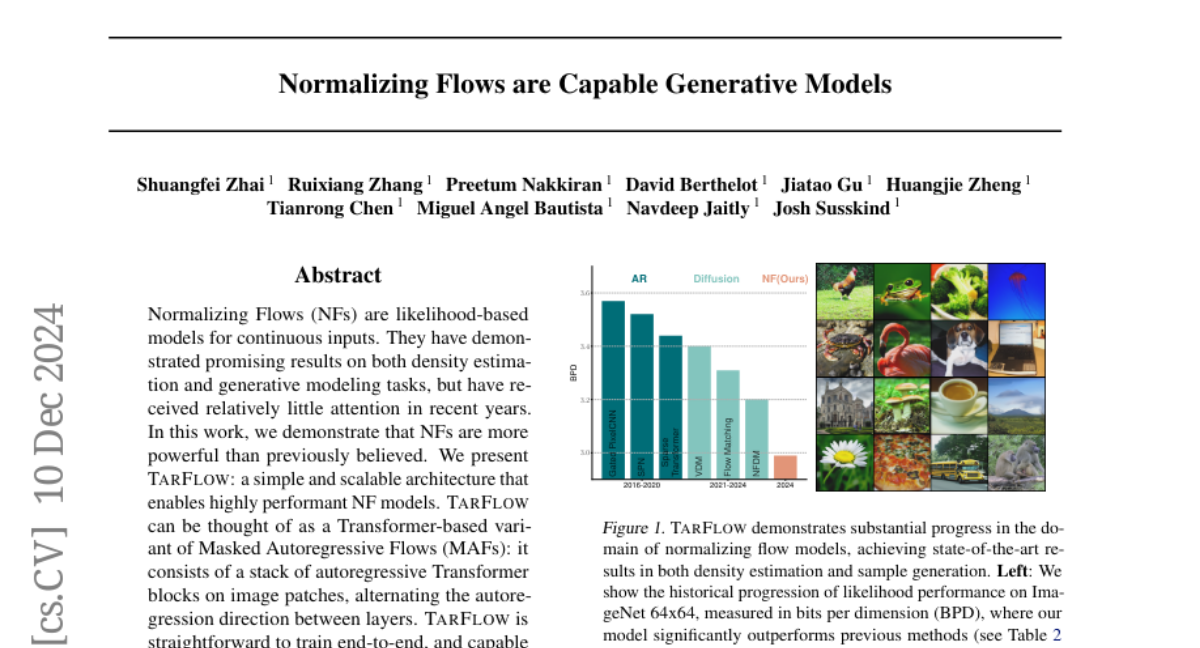

The authors introduce TarFlow, a Normalizing Flow architecture built from a stack of autoregressive Transformer blocks that operate on image patches, with the autoregression direction alternating between layers. To improve sample quality, they add three techniques: Gaussian noise augmentation during training, a post-training denoising step, and a guidance method for both class-conditional and unconditional generation. TarFlow is straightforward to train end-to-end and models pixels directly. It sets new state-of-the-art likelihood results on images by a large margin, and its samples have quality and diversity comparable to those of diffusion models.
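Gaussian noise augmentation means the flow is trained on x + σε instead of on the clean input x. The NumPy sketch below shows that step together with one common way to remove the noise afterward: Tweedie's formula x̂ = y + σ²∇_y log p(y), which returns the posterior mean of the clean input given the noisy one. This is an illustration under stated assumptions, not the authors' implementation. The noise level `SIGMA`, the helper names, and the 1-D Gaussian stand-in for a trained flow (chosen because its score has a closed form) are all hypothetical. Tweedie's formula is a standard denoiser swapped in here and is not presented as a confirmed detail of TarFlow.

```python
import numpy as np

SIGMA = 0.1  # hypothetical augmentation noise level


def augment(x, rng, sigma=SIGMA):
    """Gaussian noise augmentation: the flow is trained on x + sigma * eps."""
    return x + sigma * rng.standard_normal(x.shape)


def toy_score(y, mu, s, sigma=SIGMA):
    """Score d/dy log p(y) when clean data ~ N(mu, s^2), so y ~ N(mu, s^2 + sigma^2).

    Hypothetical stand-in for the input-gradient of a trained flow's log-density.
    """
    return -(y - mu) / (s**2 + sigma**2)


def tweedie_denoise(y, score, sigma=SIGMA):
    """Tweedie's formula: E[x | y] = y + sigma^2 * score(y)."""
    return y + sigma**2 * score(y)


rng = np.random.default_rng(0)
mu, s = 1.0, 0.1
x = mu + s * rng.standard_normal(100_000)  # clean toy data
y = augment(x, rng)                         # noisy inputs seen during training
x_hat = tweedie_denoise(y, lambda v: toy_score(v, mu, s))

mse_noisy = np.mean((y - x) ** 2)          # close to sigma^2
mse_denoised = np.mean((x_hat - x) ** 2)   # close to s^2 sigma^2 / (s^2 + sigma^2)
print(mse_noisy, mse_denoised)
```

A flow trained on noisy inputs approximates the noisy marginal p(y), which is the density whose score Tweedie's formula needs. With a real model, the score would come from automatic differentiation of the flow's log-likelihood with respect to its input.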

Why does it matter?

This research shows that Normalizing Flows are more powerful than previously believed, even on complex, high-dimensional data such as images. A single TarFlow model offers both exact likelihoods and high-quality samples, so it could benefit applications that need either or both, such as image processing, computer graphics, and other fields that depend on high-quality data generation.

Abstract

Normalizing Flows (NFs) are likelihood-based models for continuous inputs. They have demonstrated promising results on both density estimation and generative modeling tasks, but have received relatively little attention in recent years. In this work, we demonstrate that NFs are more powerful than previously believed. We present TarFlow: a simple and scalable architecture that enables highly performant NF models. TarFlow can be thought of as a Transformer-based variant of Masked Autoregressive Flows (MAFs): it consists of a stack of autoregressive Transformer blocks on image patches, alternating the autoregression direction between layers. TarFlow is straightforward to train end-to-end, and capable of directly modeling and generating pixels. We also propose three key techniques to improve sample quality: Gaussian noise augmentation during training, a post-training denoising procedure, and an effective guidance method for both class-conditional and unconditional settings. Putting these together, TarFlow sets new state-of-the-art results on likelihood estimation for images, beating the previous best methods by a large margin, and generates samples with quality and diversity comparable to diffusion models, for the first time with a stand-alone NF model. We make our code available at https://github.com/apple/ml-tarflow.
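The abstract describes TarFlow as a Transformer-based variant of Masked Autoregressive Flows. Each layer is autoregressive over a sequence of patches, and successive layers flip the autoregression direction. The NumPy sketch below shows that structure on a toy sequence of scalars. Encoding (data to noise) runs over all positions in parallel and yields an exact log-determinant, while sampling inverts each layer one position at a time. Assumptions: each "patch" is a single number, and the conditioner is a hypothetical strictly lower-triangular linear map standing in for a causal Transformer. The class and method names are illustrative and do not come from the ml-tarflow repository.

```python
import numpy as np


class AffineAutoregressiveLayer:
    """One MAF-style affine layer over a sequence of scalar 'patches'.

    Hypothetical stand-in: the conditioner is a strictly lower-triangular
    linear map instead of a causal Transformer, so position t sees only < t.
    """

    def __init__(self, seq_len, reverse, rng):
        self.reverse = reverse  # True: autoregress right-to-left
        mask = np.tril(np.ones((seq_len, seq_len)), k=-1)  # strictly causal
        self.w_mu = rng.normal(scale=0.1, size=(seq_len, seq_len)) * mask
        self.w_s = rng.normal(scale=0.1, size=(seq_len, seq_len)) * mask

    def _params(self, x):
        # Shift mu_t and log-scale s_t, each a function of x[:, :t] only;
        # tanh keeps the log-scales bounded for numerical stability.
        return x @ self.w_mu.T, np.tanh(x @ self.w_s.T)

    def forward(self, x):
        """Data -> latent for all positions at once; returns (z, log|det J|)."""
        if self.reverse:
            x = x[:, ::-1]
        mu, s = self._params(x)
        z = (x - mu) * np.exp(-s)
        logdet = -s.sum(axis=1)  # triangular Jacobian: sum of log-diagonal
        if self.reverse:
            z = z[:, ::-1]  # flipping is a permutation, so |det| = 1
        return z, logdet

    def inverse(self, z):
        """Latent -> data, one position at a time (sequential sampling)."""
        if self.reverse:
            z = z[:, ::-1]
        x = np.zeros(z.shape)
        for t in range(z.shape[1]):
            mu, s = self._params(x)  # only the filled entries x[:, :t] matter
            x[:, t] = z[:, t] * np.exp(s[:, t]) + mu[:, t]
        if self.reverse:
            x = x[:, ::-1]
        return x


class ToyAlternatingFlow:
    """Stack of affine autoregressive layers with alternating direction."""

    def __init__(self, seq_len, n_layers, seed=0):
        rng = np.random.default_rng(seed)
        self.seq_len = seq_len
        self.layers = [
            AffineAutoregressiveLayer(seq_len, reverse=(i % 2 == 1), rng=rng)
            for i in range(n_layers)
        ]

    def encode(self, x):
        logdet = np.zeros(x.shape[0])
        for layer in self.layers:
            x, ld = layer.forward(x)
            logdet += ld
        return x, logdet

    def decode(self, z):
        for layer in reversed(self.layers):
            z = layer.inverse(z)
        return z

    def log_prob(self, x):
        """Exact log-likelihood under a standard-normal prior on z."""
        z, logdet = self.encode(x)
        log_pz = -0.5 * (z**2 + np.log(2 * np.pi)).sum(axis=1)
        return log_pz + logdet

    def sample(self, n, rng):
        return self.decode(rng.standard_normal((n, self.seq_len)))


flow = ToyAlternatingFlow(seq_len=6, n_layers=4)
x = np.random.default_rng(1).normal(size=(3, 6))
z, logdet = flow.encode(x)
print(np.allclose(flow.decode(z), x))  # round trip recovers the input
print(flow.log_prob(x))
```

Flipping the sequence between layers lets every position eventually condition on every other position, even though each individual layer sees only earlier positions.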