Out-of-Distribution Detection with Attention Head Masking for Multimodal Document Classification

Christos Constantinou, Georgios Ioannides, Aman Chadha, Aaron Elkins, Edwin Simpson

2024-08-22

Summary

This paper introduces a method for detecting out-of-distribution (OOD) data in multimodal document classification systems, which is important for improving the reliability of machine learning models.

What's the problem?

Detecting OOD data is essential because it helps keep machine learning models from making overconfident predictions on unfamiliar inputs. Most existing methods target a single modality, such as images or text, and little research has examined how well they detect OOD data in documents that combine visual and textual information.

What's the solution?

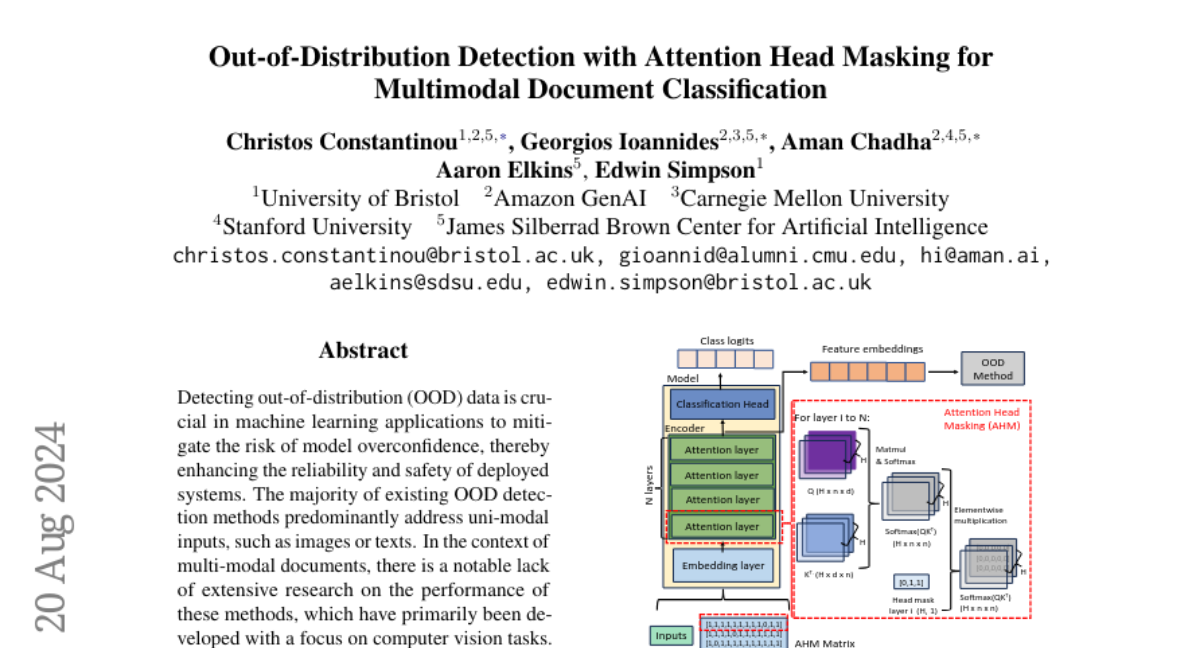

The authors propose a new technique called attention head masking (AHM), designed specifically for multimodal document classification. AHM masks selected attention heads in the Transformer that jointly models a document's visual and textual content, and the authors report that this lowers the false positive rate of OOD detection compared with existing methods. They also introduce FinanceDocs, a new document AI dataset, to support research in this area.
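The summary does not say which heads AHM masks or how the OOD score is computed from the masked model. As a minimal sketch of the masking operation alone, assuming a binary per-head mask that scales each head's attention probabilities (the same convention as the `head_mask` argument that many Hugging Face Transformers models accept), the pure-Python toy below shows how zeroing one head removes its contribution to the token representations and to a mean-pooled document embedding. The function names, toy dimensions, and use of mean pooling are illustrative assumptions, not the authors' implementation.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def masked_self_attention(x, wq, wk, wv, num_heads, head_mask):
    """Multi-head self-attention over token vectors x (n x d) with a head mask.

    head_mask[h] multiplies head h's attention probabilities: 0.0 removes the
    head's contribution, 1.0 keeps it unchanged. The usual output projection
    W_o is omitted (assumption for brevity), so each head owns a contiguous
    slice of the output columns.
    """
    n, d = len(x), len(x[0])
    if d % num_heads != 0 or len(head_mask) != num_heads:
        raise ValueError("d must divide evenly by num_heads; one mask entry per head")
    dh = d // num_heads
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    out = [[0.0] * d for _ in range(n)]
    for h in range(num_heads):
        cols = range(h * dh, (h + 1) * dh)
        for i in range(n):
            scores = [sum(q[i][c] * k[j][c] for c in cols) / math.sqrt(dh) for j in range(n)]
            probs = [p * head_mask[h] for p in softmax(scores)]
            for c in cols:
                out[i][c] = sum(probs[j] * v[j][c] for j in range(n))
    return out


def mean_pool(tokens):
    """Average token vectors into a single document embedding."""
    return [sum(col) / len(tokens) for col in zip(*tokens)]


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Toy usage: 3 tokens (e.g. text and image-patch tokens), width 4, 2 heads.
random.seed(0)
x = rand_matrix(3, 4)
wq, wk, wv = rand_matrix(4, 4), rand_matrix(4, 4), rand_matrix(4, 4)
full = masked_self_attention(x, wq, wk, wv, 2, head_mask=[1.0, 1.0])
masked = masked_self_attention(x, wq, wk, wv, 2, head_mask=[1.0, 0.0])
print(mean_pool(full))
print(mean_pool(masked))  # last two entries are 0.0 because head 1 is masked
```

Because the output projection is left out, a masked head shows up as zeroed columns; in a full Transformer layer the projection would mix the remaining heads before the embeddings reach whatever OOD scoring function is used.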

Why does it matter?

This research matters because it improves the safety and reliability of machine learning systems that analyze documents. Better detection of unfamiliar inputs makes these systems more dependable in real-world applications such as finance, healthcare, and other fields where accurate document analysis is crucial.

Abstract

Detecting out-of-distribution (OOD) data is crucial in machine learning applications to mitigate the risk of model overconfidence, thereby enhancing the reliability and safety of deployed systems. Most existing OOD detection methods address uni-modal inputs, such as images or texts. In the context of multi-modal documents, there is a notable lack of extensive research on the performance of these methods, which have primarily been developed with a focus on computer vision tasks. We propose a novel methodology termed attention head masking (AHM) for multi-modal OOD tasks in document classification systems. Our empirical results demonstrate that the proposed AHM method outperforms all state-of-the-art approaches and significantly decreases the false positive rate (FPR) by up to 7.5% compared to existing solutions. This methodology generalizes well to multi-modal data, such as documents, where visual and textual information are modeled under the same Transformer architecture. To address the scarcity of high-quality publicly available document datasets and encourage further research on OOD detection for documents, we introduce FinanceDocs, a new document AI dataset. Our code and dataset are publicly available.
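The abstract reports FPR reductions without stating the operating point. A common convention in OOD detection, assumed here, is FPR at 95% TPR: the threshold is set so that 95% of in-distribution (ID) documents are accepted, and FPR is the fraction of OOD documents that are still accepted. The sketch below also assumes that a higher score means "more in-distribution"; the function name and the toy scores are hypothetical.

```python
import math


def fpr_at_tpr(id_scores, ood_scores, tpr=0.95):
    """Fraction of OOD samples accepted at the threshold that accepts `tpr` of ID samples.

    Assumed convention: higher score = more in-distribution, and a sample is
    accepted as in-distribution when score >= threshold.
    """
    if not id_scores or not ood_scores:
        raise ValueError("both score lists must be non-empty")
    if not 0.0 < tpr <= 1.0:
        raise ValueError("tpr must lie in (0, 1]")
    ranked = sorted(id_scores, reverse=True)
    # Smallest number of ID samples that must be accepted; the epsilon guards
    # against float rounding pushing tpr * n just above an integer.
    k = max(1, math.ceil(tpr * len(ranked) - 1e-9))
    threshold = ranked[k - 1]
    accepted_ood = sum(1 for s in ood_scores if s >= threshold)
    return accepted_ood / len(ood_scores)


# Toy scores (hypothetical): 10 ID documents and 5 OOD documents.
id_scores = [0.9, 0.8, 0.85, 0.95, 0.7, 0.6, 0.92, 0.88, 0.75, 0.81]
ood_scores = [0.3, 0.65, 0.72, 0.5, 0.9]
print(fpr_at_tpr(id_scores, ood_scores))       # 0.6
print(fpr_at_tpr(id_scores, ood_scores, 0.8))  # 0.2
```

Lower is better: when two detectors are scored on the same ID and OOD sets, the one with the lower value lets fewer OOD documents past a threshold that still accepts the same share of ID documents.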