PingPong: A Benchmark for Role-Playing Language Models with User Emulation and Multi-Model Evaluation

Ilya Gusev

2024-09-12

Summary

This paper talks about PingPong, a new benchmark designed to evaluate how well language models can role-play in conversations by simulating user interactions.

What's the problem?

Evaluating how effectively language models can engage in role-playing conversations is challenging. Traditional methods often lack a dynamic way to assess these models in multi-turn dialogues, making it hard to understand their performance in real-world scenarios.

What's the solution?

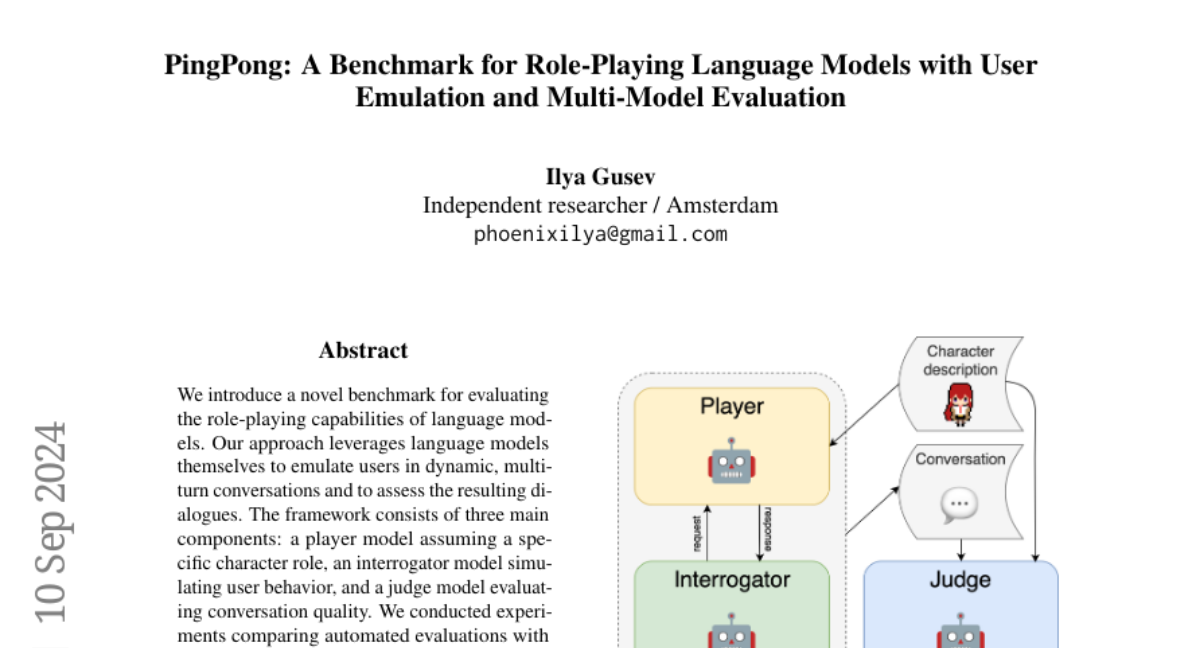

To tackle this issue, the authors created PingPong, which uses language models themselves to emulate users during conversations. The system includes three main parts: a player model that takes on a character role, an interrogator model that simulates user behavior, and a judge model that evaluates the quality of the conversations. They tested this approach against human evaluations and found strong agreement between automated and human assessments.

Why it matters?

This research is important because it provides a structured way to evaluate the conversational abilities of language models in interactive settings. By improving how we assess these models, developers can create more effective AI systems for applications like chatbots, virtual assistants, and interactive storytelling.

Abstract

We introduce a novel benchmark for evaluating the role-playing capabilities of language models. Our approach leverages language models themselves to emulate users in dynamic, multi-turn conversations and to assess the resulting dialogues. The framework consists of three main components: a player model assuming a specific character role, an interrogator model simulating user behavior, and a judge model evaluating conversation quality. We conducted experiments comparing automated evaluations with human annotations to validate our approach, demonstrating strong correlations across multiple criteria. This work provides a foundation for a robust and dynamic evaluation of model capabilities in interactive scenarios.