Precise In-Parameter Concept Erasure in Large Language Models

Yoav Gur-Arieh, Clara Suslik, Yihuai Hong, Fazl Barez, Mor Geva

2025-05-29

Summary

This paper introduces PISCES, a new technique that lets researchers remove specific ideas or concepts from large language models without disrupting the rest of what the model knows.

What's the problem?

The problem is that sometimes AI models learn things that are harmful, biased, or just unwanted, and it's really hard to get rid of those specific ideas without accidentally erasing other useful knowledge or making the model worse overall.

What's the solution?

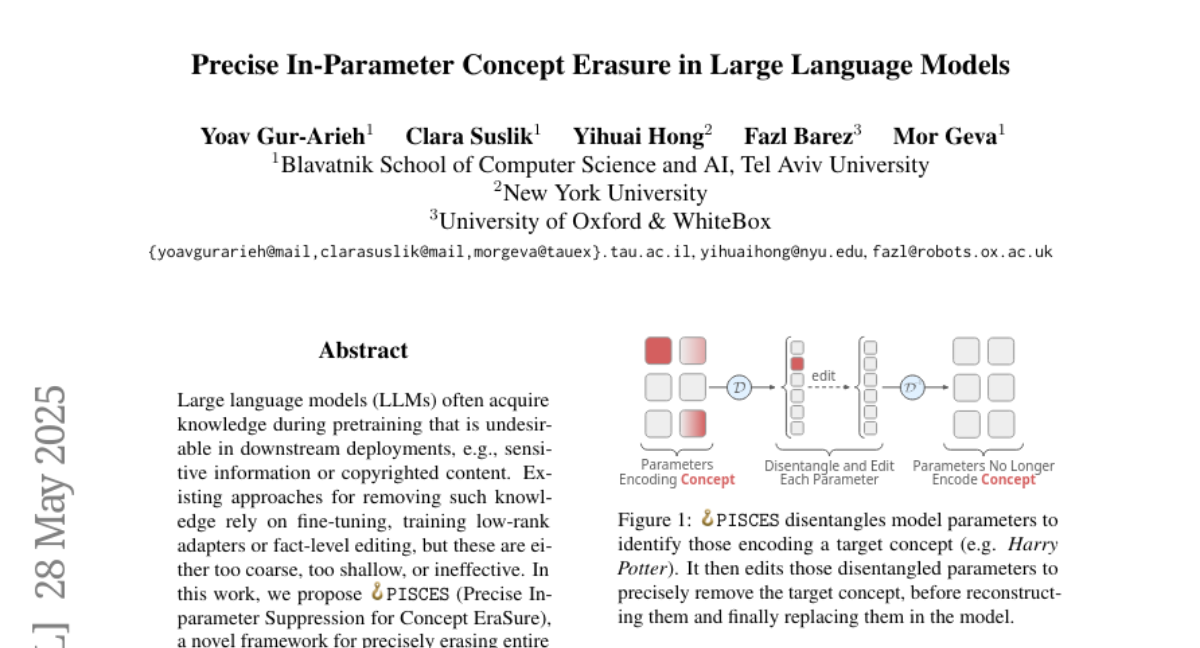

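The researchers developed a method that removes only the concepts they want to erase. It works at the level of features inside the model's parameters: it identifies the features tied to the unwanted concept and edits them directly in the weights, rather than retraining the whole model. Because the edit is confined to those features, the approach is both precise (specific) and robust, so the model loses the unwanted concept but keeps working well on everything else.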
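To make "editing features inside the parameters" concrete, here is a deliberately simplified, hypothetical sketch. It is not the PISCES procedure. It assumes the concept is represented by a single known direction `concept` in the model's hidden space and that a layer writes its output as a weighted sum of parameter vectors (`columns`). Removing the component along `concept` from every parameter vector means the edited layer can no longer write that feature, while parameter vectors orthogonal to it stay unchanged. The names `erase_direction` and `mlp_output` are illustrative only.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]  # list of parameter vectors (columns), each of hidden size d


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def erase_direction(columns: Matrix, concept: Vector) -> Matrix:
    """Hypothetical in-parameter erasure: project each parameter vector w
    onto the orthogonal complement of `concept`, i.e.
    w' = w - (<w, c> / <c, c>) * c."""
    norm_sq = dot(concept, concept)
    if norm_sq == 0:
        raise ValueError("concept direction must be non-zero")
    edited = []
    for w in columns:
        coef = dot(w, concept) / norm_sq  # how much of the concept w carries
        edited.append([wi - coef * ci for wi, ci in zip(w, concept)])
    return edited


def mlp_output(columns: Matrix, activations: Vector) -> Vector:
    """Layer output as a linear combination of parameter vectors:
    out = sum_j a_j * w_j."""
    out = [0.0] * len(columns[0])
    for a, w in zip(activations, columns):
        for i, wi in enumerate(w):
            out[i] += a * wi
    return out


if __name__ == "__main__":
    concept = [1.0, 0.0, 0.0]                  # assumed concept direction
    W = [[2.0, 1.0, 0.0], [0.5, 0.0, 3.0]]     # toy parameter vectors
    W_edit = erase_direction(W, concept)
    print(W_edit)                              # [[0.0, 1.0, 0.0], [0.0, 0.0, 3.0]]
    out = mlp_output(W_edit, [1.0, 2.0])
    print(dot(out, concept))                   # 0.0: the concept can no longer be written
```

The projection illustrates the specificity idea: a parameter vector with no component along `concept` passes through unchanged, so only information aligned with the erased feature is removed. A real method has to find which features encode a concept in the first place, which this sketch simply assumes.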

Why does it matter?

This is important because it gives people more control over what AI models know and how they behave, making it easier to remove harmful or sensitive information and create safer, more trustworthy technology.

Abstract

PISCES, a novel framework using feature-based in-parameter editing, effectively erases concepts from large language models with high specificity and robustness.