PrimeGuard: Safe and Helpful LLMs through Tuning-Free Routing

Blazej Manczak, Eliott Zemour, Eric Lin, Vaikkunth Mugunthan

2024-07-24

Summary

This paper discusses SIGMA, a new method for improving how video models learn from data. It focuses on better understanding videos by learning both detailed and broader features simultaneously, which helps create more accurate representations of video content.

What's the problem?

Current video modeling techniques often struggle to capture complex meanings in videos because they typically focus on low-level details, like individual pixels. This limitation prevents them from understanding higher-level concepts and relationships within the video content, making it difficult for AI to analyze and generate videos effectively.

What's the solution?

To address this issue, SIGMA introduces a novel approach that uses a projection network to learn both the video model and the target features together. Instead of just focusing on pixels, SIGMA organizes video features into clusters and uses an optimal transport method to ensure that the features are diverse and meaningful. This allows the model to better understand how different parts of a video relate to each other over time, improving both spatial and temporal understanding.

Why it matters?

This research is significant because it enhances the capabilities of AI systems in processing and understanding videos. By improving how models learn from video data, SIGMA can be applied in various fields such as video analysis, surveillance, and content generation. This advancement can lead to better performance in tasks like video summarization and object detection, ultimately making AI more effective in real-world applications.

Abstract

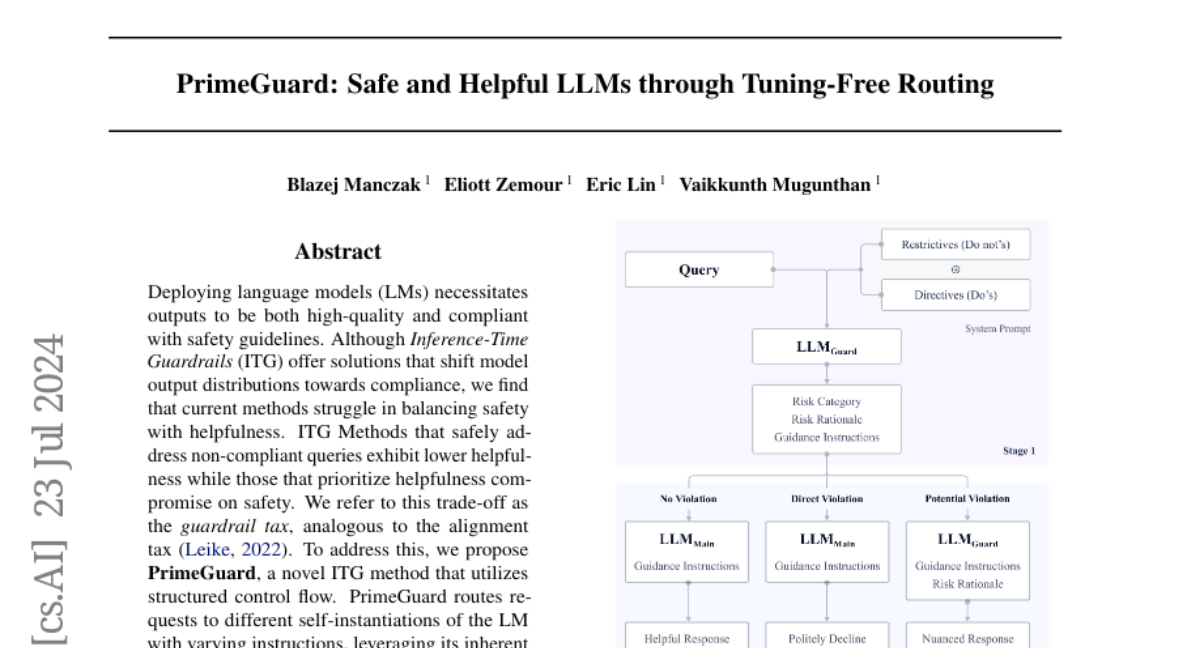

Deploying language models (LMs) necessitates outputs to be both high-quality and compliant with safety guidelines. Although Inference-Time Guardrails (ITG) offer solutions that shift model output distributions towards compliance, we find that current methods struggle in balancing safety with helpfulness. ITG Methods that safely address non-compliant queries exhibit lower helpfulness while those that prioritize helpfulness compromise on safety. We refer to this trade-off as the guardrail tax, analogous to the alignment tax. To address this, we propose PrimeGuard, a novel ITG method that utilizes structured control flow. PrimeGuard routes requests to different self-instantiations of the LM with varying instructions, leveraging its inherent instruction-following capabilities and in-context learning. Our tuning-free approach dynamically compiles system-designer guidelines for each query. We construct and release safe-eval, a diverse red-team safety benchmark. Extensive evaluations demonstrate that PrimeGuard, without fine-tuning, overcomes the guardrail tax by (1) significantly increasing resistance to iterative jailbreak attacks and (2) achieving state-of-the-art results in safety guardrailing while (3) matching helpfulness scores of alignment-tuned models. Extensive evaluations demonstrate that PrimeGuard, without fine-tuning, outperforms all competing baselines and overcomes the guardrail tax by improving the fraction of safe responses from 61% to 97% and increasing average helpfulness scores from 4.17 to 4.29 on the largest models, while reducing attack success rate from 100% to 8%. PrimeGuard implementation is available at https://github.com/dynamofl/PrimeGuard and safe-eval dataset is available at https://huggingface.co/datasets/dynamoai/safe_eval.