PUMA: Empowering Unified MLLM with Multi-granular Visual Generation

Rongyao Fang, Chengqi Duan, Kun Wang, Hao Li, Hao Tian, Xingyu Zeng, Rui Zhao, Jifeng Dai, Hongsheng Li, Xihui Liu

2024-10-22

Summary

This paper introduces PUMA, a new model that enhances multimodal large language models (MLLMs) by improving their ability to generate images at different levels of detail for various tasks.

What's the problem?

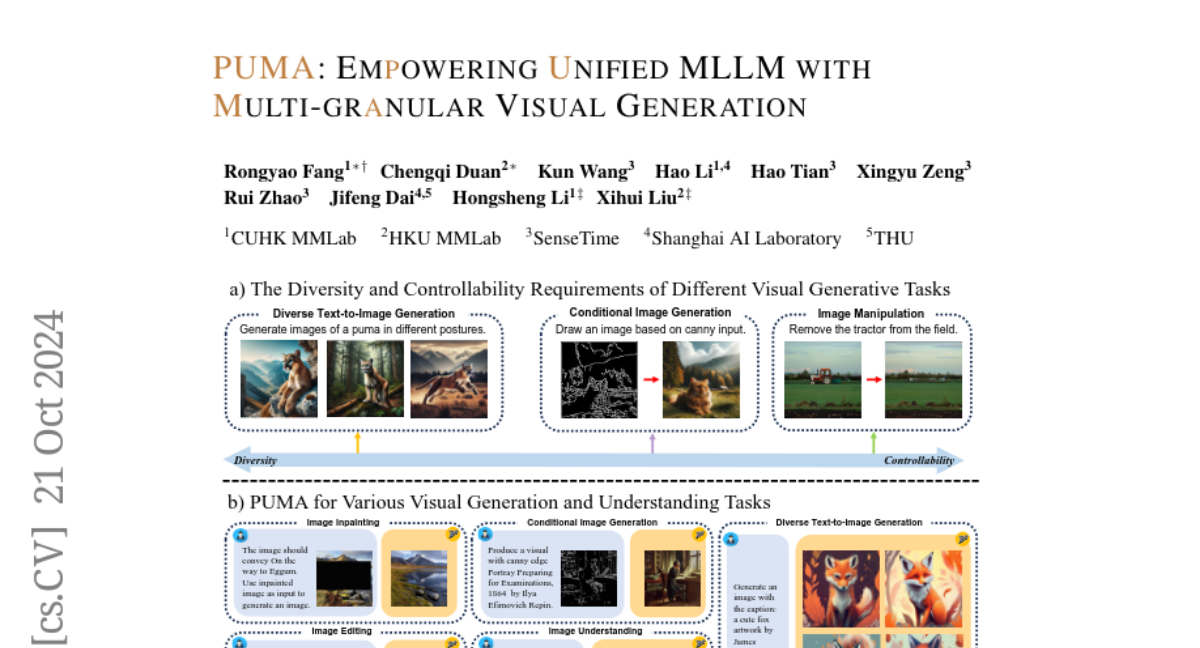

As technology advances, there is a growing need for models that can understand and generate visual content alongside text. However, existing models struggle to handle different levels of detail required for various image generation tasks. For example, generating a simple image from text requires different capabilities than editing an existing image. This lack of flexibility can limit the effectiveness of these models in real-world applications.

What's the solution?

To address this issue, the authors developed PUMA, which allows MLLMs to process and generate images at multiple levels of detail. This means that PUMA can handle everything from broad, general images to specific, detailed edits within the same framework. The model is trained using a combination of multimodal pretraining and task-specific instruction tuning, enabling it to perform a wide range of tasks like image generation, editing, and understanding.

Why it matters?

This research is significant because it represents a step towards creating more versatile AI models that can seamlessly switch between different types of visual tasks. By improving how models generate and manipulate images, PUMA could enhance applications in fields like graphic design, video game development, and virtual reality, making these technologies more accessible and effective.

Abstract

Recent advancements in multimodal foundation models have yielded significant progress in vision-language understanding. Initial attempts have also explored the potential of multimodal large language models (MLLMs) for visual content generation. However, existing works have insufficiently addressed the varying granularity demands of different image generation tasks within a unified MLLM paradigm - from the diversity required in text-to-image generation to the precise controllability needed in image manipulation. In this work, we propose PUMA, emPowering Unified MLLM with Multi-grAnular visual generation. PUMA unifies multi-granular visual features as both inputs and outputs of MLLMs, elegantly addressing the different granularity requirements of various image generation tasks within a unified MLLM framework. Following multimodal pretraining and task-specific instruction tuning, PUMA demonstrates proficiency in a wide range of multimodal tasks. This work represents a significant step towards a truly unified MLLM capable of adapting to the granularity demands of various visual tasks. The code and model will be released in https://github.com/rongyaofang/PUMA.