R1-Onevision: Advancing Generalized Multimodal Reasoning through Cross-Modal Formalization

Yi Yang, Xiaoxuan He, Hongkun Pan, Xiyan Jiang, Yan Deng, Xingtao Yang, Haoyu Lu, Dacheng Yin, Fengyun Rao, Minfeng Zhu, Bo Zhang, Wei Chen

2025-03-14

Summary

This paper introduces R1-Onevision, a multimodal reasoning model that improves how AI combines images and text when solving problems. It does this by first converting visual content into formal text descriptions that it can then reason about.

What's the problem?

Current AI models struggle with multimodal reasoning, which means they have difficulty combining visual and textual information to solve problems. They're not great at understanding images deeply, leading to poor performance on complex tasks, and there aren't good benchmarks to accurately measure their abilities.

What's the solution?

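The researchers developed a cross-modal pipeline that turns images into formal, text-based descriptions, so the model can reason about them using language-based techniques. They used this pipeline to build the R1-Onevision dataset, which contains step-by-step reasoning annotations across diverse domains. They then trained the R1-Onevision model on this data with supervised fine-tuning followed by reinforcement learning. Finally, they created a separate benchmark, R1-Onevision-Bench, which tests reasoning at educational levels from junior high school to university and beyond.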
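The sketch below is a minimal Python illustration of the general idea of reasoning over a textual description of an image instead of the raw pixels. A structured description is serialized into plain text and combined with the question into a language prompt. The `FormalScene` class, its fields, and the prompt wording are hypothetical. They are not the authors' representation or pipeline, and the code does not perform the image-to-description step itself.

```python
from dataclasses import dataclass, field


@dataclass
class FormalScene:
    """Hypothetical formal textual description of an image (not the paper's schema)."""
    kind: str  # e.g. "geometry diagram", "table", "chart"
    elements: list[str] = field(default_factory=list)   # objects and their attributes
    relations: list[str] = field(default_factory=list)  # facts relating the elements

    def to_text(self) -> str:
        # Serialize the structured description into plain text a language model can read.
        lines = [f"Image type: {self.kind}"]
        lines += [f"Element: {e}" for e in self.elements]
        lines += [f"Relation: {r}" for r in self.relations]
        return "\n".join(lines)


def build_reasoning_prompt(scene: FormalScene, question: str) -> str:
    # Combine the formalized image with the question so reasoning happens in language.
    return (
        "Description of the image:\n"
        f"{scene.to_text()}\n\n"
        f"Question: {question}\n"
        "Think step by step, then give the final answer."
    )


# Hypothetical example: a right triangle described as text instead of pixels.
scene = FormalScene(
    kind="geometry diagram",
    elements=["triangle ABC", "angle A = 90 degrees", "AB = 3", "AC = 4"],
    relations=["BC is the hypotenuse"],
)
print(build_reasoning_prompt(scene, "What is the length of BC?"))
```

Once the diagram is expressed this way, a text-only reasoner can apply familiar steps (here, the Pythagorean theorem) without needing to interpret the image directly.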

Why it matters?

This work matters because it shows that converting images into formal text can help models reason more effectively over visual and textual data. R1-Onevision outperforms models such as GPT-4o and Qwen2.5-VL on several challenging multimodal reasoning benchmarks. This improvement could lead to better AI applications in areas like education, problem-solving, and understanding complex information.

Abstract

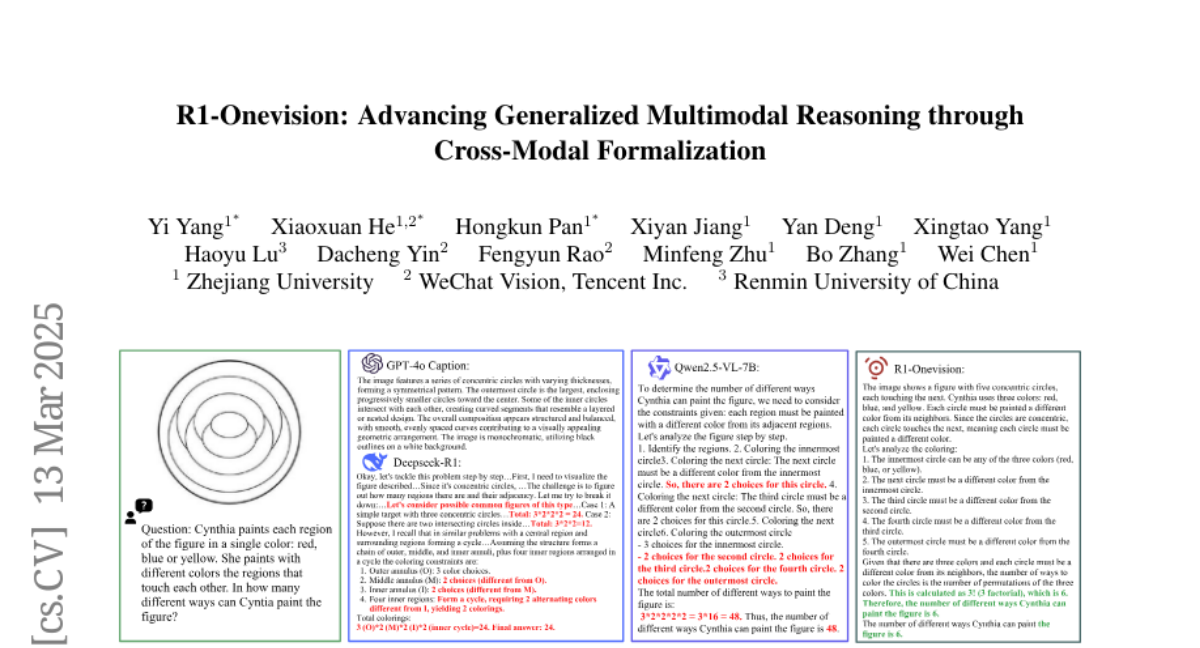

Large Language Models have demonstrated remarkable reasoning capabilities in complex textual tasks. However, multimodal reasoning, which requires integrating visual and textual information, remains a significant challenge. Existing visual-language models often struggle to effectively analyze and reason about visual content, resulting in suboptimal performance on complex reasoning tasks. Moreover, the absence of comprehensive benchmarks hinders the accurate assessment of multimodal reasoning capabilities. In this paper, we introduce R1-Onevision, a multimodal reasoning model designed to bridge the gap between visual perception and deep reasoning. To achieve this, we propose a cross-modal reasoning pipeline that transforms images into formal textual representations, enabling precise language-based reasoning. Leveraging this pipeline, we construct the R1-Onevision dataset, which provides detailed, step-by-step multimodal reasoning annotations across diverse domains. We further develop the R1-Onevision model through supervised fine-tuning and reinforcement learning to cultivate advanced reasoning and robust generalization abilities. To comprehensively evaluate multimodal reasoning performance across different grades, we introduce R1-Onevision-Bench, a benchmark aligned with human educational stages, covering exams from junior high school to university and beyond. Experimental results show that R1-Onevision achieves state-of-the-art performance, outperforming models such as GPT-4o and Qwen2.5-VL on multiple challenging multimodal reasoning benchmarks.
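A benchmark aligned with educational stages is most informative when scores are reported per stage rather than as a single number. The sketch below is a minimal Python illustration of that kind of breakdown. The stage labels, the `(stage, prediction, reference)` record format, and the normalized exact-match scoring rule are all assumptions for illustration. They are not R1-Onevision-Bench's actual categories or evaluation protocol.

```python
from collections import defaultdict

# Hypothetical stage labels; the benchmark's actual categories may differ.
STAGES = ("junior high school", "senior high school", "university", "beyond")


def accuracy_by_stage(records):
    """records: iterable of (stage, predicted_answer, reference_answer) tuples.

    Returns accuracy per stage, in STAGES order, for stages that have at least one record.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for stage, pred, ref in records:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage!r}")
        total[stage] += 1
        # Exact match after whitespace and case normalization (an assumed scoring rule).
        if pred.strip().lower() == ref.strip().lower():
            correct[stage] += 1
    return {s: correct[s] / total[s] for s in STAGES if total[s]}


results = [
    ("junior high school", "5", "5"),
    ("junior high school", "12", "10"),
    ("university", "B", "b"),
]
print(accuracy_by_stage(results))  # {'junior high school': 0.5, 'university': 1.0}
```

Reporting per stage makes it visible whether a model's accuracy drops as problems move from school-level to university-level material, which a single aggregate score would hide.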