RAG-RewardBench: Benchmarking Reward Models in Retrieval Augmented Generation for Preference Alignment

Zhuoran Jin, Hongbang Yuan, Tianyi Men, Pengfei Cao, Yubo Chen, Kang Liu, Jun Zhao

2024-12-19

Summary

This paper presents RAG-RewardBench, a benchmark for evaluating the reward models used to align retrieval-augmented language models (RALMs) with human preferences. It tests reward models across several RAG-specific scenarios so that researchers can judge which ones are reliable enough to guide these systems.

What's the problem?

Retrieval-augmented language models have made progress in giving trustworthy responses grounded in reliable sources, but they often overlook alignment with human preferences. Reward models act as a proxy for human values during alignment, yet it has been unclear how to evaluate and select a reliable reward model for these systems.

What's the solution?

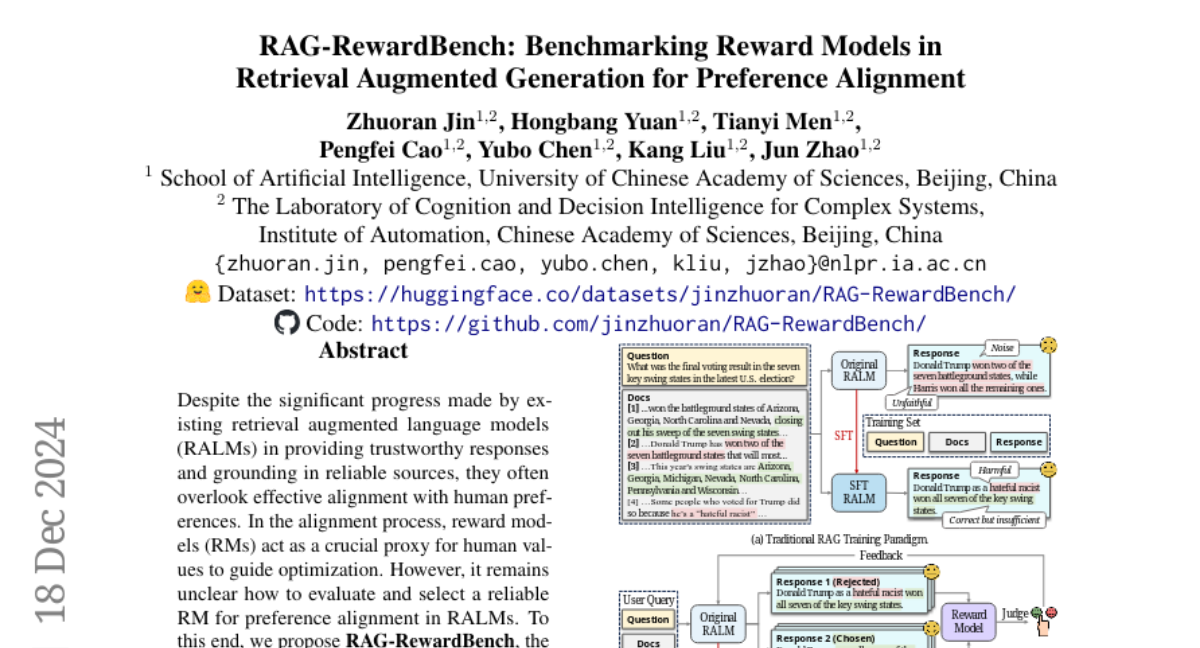

The authors created RAG-RewardBench, the first benchmark specifically designed to evaluate reward models in retrieval-augmented generation settings. They designed four challenging RAG-specific scenarios (multi-hop reasoning, fine-grained citation, appropriate abstention, and conflict robustness) and drew on 18 RAG subsets, six retrievers, and 24 RALMs to diversify the data sources. They also used a large language model as a judge to annotate preferences efficiently, and these annotations correlate strongly with human annotations.

Why does it matter?

This research is important because it gives a clear way to evaluate and select reward models for retrieval-augmented systems. Using the benchmark, the authors evaluated 45 reward models and uncovered their limitations in RAG scenarios. They also found that existing trained RALMs show almost no improvement in preference alignment, which points to a need for preference-aligned training. These findings are relevant to applications such as chatbots, virtual assistants, and other AI systems that answer questions from retrieved sources.

Abstract

Despite the significant progress made by existing retrieval augmented language models (RALMs) in providing trustworthy responses and grounding in reliable sources, they often overlook effective alignment with human preferences. In the alignment process, reward models (RMs) act as a crucial proxy for human values to guide optimization. However, it remains unclear how to evaluate and select a reliable RM for preference alignment in RALMs. To this end, we propose RAG-RewardBench, the first benchmark for evaluating RMs in RAG settings. First, we design four crucial and challenging RAG-specific scenarios to assess RMs, including multi-hop reasoning, fine-grained citation, appropriate abstain, and conflict robustness. Then, we incorporate 18 RAG subsets, six retrievers, and 24 RALMs to increase the diversity of data sources. Finally, we adopt an LLM-as-a-judge approach to improve preference annotation efficiency and effectiveness, exhibiting a strong correlation with human annotations. Based on the RAG-RewardBench, we conduct a comprehensive evaluation of 45 RMs and uncover their limitations in RAG scenarios. We also reveal that existing trained RALMs show almost no improvement in preference alignment, highlighting the need for a shift towards preference-aligned training. We release our benchmark and code publicly at https://huggingface.co/datasets/jinzhuoran/RAG-RewardBench/ for future work.
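
To make the evaluation idea concrete, here is a minimal sketch of how a reward model might be scored on preference pairs. It assumes pairwise accuracy as the metric (the fraction of pairs in which the reward model scores the preferred response above the rejected one), which is common for reward-model benchmarks but is not stated in the text above. The record fields `prompt`, `chosen`, and `rejected`, the function `pairwise_accuracy`, and the toy scorer `toy_length_score` are hypothetical names chosen for illustration, not the benchmark's actual schema or code.

```python
from typing import Callable, Dict, List

# Hypothetical record layout: a prompt (question plus retrieved passages)
# and two candidate responses, one preferred ("chosen") and one not ("rejected").
PreferencePair = Dict[str, str]


def pairwise_accuracy(
    pairs: List[PreferencePair],
    score: Callable[[str, str], float],
) -> float:
    """Fraction of pairs where the scorer ranks the chosen response higher."""
    if not pairs:
        raise ValueError("pairs must be non-empty")
    correct = sum(
        1
        for p in pairs
        if score(p["prompt"], p["chosen"]) > score(p["prompt"], p["rejected"])
    )
    return correct / len(pairs)


def toy_length_score(prompt: str, response: str) -> float:
    """Stand-in for a real reward model: simply prefers longer responses."""
    return float(len(response))


# Illustrative, made-up examples (not taken from the benchmark).
pairs = [
    {
        "prompt": "Who wrote Hamlet?",
        "chosen": "Shakespeare wrote Hamlet [1].",
        "rejected": "Unknown.",
    },
    {
        "prompt": "What is the capital of Atlantis?",
        "chosen": "I cannot answer from the given passages.",
        "rejected": "Paris.",
    },
    {
        "prompt": "What is 2 + 2?",
        "chosen": "4",
        "rejected": "The answer is probably 5.",
    },
]

accuracy = pairwise_accuracy(pairs, toy_length_score)
```

In this toy run the length-based scorer gets two of the three pairs right and fails on the third, where the correct answer is the shorter one. A real evaluation would replace `toy_length_score` with a call to an actual reward model and read the pairs from the released dataset.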