RATIONALYST: Pre-training Process-Supervision for Improving Reasoning

Dongwei Jiang, Guoxuan Wang, Yining Lu, Andrew Wang, Jingyu Zhang, Chuyu Liu, Benjamin Van Durme, Daniel Khashabi

2024-10-03

Summary

This paper presents RATIONALYST, a new model designed to improve the reasoning abilities of large language models (LLMs) by explicitly teaching them to understand and articulate their reasoning processes.

What's the problem?

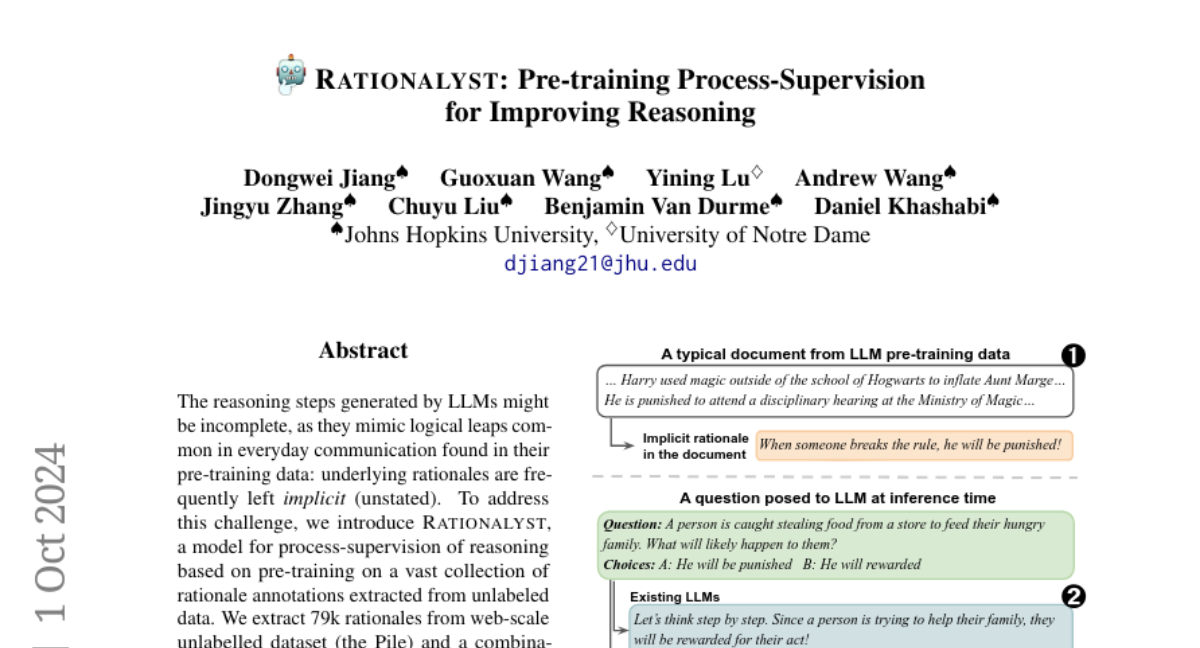

Many LLMs struggle with reasoning because they often skip important logical steps or leave them unstated, which can lead to incomplete or incorrect answers. This happens because these models are trained on data that reflects everyday communication, where not all reasoning steps are clearly expressed.

What's the solution?

RATIONALYST addresses this issue by using a process called 'process supervision.' It extracts 79,000 implicit rationales (hidden reasoning steps) from a large dataset and trains the model to recognize and generate these rationales during its reasoning process. This helps the model to clearly articulate each step it takes to arrive at an answer. The model was fine-tuned from LLaMa-3-8B and showed an average accuracy improvement of 3.9% across various reasoning tasks compared to other models, including larger ones like GPT-4.

Why it matters?

This research is significant because it enhances the ability of AI systems to reason more effectively, making them more reliable for complex tasks like math or logical problem-solving. By improving how LLMs articulate their reasoning, RATIONALYST can help create AI that is not only smarter but also easier for humans to understand and trust.

Abstract

The reasoning steps generated by LLMs might be incomplete, as they mimic logical leaps common in everyday communication found in their pre-training data: underlying rationales are frequently left implicit (unstated). To address this challenge, we introduce RATIONALYST, a model for process-supervision of reasoning based on pre-training on a vast collection of rationale annotations extracted from unlabeled data. We extract 79k rationales from web-scale unlabelled dataset (the Pile) and a combination of reasoning datasets with minimal human intervention. This web-scale pre-training for reasoning allows RATIONALYST to consistently generalize across diverse reasoning tasks, including mathematical, commonsense, scientific, and logical reasoning. Fine-tuned from LLaMa-3-8B, RATIONALYST improves the accuracy of reasoning by an average of 3.9% on 7 representative reasoning benchmarks. It also demonstrates superior performance compared to significantly larger verifiers like GPT-4 and similarly sized models fine-tuned on matching training sets.