RE-AdaptIR: Improving Information Retrieval through Reverse Engineered Adaptation

William Fleshman, Benjamin Van Durme

2024-06-24

Summary

This paper introduces RE-AdaptIR, a new method that enhances information retrieval systems using large language models (LLMs). It focuses on improving how these models find and deliver relevant information without needing a lot of labeled training data.

What's the problem?

Improving LLMs for information retrieval typically requires many labeled examples to train on, which can be hard to get and costly. This makes it difficult to enhance the performance of these models in real-world applications, especially when there is a lack of available data.

What's the solution?

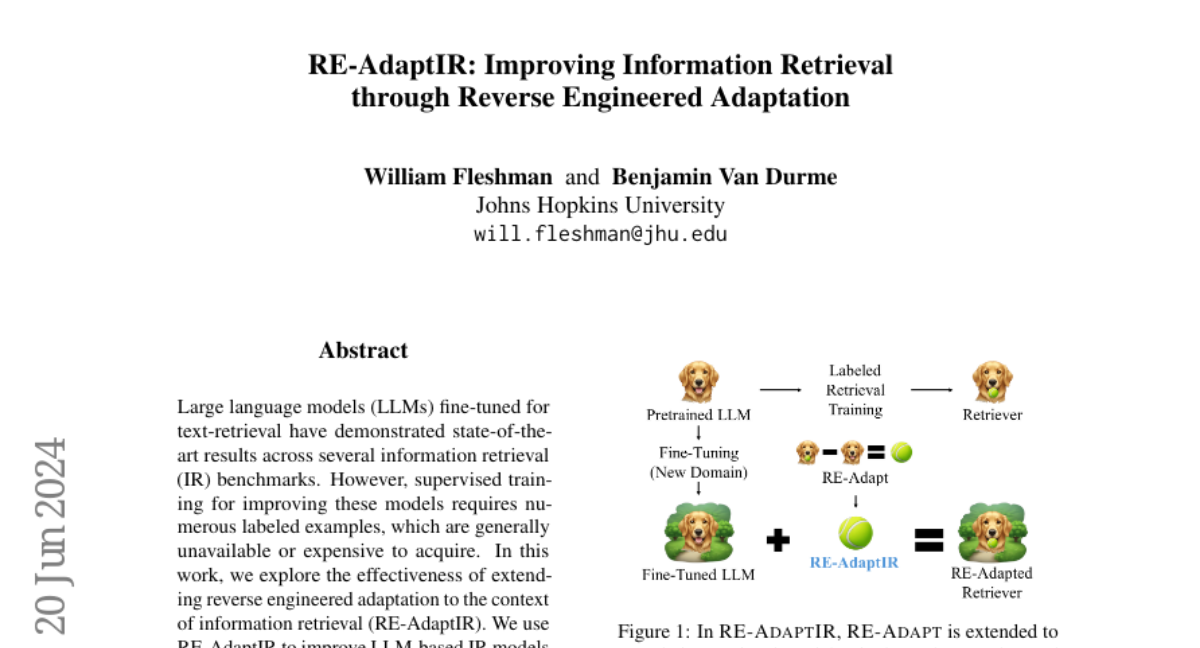

The authors developed RE-AdaptIR to allow LLMs to learn from unlabeled data instead of relying on expensive labeled examples. This method uses reverse-engineered adaptation techniques to improve the models' ability to retrieve information. They tested this approach in various scenarios, including situations where the models had never encountered specific queries before, and found that it significantly boosted performance.

Why it matters?

This research is important because it provides a way to enhance information retrieval systems without the need for extensive labeled datasets. By making it easier and cheaper to train these models, RE-AdaptIR could lead to better search engines and information systems that are more effective and user-friendly.

Abstract

Large language models (LLMs) fine-tuned for text-retrieval have demonstrated state-of-the-art results across several information retrieval (IR) benchmarks. However, supervised training for improving these models requires numerous labeled examples, which are generally unavailable or expensive to acquire. In this work, we explore the effectiveness of extending reverse engineered adaptation to the context of information retrieval (RE-AdaptIR). We use RE-AdaptIR to improve LLM-based IR models using only unlabeled data. We demonstrate improved performance both in training domains as well as zero-shot in domains where the models have seen no queries. We analyze performance changes in various fine-tuning scenarios and offer findings of immediate use to practitioners.